Contrastive Learning

Quick Navigation:

- Contrastive Learning Definition

- Contrastive Learning Explained Easy

- Contrastive Learning Origin

- Contrastive Learning Etymology

- Contrastive Learning Usage Trends

- Contrastive Learning Usage

- Contrastive Learning Examples in Context

- Contrastive Learning FAQ

- Contrastive Learning Related Words

Contrastive Learning Definition

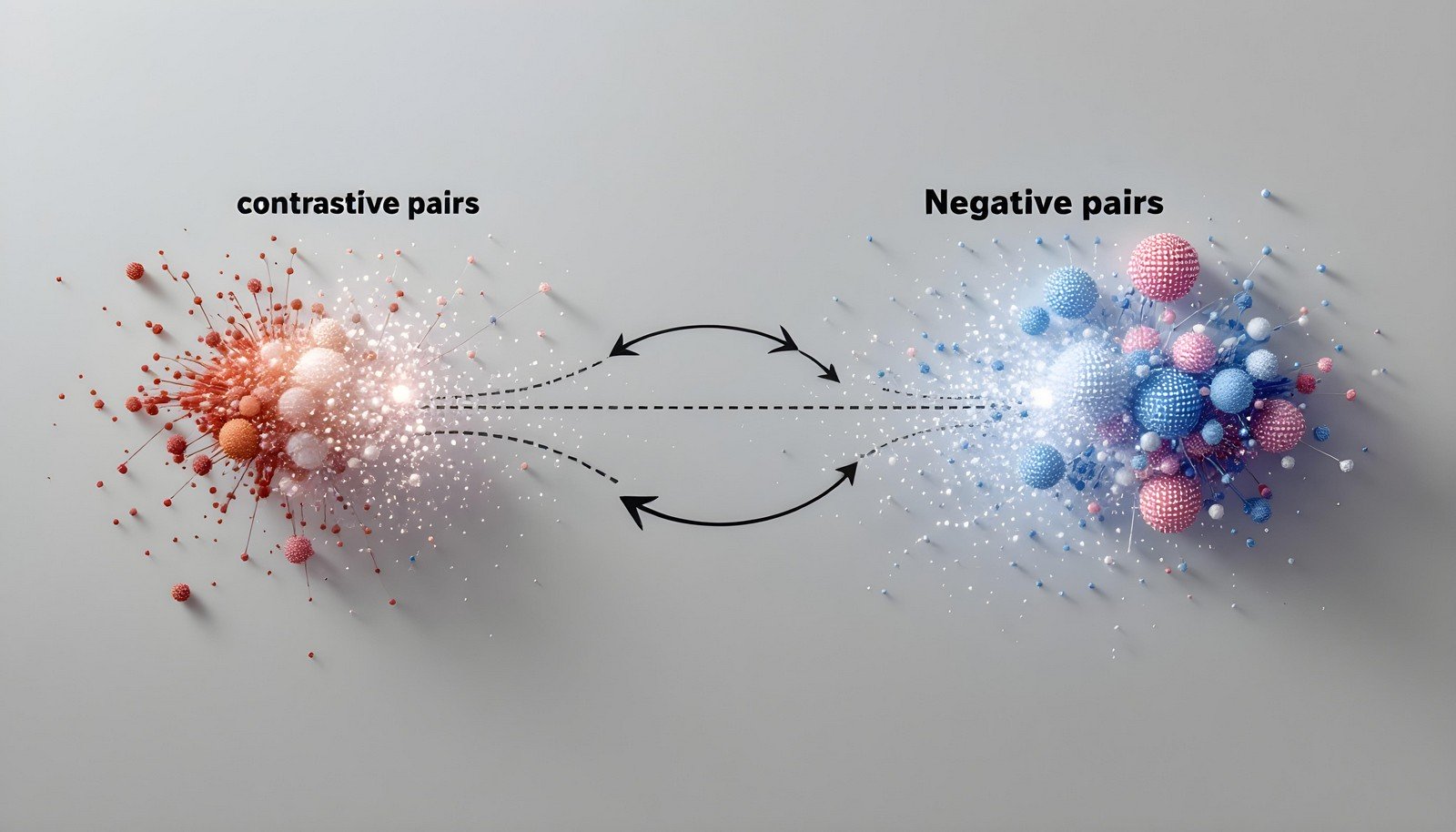

Contrastive learning is a machine learning technique that learns representations by distinguishing between similar and dissimilar data points. Models learn by comparing pairs of examples: related examples (positive pairs) and unrelated examples (negative pairs). The objective is to minimize the distance between the representations of positive pairs while maximizing the distance between those of negative pairs. The technique is widely used in self-supervised and other unsupervised settings, particularly in computer vision and natural language processing, where obtaining reliable labels can be costly or impractical. By learning to contrast data points, the model produces feature representations (embeddings) that capture which inputs are alike, which improves its ability to recognize patterns in downstream tasks.
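The "pull positives together, push negatives apart" objective can be made concrete with a margin-based pairwise contrastive loss. The Python below is a minimal sketch under stated assumptions: embeddings are plain lists of floats, distance is Euclidean, and the margin of 1.0 and the toy vectors are illustrative choices rather than values from any particular framework. The function names are hypothetical.

```python
import math
from typing import Sequence


def euclidean_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """L2 distance between two embedding vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def pairwise_contrastive_loss(
    a: Sequence[float],
    b: Sequence[float],
    is_positive: bool,
    margin: float = 1.0,  # illustrative value (assumption)
) -> float:
    """Margin-based contrastive loss for one pair of embeddings.

    Positive pairs pay their squared distance, so training pulls them together.
    Negative pairs pay only while they are closer than `margin`, so training
    pushes them apart until they are at least `margin` away, then stops.
    """
    d = euclidean_distance(a, b)
    if is_positive:
        return d ** 2
    return max(0.0, margin - d) ** 2


# Toy 2-D embeddings (hypothetical values).
anchor = [0.0, 0.0]
near = [0.1, 0.0]  # distance 0.1 from the anchor
far = [3.0, 4.0]   # distance 5.0 from the anchor

print(pairwise_contrastive_loss(anchor, near, is_positive=True))   # small: the pair is already close
print(pairwise_contrastive_loss(anchor, far, is_positive=False))   # 0.0: already beyond the margin
print(pairwise_contrastive_loss(anchor, near, is_positive=False))  # about 0.81: a negative that sits too close
```

Implementations of this loss differ in constant factors (some multiply each term by 1/2) and in the distance they use, so treat this as one common shape of the idea rather than a reference definition.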

Contrastive Learning Explained Easy

Imagine you have two friends: one loves sports and the other dislikes them. To remember who likes what, you compare them. You notice that one friend wears soccer jerseys and the other doesn't. By comparing their likes and dislikes, you remember their preferences better. Contrastive learning works in a similar way: a computer learns to identify things by comparing them, grouping similar things together and keeping different things apart.

Contrastive Learning Origin

The concept of contrastive learning grew out of the search for ways to train models to recognize features and representations without extensive labeled data. Contrastive loss functions for Siamese networks date back to the mid-2000s, and research through the 2010s demonstrated that comparing data points, rather than relying solely on labels, could enhance model learning. The approach gained traction in self-supervised computer vision, where it became a core technique for learning from images and videos.

Contrastive Learning Etymology

The term "contrastive" stems from the word "contrast," meaning to compare differences. In contrastive learning, this comparison is central, as models distinguish between different data points to learn representations.

Contrastive Learning Usage Trends

Contrastive learning has become increasingly popular with the rise of unsupervised and self-supervised learning techniques. It's used extensively in applications like image classification, recommendation systems, and natural language processing. Notable frameworks like SimCLR, MoCo, and CLIP have advanced this field, especially in computer vision and multimodal learning applications. The technique’s relevance has grown alongside the need for models that perform well without requiring vast amounts of labeled data.
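Frameworks such as SimCLR and MoCo score each positive against many negatives at once rather than one pair at a time. Below is a minimal single-anchor sketch of this InfoNCE-style loss; it is a simplified illustration, not code from either framework. It assumes cosine similarity and a temperature of 0.1 (an illustrative value), and the helper names are hypothetical.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero vectors, in [-1, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def info_nce_loss(
    anchor: Sequence[float],
    positive: Sequence[float],
    negatives: Sequence[Sequence[float]],
    temperature: float = 0.1,  # illustrative value (assumption)
) -> float:
    """Cross-entropy of picking `positive` out of {positive} + negatives."""
    # Temperature-scaled similarities; index 0 is the positive.
    logits = [cosine_similarity(anchor, positive) / temperature]
    logits += [cosine_similarity(anchor, n) / temperature for n in negatives]
    # Numerically stable log-sum-exp over all candidates.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    # Negative log of the softmax probability assigned to the positive.
    return log_sum_exp - logits[0]


anchor = [1.0, 0.0]
good = info_nce_loss(anchor, [0.9, 0.1], [[0.0, 1.0], [-1.0, 0.0]])
bad = info_nce_loss(anchor, [0.0, 1.0], [[0.9, 0.1], [-1.0, 0.0]])
print(good < bad)  # True: the loss is lower when the positive really is the most similar
```

Lower temperatures sharpen the softmax, so the negatives most similar to the anchor dominate the loss. In SimCLR the negatives for each example come from the other examples in the same batch, while MoCo keeps a queue of embeddings from earlier batches.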

Contrastive Learning Usage

- Formal/Technical Tagging: unsupervised learning, self-supervised learning, similarity learning, deep learning

- Typical Collocations: contrastive learning methods, positive and negative pairs, feature representation, similarity metric, contrastive loss function, self-supervised model

Contrastive Learning Examples in Context

"In unsupervised image recognition, contrastive learning helps models learn to differentiate between objects without explicit labels."

"Researchers used contrastive learning to improve their recommendation engine, focusing on user behavior similarities."

"In language models, contrastive learning is used to understand semantic similarities by contrasting sentence pairs."

Contrastive Learning FAQ

- What is contrastive learning used for?

It's used to teach models to differentiate between similar and dissimilar data points.

- How does contrastive learning differ from supervised learning?

Supervised learning trains a model to predict labels. Self-supervised contrastive learning does not require labeled data; instead, it learns from which examples are similar or dissimilar.

- What industries benefit from contrastive learning?

Fields like image recognition, recommendation systems, and natural language processing benefit greatly.

- Is contrastive learning only for unsupervised learning?

No. It is used primarily in unsupervised and self-supervised settings, but it can also enhance supervised learning: supervised contrastive methods use labels to decide which examples form positive pairs, refining feature representations.

- What are positive and negative pairs in contrastive learning?

Positive pairs are related data points (for example, two augmented views of the same image), and negative pairs are unrelated ones (for example, views of two different images). The model is trained to bring positive pairs together and push negative pairs apart.

- What is contrastive loss in this context?

Contrastive loss is a function that minimizes the distance between positive pairs and increases the distance between negative pairs, typically until they are separated by at least a chosen margin.

- What is SimCLR in relation to contrastive learning?

SimCLR is a popular framework from Google Research for self-supervised contrastive learning of visual representations, which are then used for tasks such as image classification.

- Can contrastive learning be used in text processing?

Yes. It's used to capture semantic similarities and differences between sentences or words.

- Why is contrastive learning important for AI development?

It enables AI systems to learn useful representations from limited labeled data, making training more scalable and flexible.

- What are some common challenges in contrastive learning?

Selecting effective positive and negative pairs can be difficult, especially in complex datasets; for example, an example chosen as a "negative" may actually be similar to the anchor.
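The FAQ's points about positive pairs, negative pairs, and pair selection can be illustrated with the in-batch scheme used by SimCLR-style methods: two augmented views of the same example form a positive pair, and views of different examples form negative pairs. The sketch below assumes a hypothetical `augment` function that jitters a feature vector with Gaussian noise; real pipelines use domain-specific augmentations such as image crops and color changes.

```python
import random
from typing import List, Tuple

Vector = List[float]
Pair = Tuple[Vector, Vector]


def augment(x: Vector, rng: random.Random, noise: float = 0.05) -> Vector:
    """Hypothetical augmentation: add small Gaussian noise to every feature."""
    return [v + rng.gauss(0.0, noise) for v in x]


def make_pairs(batch: List[Vector], seed: int = 0) -> Tuple[List[Pair], List[Pair]]:
    """Build positive and negative pairs from one batch (in-batch negatives)."""
    rng = random.Random(seed)
    # Two independently augmented "views" of every example.
    views = [(augment(x, rng), augment(x, rng)) for x in batch]
    positives: List[Pair] = []
    negatives: List[Pair] = []
    for i, (view_a, view_b) in enumerate(views):
        # Same source example -> positive pair.
        positives.append((view_a, view_b))
        for j, (other_view, _) in enumerate(views):
            if j != i:
                # Different source examples -> negative pair.
                negatives.append((view_a, other_view))
    return positives, negatives


batch = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
positives, negatives = make_pairs(batch)
print(len(positives), len(negatives))  # 3 6
```

With a batch of N examples this yields N positive pairs and N(N - 1) negative pairs. It also shows the pair-selection challenge: if two different examples in the batch are semantically alike, they are still treated as a negative pair (a "false negative").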

Contrastive Learning Related Words

- Categories/Topics: unsupervised learning, computer vision, natural language processing, similarity learning

- Word Families: contrast, contrasting, contrastive, contrastively

Did you know?

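Contrastive learning took a major leap forward with CLIP, a model released by OpenAI in 2021 that learned to connect images and text by contrasting matching image-caption pairs against mismatched ones. This allowed the model to interpret images based on textual descriptions, for example classifying an image by comparing it with captions such as "a photo of a dog" without task-specific training, a major step in multimodal learning.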
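CLIP's comparison step can be pictured as a similarity matrix between a batch of images and their captions: each image should score highest with its own caption, and each caption with its own image. The sketch below is a simplified, hypothetical illustration of such a symmetric contrastive loss, not OpenAI's code. It assumes the image and text embeddings have already been produced by separate encoders, and it uses a fixed temperature of 0.07 as an illustrative value (CLIP learns its temperature during training).

```python
import math
from typing import List, Sequence


def normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """-log softmax(logits)[target], computed with a stable log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def clip_style_loss(
    image_embs: Sequence[Sequence[float]],
    text_embs: Sequence[Sequence[float]],
    temperature: float = 0.07,  # fixed here for illustration (assumption)
) -> float:
    """Symmetric contrastive loss: image i should match caption i and vice versa."""
    if len(image_embs) != len(text_embs):
        raise ValueError("need one caption per image")
    images = [normalize(v) for v in image_embs]
    texts = [normalize(v) for v in text_embs]
    n = len(images)
    # logits[i][j] = scaled cosine similarity of image i and caption j.
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
        for img in images
    ]
    # Image -> text: pick the correct caption for each image (rows).
    image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Text -> image: pick the correct image for each caption (columns).
    text_to_image = sum(
        cross_entropy([logits[i][j] for i in range(n)], j) for j in range(n)
    ) / n
    return (image_to_text + text_to_image) / 2


images = [[1.0, 0.0], [0.0, 1.0]]
matched = clip_style_loss(images, [[0.9, 0.1], [0.1, 0.9]])
swapped = clip_style_loss(images, [[0.1, 0.9], [0.9, 0.1]])
print(matched < swapped)  # True: correctly paired captions give a lower loss
```

Averaging the two directions keeps the loss symmetric, so neither modality is privileged, and every other caption in the batch acts as a negative for a given image.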

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
