Federated Averaging

Quick Navigation:

- Federated Averaging Definition

- Federated Averaging Explained Easy

- Federated Averaging Origin

- Federated Averaging Etymology

- Federated Averaging Usage Trends

- Federated Averaging Usage

- Federated Averaging Examples in Context

- Federated Averaging FAQ

- Federated Averaging Related Words

Federated Averaging Definition

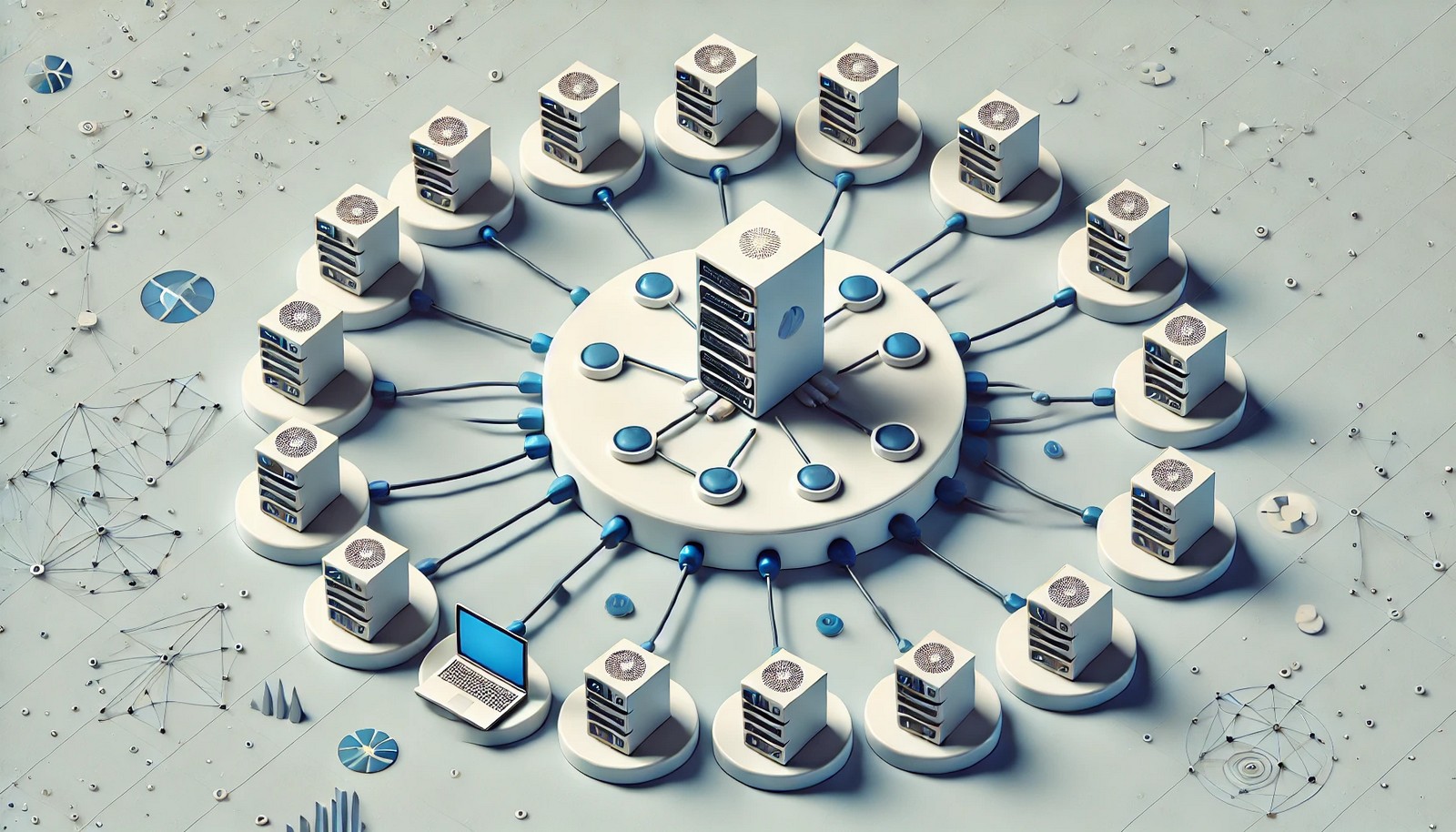

Federated Averaging (often abbreviated FedAvg) is a key algorithm in federated learning that lets models train collaboratively across decentralized devices or servers without sharing the raw data used to train them. Each participant trains the model on its own data, and the resulting model updates are combined by averaging, typically weighted by how much data each participant holds, to produce a global model that reflects what all participants have learned. Because the raw data stays where it was collected, Federated Averaging is well suited to sectors like healthcare and finance, where data often cannot be centralized due to privacy concerns.
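The averaging step described above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `federated_average`, the flat-list representation of model parameters, and the example numbers are all assumptions made for this sketch.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Average client parameter vectors, weighted by local sample counts.

    Hypothetical helper: each client's model is assumed to be a flat list
    of floats, and client_sizes[i] is the number of training examples
    held by client i.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need exactly one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all clients must send vectors of the same length")

    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        share = size / total  # this client's fraction of all training data
        for i, value in enumerate(weights):
            averaged[i] += share * value
    return averaged


# Three made-up clients with different amounts of local data.
clients = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [10, 30, 60]
global_weights = federated_average(clients, sizes)
print(global_weights)  # close to [4.0, 5.0], up to floating-point rounding
```

Note that only the parameter vectors and sample counts reach the aggregation function; the training examples themselves never appear in it.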

Federated Averaging Explained Easy

Imagine you and your friends each learn a skill on your own, and then you all share what you learned with a group leader who combines the best parts of everyone’s knowledge into a new guide. Federated Averaging works similarly, collecting knowledge from different places without needing everyone to share their own secrets.

Federated Averaging Origin

Federated Averaging was introduced by Google researchers in 2016, together with federated learning itself, as a way to train machine learning models directly on users’ devices. Its development was driven by the need to improve user privacy in sensitive applications like text prediction and health monitoring.

Federated Averaging Etymology

The term "federated" relates to federations, implying a union of multiple groups or systems. "Averaging" refers to the process of combining model updates into a single, averaged result, forming the basis for the algorithm's global model.
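The "averaging" part is commonly written as a weighted sum. The notation below is an assumption chosen for illustration: $K$ participating clients, $n_k$ training examples on client $k$, and $w_{t+1}^{k}$ for the parameters client $k$ returns after local training in round $t$.

```latex
w_{t+1} = \sum_{k=1}^{K} \frac{n_k}{n} \, w_{t+1}^{k},
\qquad n = \sum_{k=1}^{K} n_k
```

When every client holds the same amount of data, this reduces to a plain arithmetic mean of the client models.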

Federated Averaging Usage Trends

The use of Federated Averaging has expanded as data privacy concerns grow. Federated learning is increasingly applied in industries like healthcare, finance, and telecommunications, where Federated Averaging makes it possible to train a shared model without moving raw data off local systems. This trend aligns with the growing number of data privacy laws worldwide, which make decentralized model training a priority.

Federated Averaging Usage

- Formal/Technical Tagging:

- Federated Learning

- Machine Learning

- Data Privacy

- Decentralized Computing

- Typical Collocations:

- "federated averaging algorithm"

- "global model aggregation"

- "privacy-preserving model training"

- "federated learning framework with federated averaging"

Federated Averaging Examples in Context

- In healthcare, Federated Averaging allows hospitals to collaboratively train a predictive model for disease detection without sharing sensitive patient data.

- Federated Averaging is used in mobile devices to improve on-device models, such as text prediction and speech recognition, without sending users' raw data to a central server.

- Telecom companies employ Federated Averaging to enhance predictive models across devices without compromising users' personal information.
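To make scenarios like these concrete, here is a small simulation of several training rounds. It is a sketch under stated assumptions, not a production setup: the three "clients" hold synthetic data generated from the line y = 2x + 1, the model is a one-variable linear regression, and the names `local_train`, `fedavg_round`, and `make_client` are hypothetical.

```python
import random
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs held privately by one client


def local_train(w: float, b: float, data: Dataset,
                epochs: int, lr: float) -> Tuple[float, float]:
    """Run plain SGD on y ~ w*x + b using only this client's data."""
    for _ in range(epochs):
        for x, y in data:
            error = (w * x + b) - y
            w -= lr * error * x
            b -= lr * error
    return w, b


def fedavg_round(w: float, b: float, clients: List[Dataset],
                 epochs: int = 5, lr: float = 0.05) -> Tuple[float, float]:
    """One round: every client trains locally, then results are averaged."""
    total = sum(len(data) for data in clients)
    new_w, new_b = 0.0, 0.0
    for data in clients:
        client_w, client_b = local_train(w, b, data, epochs, lr)
        share = len(data) / total  # weight by local dataset size
        new_w += share * client_w
        new_b += share * client_b
    return new_w, new_b


def make_client(n: int, rng: random.Random,
                x_low: float, x_high: float) -> Dataset:
    """Synthetic data from y = 2x + 1 with a little noise (illustrative only)."""
    data = []
    for _ in range(n):
        x = rng.uniform(x_low, x_high)
        data.append((x, 2.0 * x + 1.0 + rng.gauss(0.0, 0.01)))
    return data


rng = random.Random(0)
# Each client sees a different range of x, so local data is not identically distributed.
clients = [
    make_client(20, rng, 0.0, 1.0),
    make_client(50, rng, 0.5, 1.5),
    make_client(30, rng, 1.0, 2.0),
]

w, b = 0.0, 0.0  # initial global model
for _ in range(50):
    w, b = fedavg_round(w, b, clients)
print(round(w, 2), round(b, 2))  # should land near the true values 2 and 1
```

In a real deployment the loop over `clients` would run on separate devices or institutions, and only `client_w`, `client_b`, and the sample count would travel over the network.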

Federated Averaging FAQ

- What is Federated Averaging?

Federated Averaging is an algorithm that combines locally trained model updates into a global model in federated learning.

- How does Federated Averaging maintain data privacy?

It allows devices to update a central model without directly sharing the raw data, only sending model updates.

- Where is Federated Averaging applied?

It’s widely used in healthcare, finance, and mobile applications for privacy-preserving model training.

- Who developed Federated Averaging?

Google introduced it as part of federated learning research in 2016.

- How does Federated Averaging benefit healthcare?

It enables hospitals to collaboratively improve predictive models without sharing sensitive patient information.

- Is Federated Averaging only for smartphones?

No, it’s used across various devices, including medical equipment and IoT systems, where privacy is essential.

- Can Federated Averaging improve model accuracy?

Yes, by averaging updates from multiple devices, it produces a comprehensive model that reflects diverse learning sources.

- How is Federated Averaging different from central model training?

Federated Averaging doesn’t centralize data; only model updates are shared, protecting individual data.

- What are the challenges of Federated Averaging?

Challenges include device variability, ensuring consistent updates, and handling data that’s not uniformly distributed.

- How does Federated Averaging relate to federated learning?

It’s the core method used in federated learning to aggregate individual model updates while maintaining privacy.
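Several answers above refer to devices sending "model updates" rather than data. One common way to read that is as a difference between the locally trained parameters and the global parameters the device started from. The sketch below assumes that reading; `compute_update` and `apply_updates` are hypothetical names, and the numbers are invented.

```python
from typing import List, Sequence


def compute_update(global_weights: Sequence[float],
                   local_weights: Sequence[float]) -> List[float]:
    """What a device would send: how far local training moved each parameter."""
    return [local - glob for local, glob in zip(local_weights, global_weights)]


def apply_updates(global_weights: Sequence[float],
                  updates: Sequence[Sequence[float]],
                  sizes: Sequence[int]) -> List[float]:
    """Server side: add the size-weighted average update to the global model."""
    total = sum(sizes)
    new_weights = list(global_weights)
    for update, size in zip(updates, sizes):
        share = size / total
        for i, delta in enumerate(update):
            new_weights[i] += share * delta
    return new_weights


global_model = [1.0, 1.0]
local_models = [[2.0, 1.0], [1.0, 3.0]]  # results of local training on two devices
sizes = [1, 3]                           # local example counts

updates = [compute_update(global_model, local) for local in local_models]
new_global = apply_updates(global_model, updates, sizes)
print(updates)     # [[1.0, 0.0], [0.0, 2.0]]
print(new_global)  # [1.25, 2.5]
```

Averaging the updates and adding them to the global model gives the same result as averaging the local models directly, as long as the weights sum to one.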

Federated Averaging Related Words

- Categories/Topics:

- Decentralized Computing

- Privacy

- Machine Learning

- Federated Learning

Did you know?

Federated Averaging plays a critical role in mobile applications, where it's used in predictive keyboards. Models are trained locally on each device, and only the resulting model updates are sent to be aggregated, so companies can enhance the user experience while typed text stays on the device. This approach has made Federated Averaging a pioneering technology in privacy-preserving AI.

Authors | Arjun Vishnu | @ArjunAndVishnu
