Fine-tuning in ML

Quick Navigation:

- Fine-tuning Definition

- Fine-tuning Explained Easy

- Fine-tuning Origin

- Fine-tuning Etymology

- Fine-tuning Usage Trends

- Fine-tuning Usage

- Fine-tuning Examples in Context

- Fine-tuning FAQ

- Fine-tuning Related Words

Fine-tuning Definition

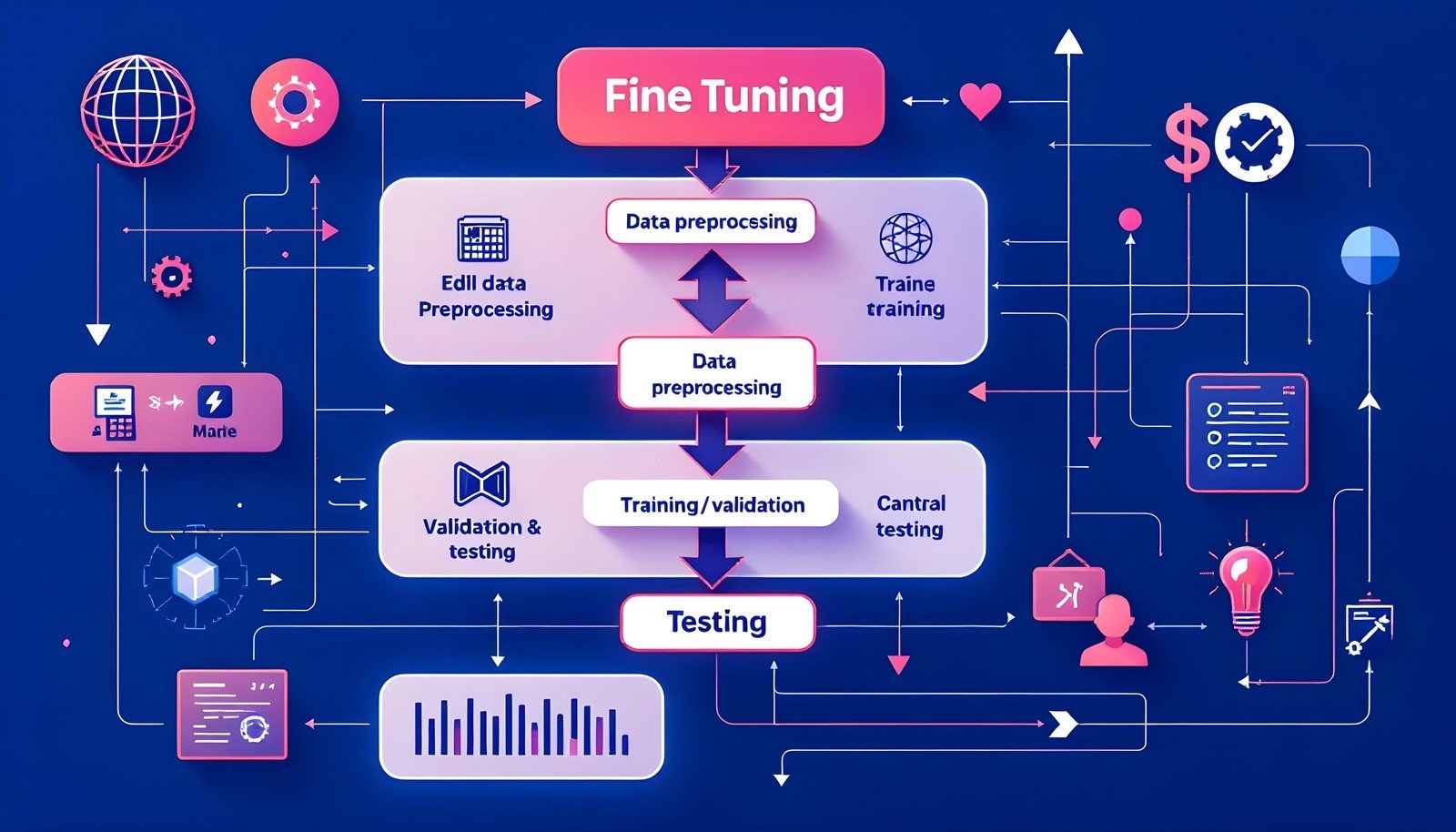

Fine-tuning refers to the process of making small, incremental adjustments to a model, system, or piece of equipment to achieve optimal performance. In machine learning, fine-tuning is used to adapt a pre-trained model to a new dataset or task, allowing it to specialize without being trained from scratch. This involves further adjusting the model's weights (its learned parameters) to improve its accuracy on the new data. Technically, it is a form of transfer learning, in which a model trained on one task is further trained ("tuned") on a related task with new data.
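To make the definition concrete, here is a minimal, hypothetical sketch of the transfer-learning idea using only the Python standard library. A tiny one-variable linear model is first "pre-trained" on a source task with plenty of data, then fine-tuned on a related target task with only four examples, and compared with the same model trained from scratch on the same small budget. The two tasks (y = 2x + 1 and y = 2x + 1.5), the learning rate, and the step counts are illustrative assumptions, not taken from any real system; real fine-tuning adjusts far more weights, but the principle of continuing training from pre-trained values is the same.

```python
"""Toy transfer learning: pre-train a 1-D linear model, then fine-tune it.

Hypothetical setup: the "source task" is y = 2x + 1 with plenty of data,
and the related "target task" is y = 2x + 1.5 with only four examples.
"""


def mse(params, data):
    """Mean squared error of the model y = w * x + b on `data`."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(params, data, lr, steps):
    """Plain gradient descent on mean squared error, starting from `params`."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        # Both gradients are computed from the current (w, b) before updating.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


# Source task: many examples on a grid from -2.0 to 2.0.
source = [(k / 10, 2 * (k / 10) + 1) for k in range(-20, 21)]
# Target task: a related function, but only four examples.
target = [(x, 2 * x + 1.5) for x in (-1.0, -0.5, 0.5, 1.0)]

# "Pre-training" from zero-initialized weights on the large source dataset.
pretrained = train((0.0, 0.0), source, lr=0.1, steps=500)

# Same small budget for both runs: 20 steps on the target data.
fine_tuned = train(pretrained, target, lr=0.1, steps=20)
from_scratch = train((0.0, 0.0), target, lr=0.1, steps=20)

print(f"pre-trained params: w={pretrained[0]:.3f}, b={pretrained[1]:.3f}")
print(f"target loss, fine-tuned:   {mse(fine_tuned, target):.4f}")
print(f"target loss, from scratch: {mse(from_scratch, target):.4f}")
```

Because the pre-trained model already has the right slope, fine-tuning only has to nudge the intercept, so with the same 20-step budget it reaches a much lower loss on the target task than the model trained from scratch.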

Fine-tuning Explained Easy

Fine-tuning is like adding the final touches to a drawing that you’ve already worked on. The main picture is there, but you make small changes so it looks just right. In computers and AI, fine-tuning means taking a program that already knows how to do something and teaching it to do something similar but in a more specific way, like making it really good at identifying pictures of cats after it already knows how to identify animals.

Fine-tuning Origin

The concept of fine-tuning originally comes from physics and engineering, where it refers to adjusting mechanical systems or instruments for the best results. Over time, this term was adopted by the field of machine learning and artificial intelligence to describe refining a pre-trained model.

Fine-tuning Etymology

The word ‘fine-tuning’ is derived from ‘fine’, meaning ‘small’ or ‘detailed’, and ‘tune’, which refers to the adjustment of an instrument or device for optimal performance.

Fine-tuning Usage Trends

Fine-tuning has seen a significant increase in usage within the technology sector, particularly in AI and machine learning, over the past decade. This is due to the growth of pre-trained models like BERT and GPT, which are commonly fine-tuned for specific applications such as language translation, sentiment analysis, and question-answering systems. Industries beyond tech, including healthcare, finance, and marketing, have also adopted fine-tuning to tailor AI solutions to their own data and needs.

Fine-tuning Usage

- Formal/Technical Tagging: Machine learning, optimization, model training, transfer learning

- Typical Collocations: fine-tuning models, fine-tuning algorithms, fine-tuning data, pre-trained model fine-tuning

Fine-tuning Examples in Context

- “The team used fine-tuning on the pre-trained language model to customize it for their medical data analysis project.”

- “With some fine-tuning, the AI system was able to classify customer reviews by sentiment with high accuracy.”

- “The startup fine-tuned a voice recognition system to better understand different regional accents.”

Fine-tuning FAQ

- What is fine-tuning in machine learning?

Fine-tuning is the process of taking a pre-trained model and training it further on a specific dataset to make it more accurate for a particular task.

- Why is fine-tuning important?

It saves time and computing resources because it reuses what a pre-trained model has already learned and improves its performance on a new task without starting from scratch.

- What are common examples of fine-tuning?

Examples include adapting a general language model for specific tasks such as customer service chatbots or medical diagnosis.

- How does fine-tuning differ from training a model from scratch?

Training from scratch requires extensive data and computational power, whereas fine-tuning starts from an existing trained model and adapts it to a specific task, usually with much less data and compute.

- Can fine-tuning be applied outside of machine learning?

Yes. Fine-tuning can refer to any scenario where minor adjustments are made for improved performance, such as tuning musical instruments or engineering systems.

- What types of models benefit most from fine-tuning?

Large pre-trained models, such as neural networks for natural language processing or image classification, benefit most from fine-tuning.

- Is fine-tuning always necessary?

No, it depends on the use case. For very general tasks, a pre-trained model may be sufficient as is, but for specialized tasks, fine-tuning is usually helpful.

- What are some common techniques used in fine-tuning?

Common techniques include freezing the early layers so that only the last few layers are trained, and using a smaller learning rate than was used during pre-training.

- How long does fine-tuning typically take?

It depends on the size of the model and of the dataset, typically ranging from minutes to several hours, and longer for very large models.

- Are there risks in fine-tuning?

Yes. If not done carefully, it can lead to overfitting, where the model performs well on the fine-tuning data but poorly on new data. The risk is greater when the fine-tuning dataset is small.
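The techniques and the overfitting risk mentioned in this FAQ can be sketched together. In this hypothetical, standard-library-only Python example, a fixed first layer (`FROZEN_W`) stands in for the early layers of a pre-trained network and is never updated; only a new output layer (the "head") is trained, with a small learning rate, and training stops early once the loss on a held-out validation set stops improving, which is one common guard against overfitting. The layer weights, the synthetic task (label 1 when x[0] > x[1]), and all hyperparameters are illustrative assumptions, not a recipe from any particular library.

```python
import math
import random

random.seed(0)  # deterministic synthetic data

# Hypothetical "pre-trained" first layer. It is frozen: never updated below.
FROZEN_W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, -1.0]]


def features(x):
    """Frozen layer: ReLU(FROZEN_W @ x)."""
    return [max(0.0, w0 * x[0] + w1 * x[1]) for w0, w1 in FROZEN_W]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(head, x):
    """Trainable head: logistic regression on the frozen features."""
    v, c = head
    return sigmoid(sum(vi * hi for vi, hi in zip(v, features(x))) + c)


def log_loss(head, data):
    """Average binary cross-entropy of the head on `data`."""
    eps = 1e-12
    total = 0.0
    for x, y in data:
        p = predict(head, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)


def make_data(n):
    """Synthetic task: label 1 when x[0] > x[1], else 0."""
    data = []
    for _ in range(n):
        x = (random.uniform(-1, 1), random.uniform(-1, 1))
        data.append((x, 1 if x[0] > x[1] else 0))
    return data


def fine_tune_head(train_data, val_data, lr=0.05, max_epochs=200, patience=10):
    """SGD on the head only, keeping the weights with the best validation loss."""
    v, c = [0.0] * len(FROZEN_W), 0.0
    best_head, best_val = (list(v), c), log_loss((v, c), val_data)
    epochs_without_improvement = 0
    for epoch in range(1, max_epochs + 1):
        for x, y in train_data:
            h = features(x)
            err = predict((v, c), x) - y  # d(log loss)/dz for a sigmoid output
            v = [vi - lr * err * hi for vi, hi in zip(v, h)]
            c -= lr * err
        val_loss = log_loss((v, c), val_data)
        if val_loss < best_val:
            best_head, best_val = (list(v), c), val_loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # early stopping: validation loss stopped improving
    return best_head, best_val, epoch


train_set, val_set = make_data(60), make_data(40)
head, val_loss, epochs_run = fine_tune_head(train_set, val_set)
accuracy = sum((predict(head, x) > 0.5) == (y == 1) for x, y in val_set) / len(val_set)
print(f"stopped after {epochs_run} epochs; val loss {val_loss:.3f}; val accuracy {accuracy:.2f}")
```

In a real deep learning framework, freezing is usually expressed by excluding the frozen layers' parameters from training (for example, by setting `requires_grad = False` on them in PyTorch), while the early-stopping check works on a held-out validation set just as it does here.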

Fine-tuning Related Words

- Categories/Topics: Machine learning, AI, optimization, training processes

- Word Families: Tuning, adjustment, refinement, calibration

Did you know?

Fine-tuning in AI became particularly popular with the advent of pre-trained deep learning models like BERT and GPT, which transformed how tasks like language translation and question answering are approached: instead of building a separate model for each task, developers could fine-tune one pre-trained model, making such solutions much cheaper to build and more accessible to non-expert users.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
