Pruning in Artificial Intelligence

Quick Navigation:

- Pruning Definition

- Pruning Explained Easy

- Pruning Origin

- Pruning Etymology

- Pruning Usage Trends

- Pruning Usage

- Pruning Examples in Context

- Pruning FAQ

- Pruning Related Words

Pruning Definition

Pruning in artificial intelligence is a technique used to streamline neural networks by removing redundant neurons, weights, or connections. This optimization process keeps essential components while discarding less relevant ones, ultimately improving computational efficiency and often reducing memory requirements. Pruning is especially valuable for deploying models on devices with limited resources, such as mobile phones or embedded systems.
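Below is a minimal sketch of one common form of this, unstructured magnitude pruning. It assumes a single layer is stored as a plain Python list of lists, and it treats "less relevant" as "smallest absolute value", which is only one of several possible importance criteria. The function name `magnitude_prune` and its `sparsity` parameter are hypothetical and not part of any library.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude.

    weights:  a layer's weight matrix as a list of rows (hypothetical layout)
    sparsity: fraction of all weights to prune, between 0 and 1
    Returns (pruned_weights, mask), where mask holds 1 for kept, 0 for pruned.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    # Rank every (row, col) position globally by absolute weight value.
    positions = [(i, j) for i, row in enumerate(weights) for j in range(len(row))]
    ranked = sorted(positions, key=lambda p: abs(weights[p[0]][p[1]]))
    n_prune = int(len(positions) * sparsity)
    pruned_set = set(ranked[:n_prune])
    # Build a binary mask, then keep only the weights the mask allows.
    mask = [[0 if (i, j) in pruned_set else 1 for j in range(len(row))]
            for i, row in enumerate(weights)]
    pruned = [[w if m else 0.0 for w, m in zip(row, mrow)]
              for row, mrow in zip(weights, mask)]
    return pruned, mask


if __name__ == "__main__":
    layer = [[0.8, -0.05, 0.3],
             [-0.01, 0.6, -0.4]]
    pruned, mask = magnitude_prune(layer, sparsity=0.5)
    print(pruned)  # [[0.8, 0.0, 0.0], [0.0, 0.6, -0.4]]
    print(mask)    # [[1, 0, 0], [0, 1, 1]]
```

Because this zeroes individual weights rather than deleting rows or columns, the layer keeps its shape; turning those zeros into real speed or memory savings typically requires sparse storage formats or hardware that can exploit them.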

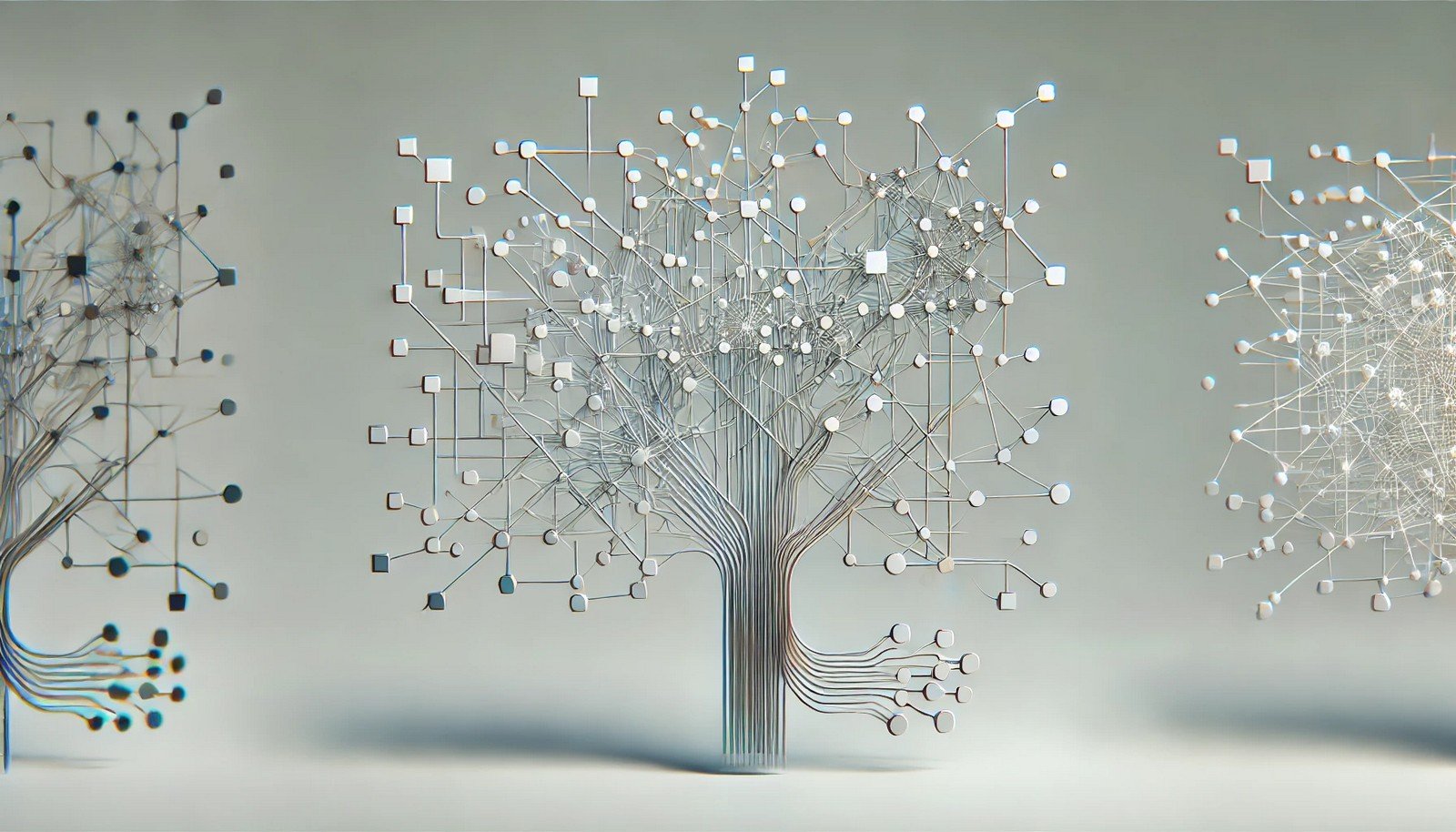

Pruning Explained Easy

Think of a tree with many branches. If there are too many branches, they may block sunlight or make the tree heavy. By trimming or “pruning” a few branches, the tree becomes healthier and grows better. In AI, pruning removes unnecessary parts of a model so it can work faster and more efficiently, just like trimming a tree.

Pruning Origin

The concept of pruning draws on classical optimization techniques and was adapted for neural networks as early as the late 1980s and early 1990s, with methods such as Optimal Brain Damage and Optimal Brain Surgeon. Interest grew as machine learning models became larger and more complex, and pruning research accelerated as researchers sought to deploy efficient models on smaller devices, such as smartphones.

Pruning Etymology

The term “pruning” in AI is derived from horticulture, where it refers to selectively cutting branches to improve plant health or appearance.

Pruning Usage Trends

In recent years, pruning has gained traction, especially with the rise of mobile and edge computing. By 2023, pruning had become widely used when deploying AI applications that require high efficiency, such as voice recognition on smartphones and image analysis on low-power IoT devices.

Pruning Usage

- Formal/Technical Tagging:

- Model Optimization

- Neural Network Simplification

- Computational Efficiency

- Typical Collocations:

- "model pruning technique"

- "neural network pruning"

- "pruning for deep learning"

- "pruned model for deployment"

Pruning Examples in Context

- A pruned neural network can classify images quickly on a smartphone without draining the battery.

- In self-driving cars, pruned models analyze road data in real time, reducing processing delays.

- By pruning low-importance connections, AI models in healthcare applications can provide rapid diagnostics on small devices.

Pruning FAQ

- What is pruning in AI?

Pruning is the process of removing unimportant parts of a neural network to improve its efficiency.

- Why is pruning important in AI?

It reduces the size and computational cost of AI models, making them suitable for deployment on devices with limited resources.

- How does pruning work?

Pruning algorithms estimate the importance of neurons, connections, or weights in a network (often by the magnitude of the weights) and remove the least important ones.

- What types of pruning exist?

Common types include weight (unstructured) pruning, which removes individual connections, and structured pruning, such as neuron pruning, which removes whole neurons, channels, or filters.

- Does pruning affect model accuracy?

Pruning may slightly reduce accuracy, but careful pruning minimizes this impact while significantly improving efficiency.

- Where is pruning commonly applied?

Pruning is often used in mobile applications, IoT devices, and other resource-constrained environments.

- What are the challenges in pruning?

The main challenges are deciding which components to prune and maintaining model accuracy afterward.

- How does pruning benefit mobile AI?

Pruned models require less processing power, making them faster and more battery-efficient.

- Is pruning used in all AI models?

Not always. Pruning is most useful for large models, where the efficiency gains outweigh the small loss in accuracy.

- Can pruning be reversed?

Pruned models can sometimes be fine-tuned or re-trained to regain accuracy, but the removed components are generally not restored.
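The FAQ answers above mention neuron pruning and structured pruning. The sketch below assumes a fully connected layer stored as plain lists (one row of incoming weights per neuron) and uses the L2 norm of a neuron's incoming weights as a stand-in importance score. It shows how removing a whole neuron also removes its bias and the matching column in the next layer. The function `prune_neurons` and the scoring rule are hypothetical illustrations, not a specific library's method.

```python
import math


def prune_neurons(w, b, w_next, n_remove):
    """Remove the n_remove least important neurons from a dense layer.

    w:      this layer's weights, one row of incoming weights per neuron
    b:      this layer's biases, one per neuron
    w_next: next layer's weights; column k reads from neuron k
    Returns the shrunken (w, b, w_next) and the indices of kept neurons.
    """
    if not 0 <= n_remove <= len(w):
        raise ValueError("n_remove must be between 0 and the number of neurons")
    # Hypothetical importance score: L2 norm of each neuron's incoming weights.
    scores = [math.sqrt(sum(x * x for x in row)) for row in w]
    ranked = sorted(range(len(w)), key=lambda k: scores[k])
    removed = set(ranked[:n_remove])
    keep = [k for k in range(len(w)) if k not in removed]
    # Drop the neuron's row and bias, plus the matching column downstream,
    # so both layers physically shrink instead of merely holding zeros.
    new_w = [list(w[k]) for k in keep]
    new_b = [b[k] for k in keep]
    new_w_next = [[row[k] for k in keep] for row in w_next]
    return new_w, new_b, new_w_next, keep


if __name__ == "__main__":
    w = [[0.9, -0.7], [0.01, 0.02], [0.5, 0.4]]  # 3 neurons, 2 inputs each
    b = [0.1, 0.0, -0.2]
    w_next = [[1.0, 2.0, 3.0]]                    # 1 output neuron reading all 3
    new_w, new_b, new_w_next, keep = prune_neurons(w, b, w_next, n_remove=1)
    print(keep)        # [0, 2]
    print(new_w_next)  # [[1.0, 3.0]]
```

Because entire rows and columns disappear, both weight matrices become smaller, which is why structured pruning can speed up inference on ordinary hardware. As noted above, the smaller network would then typically be fine-tuned to regain accuracy.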

Pruning Related Words

- Categories/Topics:

- Neural Network Optimization

- Deep Learning

- Edge AI

Did you know?

Pruning is a common technique in AI model optimization. Combined with other compression methods such as quantization and knowledge distillation, it helps compressed versions of language models like BERT and GPT run on smartphones. This allows devices with limited resources to benefit from powerful language models, enhancing on-device capabilities without cloud dependency.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
