Distributed File Systems


Distributed File Systems Definition

A Distributed File System (DFS) is a network-based storage system that lets multiple users and computers access, manage, and share files as if they were stored on a single local device. By distributing file storage across multiple nodes, and typically replicating data between them, a DFS is designed to provide high availability, fault tolerance, and scalability. Common implementations include Hadoop Distributed File System (HDFS), Network File System (NFS), and Google File System (GFS), each designed to support shared data storage and retrieval over a network.
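The idea of "many nodes, one namespace" in the definition above can be illustrated with a minimal Python sketch. Everything here is hypothetical and for illustration only: the `TinyDFS` class, the node names, and the hash-based placement rule are assumptions, not how HDFS, NFS, or GFS actually locate files.

```python
import hashlib


class TinyDFS:
    """Toy single-namespace file store spread over several in-memory 'nodes'."""

    def __init__(self, node_names):
        # Each node is a dict standing in for one machine's local disk.
        self.nodes = {name: {} for name in node_names}
        self._names = sorted(node_names)

    def _node_for(self, path):
        # A deterministic hash of the path picks the node that owns the file
        # (an assumed placement rule; real systems use richer metadata).
        digest = hashlib.sha256(path.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self._names)
        return self._names[index]

    def write(self, path, data):
        # The caller only names a path; the node choice is hidden.
        self.nodes[self._node_for(path)][path] = data

    def read(self, path):
        return self.nodes[self._node_for(path)][path]


dfs = TinyDFS(["node-a", "node-b", "node-c"])
dfs.write("/reports/q1.txt", b"first quarter")
dfs.write("/logs/app.log", b"started")
print(dfs.read("/reports/q1.txt"))  # b'first quarter'
```

The caller never says which node holds a file, which is the "as if on a single local device" property. This sketch keeps only one copy of each file, so it shows distribution but not fault tolerance.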

Distributed File Systems Explained Easy

Imagine you have a big notebook, but instead of keeping it in one place, you tear out the pages and store them in different rooms. Whenever you want to read or write something, you can go to any room, and a helper brings you the page you need. A distributed file system works the same way: it spreads files across many computers, but it still feels like everything is in one place!

Distributed File Systems Origin

The concept of distributed file systems emerged in the 1970s as computer networks grew. Early implementations like Sun Microsystems' NFS in the 1980s laid the groundwork for modern DFS solutions. With the rise of cloud computing and large-scale data centers, DFS has become essential for handling massive amounts of distributed data efficiently.

Distributed File Systems Etymology

The term "distributed file system" combines "distributed," meaning spread across multiple locations, and "file system," which refers to the method of organizing and storing data.

Distributed File Systems Usage Trends

In recent years, distributed file systems have gained prominence due to the explosion of data-driven applications, cloud storage, and big data analytics. Platforms like Apache Hadoop are built on a DFS, and cloud services like Google Cloud Storage apply the same distribution techniques to manage petabytes of data. Organizations in industries like finance, healthcare, and AI research use DFS for high-performance computing and secure data access.

Distributed File Systems Usage

- Formal/Technical Tagging:

- Cloud Storage

- Data Management

- Big Data Processing

- Distributed Computing

- Typical Collocations:

- "scalable distributed file system"

- "DFS architecture"

- "replication in distributed file systems"

- "distributed file storage solution"

Distributed File Systems Examples in Context

- Google uses a distributed file system to store and manage search index data across its massive infrastructure.

- A cloud storage provider like Amazon S3 relies on DFS principles to distribute files across multiple data centers for redundancy.

- Big data platforms like Hadoop employ HDFS to efficiently process large-scale datasets across clusters of machines.

Distributed File Systems FAQ

- What is a distributed file system?

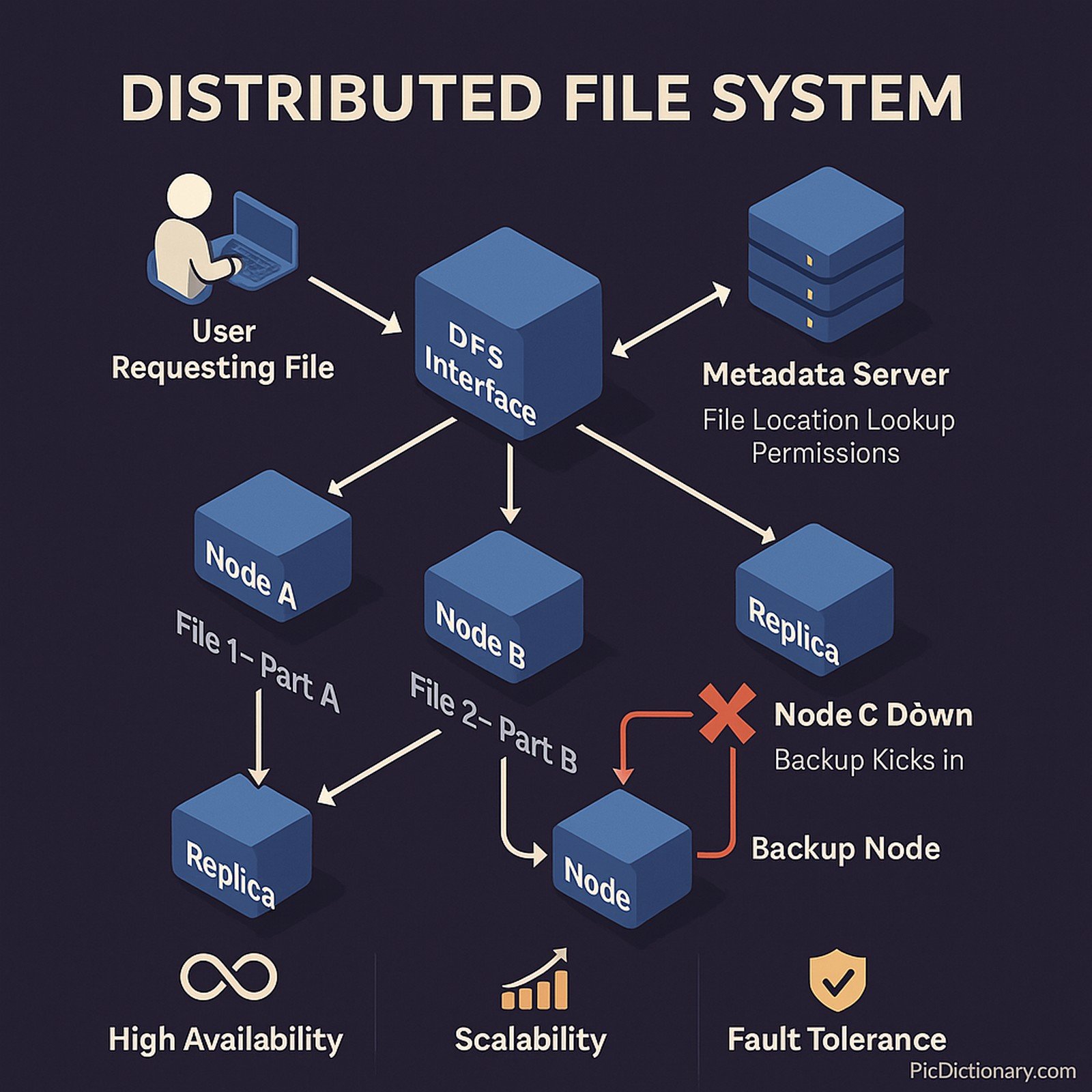

A DFS is a file storage system that allows files to be stored across multiple machines but accessed as if they are on a single system. - How does a distributed file system work?

DFS divides data into chunks, stores them on different nodes, and retrieves them through a coordinated system that ensures accessibility and fault tolerance. - What are some examples of distributed file systems?

Common examples include Hadoop Distributed File System (HDFS), Network File System (NFS), and Google File System (GFS). - Why is DFS important?

DFS ensures scalability, fault tolerance, and high availability, making it essential for modern cloud storage and data-intensive applications. - How does DFS improve fault tolerance?

By replicating data across multiple servers, DFS prevents data loss in case of hardware failures. - Is DFS used in cloud computing?

Yes, cloud providers like AWS, Google Cloud, and Microsoft Azure use DFS to manage large-scale storage and distributed computing. - What challenges does DFS face?

Challenges include data consistency, network latency, and managing distributed system failures efficiently. - How does DFS handle large data volumes?

DFS uses techniques like sharding, replication, and load balancing to efficiently manage and process massive amounts of data. - Can DFS be used for real-time applications?

Yes, many DFS implementations support low-latency data retrieval for real-time analytics and AI workloads. - What industries benefit from DFS?

Industries such as finance, healthcare, AI research, and e-commerce rely on DFS for secure, scalable, and high-performance data storage.
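The chunking and replication described in the answers above can be sketched in a few lines of Python. This is a toy model under stated assumptions: the function names, the 4-byte chunk size, the replication factor of 2, and the round-robin placement are all hypothetical choices for illustration and do not reproduce the behavior of any particular DFS.

```python
def split_into_chunks(data, chunk_size):
    # Cut the file into fixed-size pieces; the last piece may be shorter.
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def place_replicas(num_chunks, node_names, replication):
    # Round-robin placement (an assumed policy): chunk i is stored on
    # `replication` consecutive nodes, which also spreads load evenly.
    if replication > len(node_names):
        raise ValueError("replication factor exceeds number of nodes")
    return {
        i: [node_names[(i + r) % len(node_names)] for r in range(replication)]
        for i in range(num_chunks)
    }


def store(data, nodes, chunk_size=4, replication=2):
    chunks = split_into_chunks(data, chunk_size)
    placement = place_replicas(len(chunks), list(nodes), replication)
    for i, chunk in enumerate(chunks):
        for name in placement[i]:
            nodes[name][i] = chunk  # write one replica per chosen node
    return placement  # plays the role of the coordinator's metadata


def read(placement, nodes, failed=frozenset()):
    parts = []
    for i in sorted(placement):
        live = [name for name in placement[i] if name not in failed]
        if not live:
            raise IOError(f"chunk {i} has no live replica")
        parts.append(nodes[live[0]][i])  # any surviving replica will do
    return b"".join(parts)


nodes = {"n1": {}, "n2": {}, "n3": {}}
placement = store(b"hello distributed world", nodes)
recovered = read(placement, nodes, failed={"n2"})
print(recovered)  # b'hello distributed world'
```

With two copies of every chunk, the file survives the loss of any single node. In this layout, losing two nodes at once leaves some chunk without a live replica, so `read` raises an error. That is the trade-off a replication factor controls.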

Distributed File Systems Related Words

- Categories/Topics:

- Cloud Computing

- Big Data Storage

- High-Performance Computing

- Scalable Data Management

Did you know?

The Google File System (GFS) was designed to support Google's search engine, allowing it to process and store vast amounts of web data. This innovation later inspired the development of Hadoop Distributed File System (HDFS), which became a cornerstone of big data analytics.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

