Batch Normalization

Batch Normalization Definition

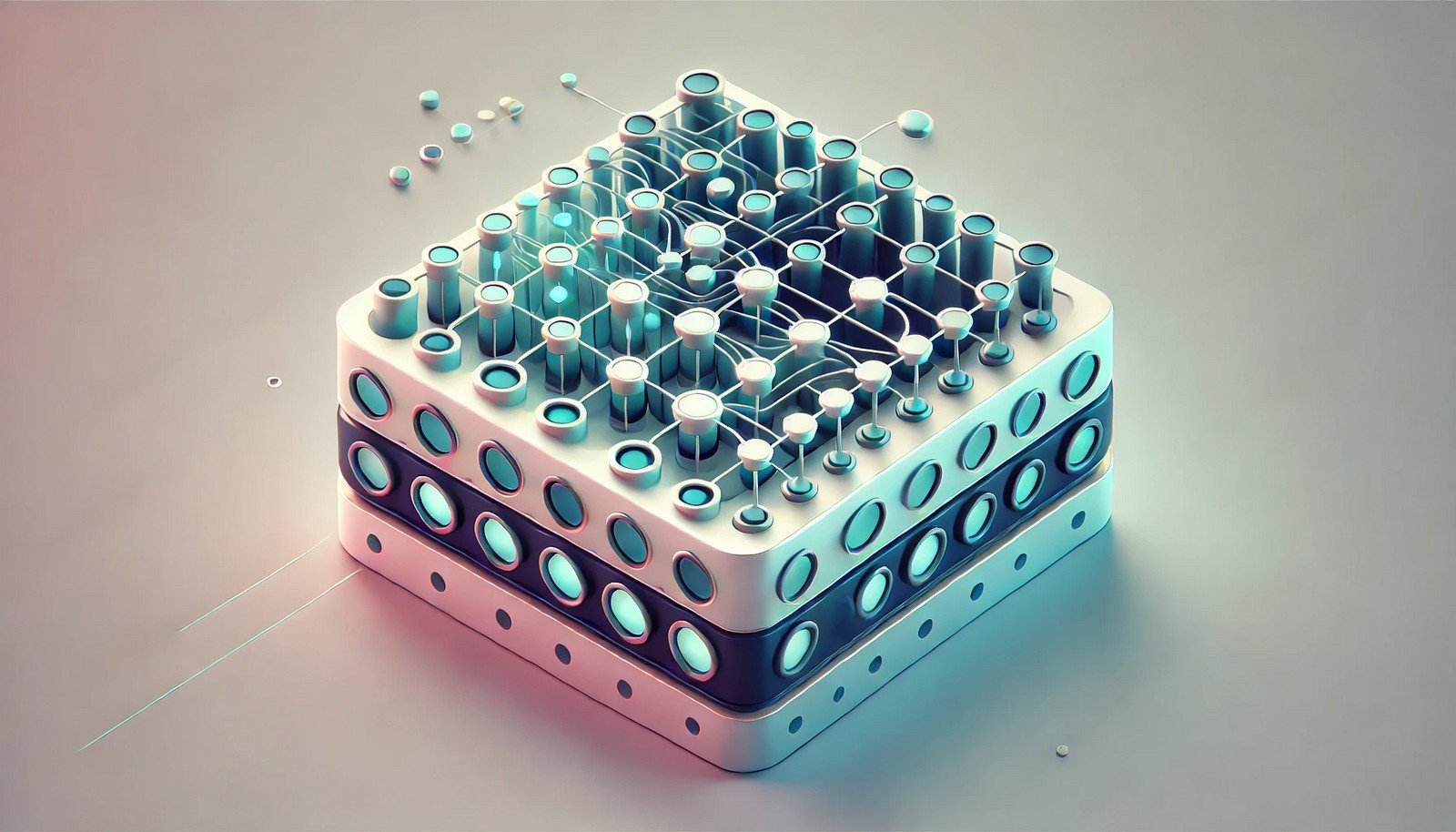

Batch Normalization is a deep learning technique that normalizes the inputs to a layer over each mini-batch to make training faster and more stable. It shifts and scales those inputs so their mean and variance stay consistent, which the original authors motivated as reducing internal covariate shift. By keeping activations in a well-behaved range, it lowers the risk of vanishing or exploding gradients and helps models converge faster. The original paper applies it before the activation function, although placing it after the activation is also seen in practice. It is a standard component of modern neural networks for image classification, language processing, and reinforcement learning.
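The definition above can be written compactly as a formula. The symbols are not part of the text above and are assumed here for illustration: a mini-batch of m values x_1, ..., x_m for one feature, a small constant ε for numerical stability, and a learnable scale γ and shift β. Read it as a sketch of the usual formulation rather than a full specification.

```latex
\mu_B = \frac{1}{m}\sum_{i=1}^{m} x_i, \qquad
\sigma_B^2 = \frac{1}{m}\sum_{i=1}^{m} (x_i - \mu_B)^2, \qquad
\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}, \qquad
y_i = \gamma\,\hat{x}_i + \beta
```

The first two terms are the mini-batch mean and variance, the third is the normalized value, and the last restores flexibility by letting the network learn its own scale and shift.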

Batch Normalization Explained Easy

Imagine you're running a race while carrying weights, and someone keeps adjusting them so you stay balanced. Batch Normalization does something similar in machine learning: it rebalances the inputs to each layer of the model so learning stays stable, helping the “race” finish faster without stumbling.

Batch Normalization Origin

Batch Normalization was introduced by Sergey Ioffe and Christian Szegedy in 2015 to address challenges in training deep neural networks. It quickly became a popular technique in deep learning due to its effectiveness in stabilizing the training process and improving model performance across a variety of tasks.

Batch Normalization Etymology

The term “Batch Normalization” refers to the process of normalizing data within each mini-batch, a smaller subset of the dataset used in training, to ensure consistent data distribution across learning cycles.
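To make "normalizing within each mini-batch" concrete, here is a minimal sketch in plain Python. It assumes a mini-batch of scalar activations for a single feature; the function name `batch_norm` and the parameters `gamma`, `beta`, and `eps` are illustrative choices, not taken from any particular library.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch (training-time behavior only).

    batch: list of floats, one activation per example in the mini-batch.
    gamma, beta: scale and shift; learnable in a real network, fixed here.
    eps: small constant that guards against division by zero.
    """
    n = len(batch)
    mean = sum(batch) / n
    # Biased (population) variance over the mini-batch
    var = sum((x - mean) ** 2 for x in batch) / n
    inv_std = 1.0 / math.sqrt(var + eps)
    return [gamma * (x - mean) * inv_std + beta for x in batch]

# Illustrative mini-batch of four activations
activations = [2.0, 4.0, 6.0, 8.0]
normalized = batch_norm(activations)
```

With the default `gamma` and `beta`, the output has a mean of roughly zero and a variance of roughly one, whatever the scale of the original activations.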

Batch Normalization Usage Trends

With the rapid growth of deep learning, Batch Normalization has become a standard technique, especially in convolutional neural networks for tasks like image recognition, and it is also used in language models. As models grow deeper and more complex, it remains valuable for reducing the number of training steps needed to converge and for keeping training robust. It is built into frameworks like TensorFlow and PyTorch and has shaped how neural networks are designed and optimized.

Batch Normalization Usage

- Formal/Technical Tagging: Deep Learning, Machine Learning, Neural Networks, Optimization

- Typical Collocations: “batch normalization layer,” “stabilize training with batch normalization,” “normalize inputs in neural networks”

Batch Normalization Examples in Context

- Batch Normalization is often used in image recognition models, allowing them to learn faster and more accurately.

- Applying Batch Normalization in text processing networks helps stabilize training by managing fluctuating data inputs.

- Reinforcement learning models that use Batch Normalization can handle complex tasks like gaming AI with enhanced stability.

Batch Normalization FAQ

- What is Batch Normalization?

Batch Normalization is a technique that normalizes the inputs to layers in a neural network to improve training speed and stability.

- Why is Batch Normalization important in deep learning?

It mitigates training issues such as vanishing or exploding gradients, which speeds up convergence.

- How does Batch Normalization work?

It normalizes layer inputs within each mini-batch so that their mean and variance stay consistent, then applies a learnable scale and shift.

- Where is Batch Normalization applied in neural networks?

The original paper places it before the activation function, though placing it after the activation is also used in practice. Either way, it keeps the inputs to each subsequent layer stable.

- What challenges does Batch Normalization solve?

It addresses internal covariate shift, a problem where the distribution of each layer's inputs changes during training.

- Is Batch Normalization used only in image processing?

No, it is also effective in text and language models, reinforcement learning, and more.

- Does Batch Normalization always improve model accuracy?

While it often does, its main benefits are training stability and speed rather than accuracy alone.

- Can Batch Normalization be used with dropout?

Yes, it can be combined with dropout to improve regularization in neural networks.

- What is internal covariate shift?

It is the change in the distribution of a layer's inputs as earlier layers update during training, which Batch Normalization helps to control.

- Which libraries support Batch Normalization?

Major libraries like TensorFlow, Keras, and PyTorch include Batch Normalization implementations.
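On the question of where the layer sits: the original paper normalizes the output of a linear layer before the nonlinearity, and other orderings are also used in practice. The sketch below illustrates that "linear, then Batch Normalization, then ReLU" ordering in plain Python. The helpers `linear`, `batch_norm_columns`, and `relu`, along with the weights and data, are hypothetical and exist only for this example. The learnable scale and shift are left out to keep it short.

```python
import math

def linear(rows, weights, bias):
    """Hypothetical dense layer: rows is n x d_in, weights is d_in x d_out."""
    return [
        [sum(x * w for x, w in zip(row, col)) + b
         for col, b in zip(zip(*weights), bias)]
        for row in rows
    ]

def batch_norm_columns(rows, eps=1e-5):
    """Normalize each feature (column) across the mini-batch (rows)."""
    n = len(rows)
    normalized_columns = []
    for col in zip(*rows):
        mean = sum(col) / n
        var = sum((x - mean) ** 2 for x in col) / n
        normalized_columns.append(
            [(x - mean) / math.sqrt(var + eps) for x in col]
        )
    # Transpose back to one row per example
    return [list(row) for row in zip(*normalized_columns)]

def relu(rows):
    return [[max(0.0, x) for x in row] for row in rows]

# Illustrative mini-batch of three examples with two features each
batch = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
weights = [[0.5, -1.0], [0.25, 2.0]]
bias = [0.0, 1.0]

pre_activation = linear(batch, weights, bias)
hidden = relu(batch_norm_columns(pre_activation))
```

Because each feature is centered before the ReLU, examples below the batch mean for a feature come out as zero and those above it stay positive. In practice, the Batch Normalization layers in the libraries named above would replace these hand-written helpers.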

Batch Normalization Related Words

- Categories/Topics: Deep Learning, Neural Networks, Model Training, Optimization Techniques

Did you know? Batch Normalization significantly reduced training time for models in image classification challenges like ImageNet. In competitive AI tasks, this technique enabled faster convergence, paving the way for increasingly complex architectures like ResNet, which achieved top performance in benchmarks.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
