Forward Propagation

Quick Navigation:

- Forward Propagation Definition

- Forward Propagation Explained Easy

- Forward Propagation Origin

- Forward Propagation Etymology

- Forward Propagation Usage Trends

- Forward Propagation Usage

- Forward Propagation Examples in Context

- Forward Propagation FAQ

- Forward Propagation Related Words

Forward Propagation Definition

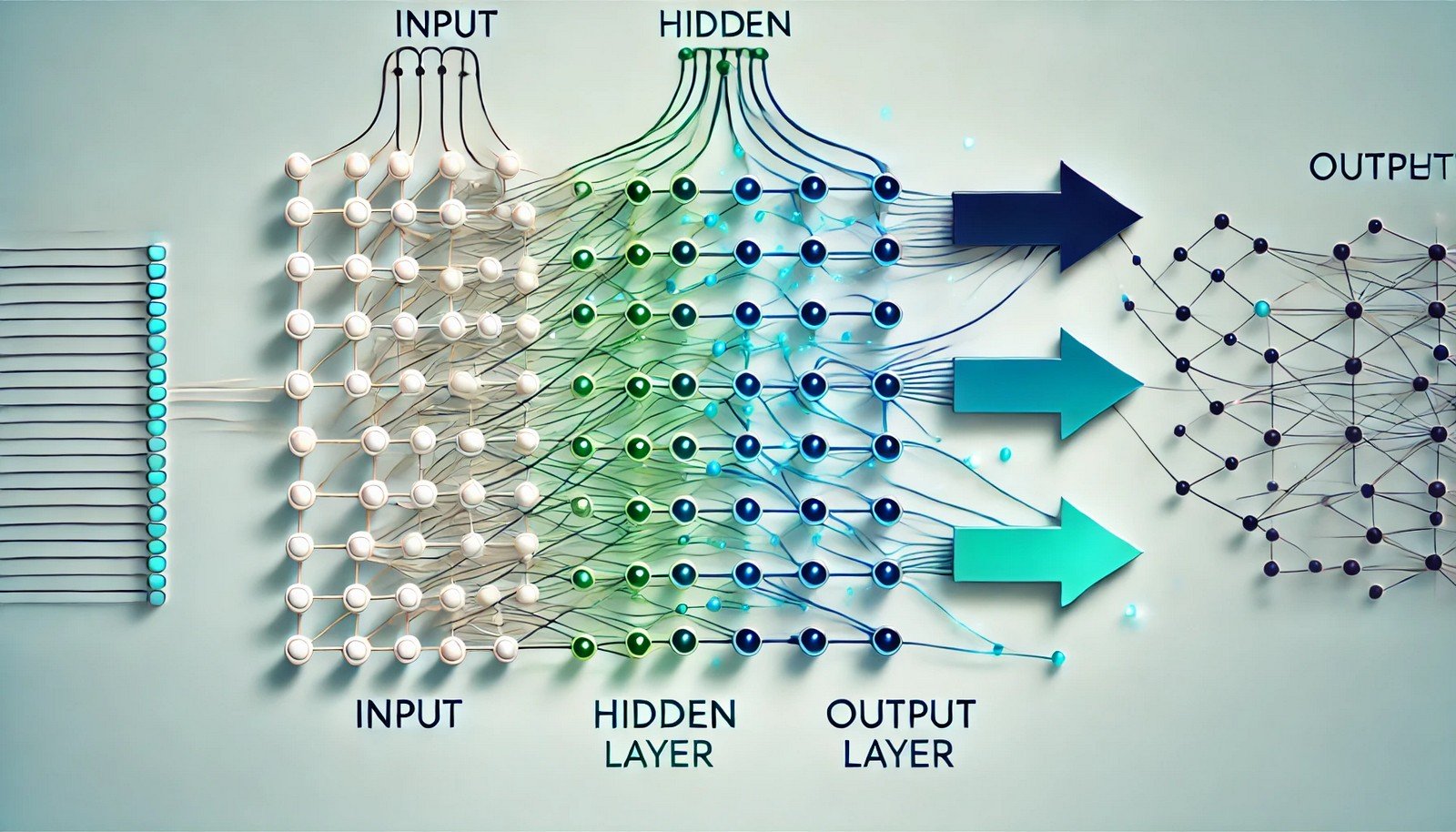

Forward propagation is a computational process used in artificial neural networks in which inputs are passed through successive layers of neurons to produce an output. This output can be a prediction or a classification, depending on the model's design. Each neuron computes a weighted sum of its inputs, adds a bias term, and passes the result through an activation function such as ReLU (Rectified Linear Unit) or sigmoid. The calculations proceed layer by layer, from the input layer through any hidden layers to the output layer. Forward propagation does not adjust weights; it only feeds data forward to generate predictions from the current model parameters.
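The layer-by-layer computation described in the definition can be sketched in plain Python. This is a minimal illustration rather than a production implementation. The network shape (2 inputs, 3 hidden ReLU neurons, 1 sigmoid output), the weight and bias values, and the helper names `dense_layer` and `forward` are all hypothetical choices made for this example.

```python
import math

def relu(z: float) -> float:
    """Rectified Linear Unit: keeps positive values, turns negatives into 0."""
    return max(0.0, z)

def sigmoid(z: float) -> float:
    """Squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases, activation):
    """Compute one fully connected layer.

    weights[j] holds the incoming weights of neuron j. Each neuron outputs
    activation(weighted sum of inputs + bias).
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

def forward(x, params):
    """Feed x through every layer in order. No weights are modified."""
    activations = x
    for weights, biases, activation in params:
        activations = dense_layer(activations, weights, biases, activation)
    return activations

# Hypothetical parameters for a 2 -> 3 -> 1 network.
params = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1], relu),
    ([[1.0, -1.0, 0.5]], [0.2], sigmoid),
]

prediction = forward([1.0, 2.0], params)
print(prediction)  # a one-element list, roughly [0.475]
```

The hidden layer produces about [0.1, 1.0, 1.2], and the output neuron turns that into sigmoid(-0.1), a value just under 0.5. Running `forward` again with the same inputs gives the same result, because forward propagation only reads the parameters.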

Forward Propagation Explained Easy

Imagine forward propagation as moving through a series of connected rooms in a maze where you can only travel in one direction: forward. You start by handing some initial clues (the inputs) to the first room. Each room has switches (the weights) that decide how the clues are passed on to the next room. The clues move from room to room (layer to layer) until they reach the last room, where they light up an answer. The switches are already set before you begin; forward propagation only shows you what answer their current positions produce.

Forward Propagation Origin

Forward propagation comes from the field of artificial intelligence, specifically from neural networks within machine learning and deep learning. It has roots in the perceptron and other early neural network models of the 1950s and 1960s, which passed data through neuron-like units in a single direction.

Forward Propagation Etymology

The term combines "forward," indicating a directional flow, with "propagation," meaning the process of spreading or transmitting. Together, it describes the unidirectional movement of data through a neural network model.

Forward Propagation Usage Trends

The term forward propagation gained wider use alongside the rise of deep learning in the 2010s. As interest in artificial neural networks grew, particularly for image recognition, natural language processing, and predictive analytics, forward propagation became a key concept. It remains foundational for understanding how neural networks turn input data into outputs.

Forward Propagation Usage

- Formal/Technical Tagging: Computational model, machine learning, neural networks, deep learning

- Typical Collocations: forward propagation process, neural network forward pass, activation functions in forward propagation, data flow in forward propagation

Forward Propagation Examples in Context

- "In training a neural network, forward propagation helps compute the predicted output based on the current weights before backpropagation adjusts them."

- "The predictions produced by forward propagation determine how well a machine learning model performs on new data."

- "When forward propagation is used with a softmax function, it can generate probabilities for classification tasks in neural networks."
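The softmax usage in the last example can be illustrated with a short sketch: the output layer's raw scores (logits) are converted into probabilities that are non-negative and sum to 1. The logit values and the three class labels below are invented for illustration.

```python
import math

def softmax(logits):
    """Convert raw output scores into a probability distribution.

    Subtracting the largest logit before exponentiating leaves the result
    unchanged but prevents overflow when scores are large.
    """
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits produced by a forward pass for three classes.
class_names = ["cat", "dog", "bird"]
logits = [2.0, 1.0, 0.1]

probs = softmax(logits)
for name, p in zip(class_names, probs):
    print(f"{name}: {p:.3f}")

# The predicted class is the one with the highest probability.
predicted = class_names[probs.index(max(probs))]
print("predicted class:", predicted)  # cat
```

Softmax preserves the ordering of the logits, so the class with the largest raw score always receives the largest probability.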

Forward Propagation FAQ

- What is forward propagation?

Forward propagation is the process of passing inputs through a neural network, layer by layer, to compute an output.

- Is forward propagation the same as backpropagation?

No. Forward propagation moves data through the network to produce an output, while backpropagation computes the gradients used to adjust the weights.

- Why is forward propagation important?

It lets a neural network generate outputs from its current weights. These outputs are the predictions at inference time and the starting point for computing the loss during training.

- Does forward propagation require labeled data?

No. It only processes inputs using the current weights. Labels are needed during training, when the forward-pass output is compared with the target to compute a loss.

- How does forward propagation relate to prediction?

It is the process that generates predictions from input data in a neural network.

- What's the difference between forward propagation and inference?

Forward propagation is the core step within inference. Inference means using a trained model to make predictions on new inputs, which amounts to running a forward pass; forward propagation itself also runs during training.

- Can forward propagation occur without an activation function?

Yes, but without non-linear activation functions any stack of layers reduces to a single linear transformation. Activation functions introduce the non-linearity that lets a network model complex patterns.

- Does forward propagation affect model accuracy?

Yes. It computes the model's output, which during training is compared to the target to calculate the loss that guides weight updates.

- What role do weights play in forward propagation?

Weights determine how strongly each input influences a neuron's output, and therefore shape the final predictions.

- Is forward propagation computationally expensive?

It depends on the model size, but a forward pass is generally less expensive than backpropagation, which must also compute a gradient for every weight.
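The FAQ point about activation functions can be checked numerically. The sketch below, which uses arbitrary hypothetical weights and plain Python lists, runs a forward pass through two layers that have no activation function. It then builds a single equivalent layer with weights W = W2·W1 and bias b = W2·b1 + b2, and shows that both produce the same output, so the extra layer adds no expressive power.

```python
def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matmul(A, B):
    """Multiply matrix A by matrix B (both lists of rows)."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def linear(W, b, x):
    """A layer with no activation function: W x + b."""
    return [z + bi for z, bi in zip(matvec(W, x), b)]

# Hypothetical weights: 2 inputs -> 3 hidden -> 2 outputs, no activations.
W1 = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0]]
b1 = [0.1, 0.2, -0.3]
W2 = [[1.0, 0.0, -2.0], [0.5, 1.5, 1.0]]
b2 = [0.0, 1.0]

x = [2.0, -1.0]

# Two-layer forward pass without activation functions.
two_layer = linear(W2, b2, linear(W1, b1, x))

# Single equivalent layer: W = W2 W1, b = W2 b1 + b2.
W = matmul(W2, W1)
b = [z + bi for z, bi in zip(matvec(W2, b1), b2)]
one_layer = linear(W, b, x)

print(two_layer, one_layer)  # both about [-11.3, 10.05], up to rounding
```

Inserting a non-linear function such as ReLU between the two layers breaks this equivalence, which is why activation functions are needed for deeper networks to be more expressive than a single layer.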

Forward Propagation Related Words

- Categories/Topics: Neural networks, machine learning, AI algorithms, data processing

- Word Families: propagate, propagation, forward

Did you know?

Every AI application built on neural networks, from speech recognition to recommendation systems, relies on forward propagation to produce its outputs. Self-driving cars, for example, use forward propagation to interpret sensor data and make split-second decisions based on the network's current predictions.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun, handles image and video editing. Together, we run a YouTube channel focused on reviewing gadgets and explaining technology.
