Expectation-Maximization (EM)

Quick Navigation:

- Expectation-Maximization Definition

- Expectation-Maximization Explained Easy

- Expectation-Maximization Origin

- Expectation-Maximization Etymology

- Expectation-Maximization Usage Trends

- Expectation-Maximization Usage

- Expectation-Maximization Examples in Context

- Expectation-Maximization FAQ

- Expectation-Maximization Related Words

Expectation-Maximization Definition

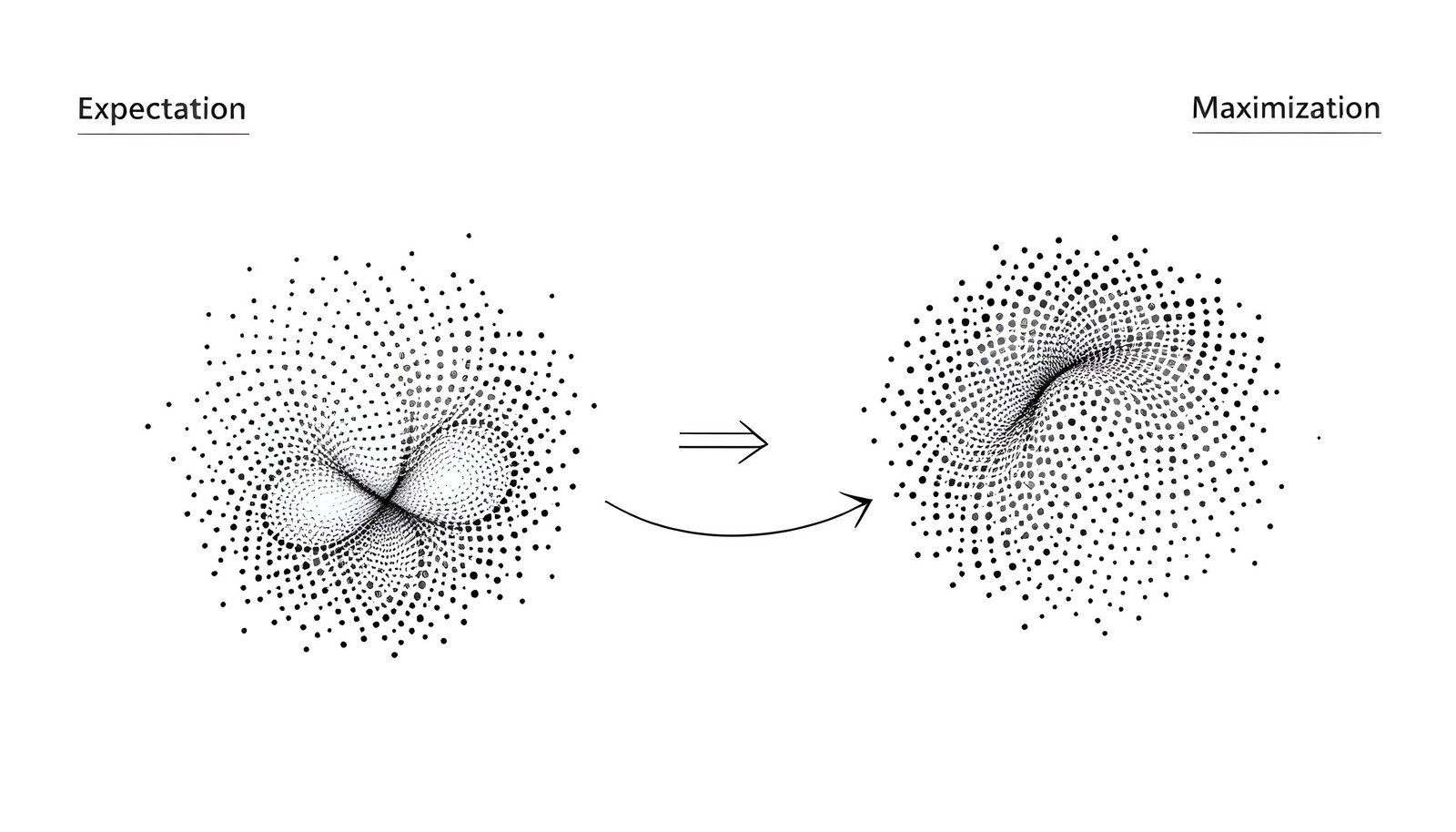

The Expectation-Maximization (EM) algorithm is an iterative method used in statistical computation to find maximum likelihood estimates of model parameters. It is designed for cases where the data are incomplete or the model involves hidden (latent) variables. It alternates between an expectation step, which uses the current parameter estimates to assign probabilities to the possible values of the missing or hidden data, and a maximization step, which re-estimates the parameters using those probabilities. It is widely used in machine learning applications such as clustering and latent variable modeling, because it turns a hard estimation problem with hidden data into a sequence of simpler ones.
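To make the two alternating steps concrete, here is a minimal sketch in Python (standard library only) that fits a one-dimensional mixture of Gaussians, the textbook clustering use of EM. The function names, the variance floor, the stopping tolerance and the toy data are all illustrative assumptions, not part of any particular library.

```python
import math

def normal_pdf(x, mean, var):
    """Density of a univariate normal distribution N(mean, var) at x."""
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)

def log_likelihood(data, means, variances, weights):
    """Observed-data log-likelihood of a 1-D Gaussian mixture."""
    return sum(
        math.log(sum(w * normal_pdf(x, m, v) for m, v, w in zip(means, variances, weights)))
        for x in data
    )

def em_gmm_1d(data, means, variances, weights, n_iter=200, tol=1e-8):
    """Fit a 1-D Gaussian mixture by EM, starting from the given initial guesses."""
    means, variances, weights = list(means), list(variances), list(weights)
    k = len(means)
    prev_ll = log_likelihood(data, means, variances, weights)
    ll = prev_ll
    for _ in range(n_iter):
        # E-step: responsibilities r[i][j] = P(component j | x_i, current parameters)
        resp = []
        for x in data:
            joint = [weights[j] * normal_pdf(x, means[j], variances[j]) for j in range(k)]
            total = sum(joint)
            resp.append([p / total for p in joint])
        # M-step: weighted re-estimates of each component's weight, mean and variance
        for j in range(k):
            nj = sum(r[j] for r in resp)
            weights[j] = nj / len(data)
            means[j] = sum(r[j] * x for r, x in zip(resp, data)) / nj
            var_j = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, data)) / nj
            variances[j] = max(var_j, 1e-6)  # assumed floor: guards against a collapsing component
        ll = log_likelihood(data, means, variances, weights)
        if ll - prev_ll < tol:  # stop once the likelihood stops improving
            break
        prev_ll = ll
    return means, variances, weights, ll

# Toy data: two well-separated groups (illustrative values only)
data = [-2.1, -1.9, -2.3, -1.7, -2.0, 3.0, 3.2, 2.8, 3.1, 2.9]
means, variances, weights, ll = em_gmm_1d(data, [-1.0, 1.0], [1.0, 1.0], [0.5, 0.5])
print([round(m, 2) for m in means], [round(w, 2) for w in weights])
```

The responsibilities are "soft" cluster assignments: each point contributes to every component in proportion to its probability of belonging there, which is what distinguishes EM on a mixture model from hard-assignment methods such as k-means.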

Expectation-Maximization Explained Easy

Imagine you’re putting together a puzzle but some pieces are missing. First, you guess where the missing pieces could fit, then adjust the other pieces around your guess. The EM algorithm does something similar: it makes a guess about the missing parts, then tweaks its answer based on how everything fits, repeating until the picture stops improving.

Expectation-Maximization Origin

The algorithm was first formalized by Arthur Dempster, Nan Laird, and Donald Rubin in a seminal 1977 paper. Their work established EM as a general tool for parameter estimation, though its roots trace back to earlier statistical methods dealing with incomplete data.

Expectation-Maximization Etymology

The term comes from its two main steps: "Expectation," in which the algorithm computes the expected log-likelihood over the missing or hidden data given the current parameters, and "Maximization," in which it chooses the parameters that maximize that expectation.
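In the usual formal statement (notation chosen here for illustration), with observed data X, hidden variables Z, parameters θ and iteration counter t, the two steps are:

```latex
\begin{aligned}
\text{E-step:}\quad & Q\!\left(\theta \mid \theta^{(t)}\right) = \mathbb{E}_{Z \sim p(Z \mid X,\, \theta^{(t)})}\!\left[\log p(X, Z \mid \theta)\right] \\
\text{M-step:}\quad & \theta^{(t+1)} = \arg\max_{\theta}\, Q\!\left(\theta \mid \theta^{(t)}\right)
\end{aligned}
```

A standard consequence of this construction is that the observed-data log-likelihood never decreases from one iteration to the next: log p(X | θ^(t+1)) ≥ log p(X | θ^(t)).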

Expectation-Maximization Usage Trends

The use of EM has grown significantly, particularly in data science and machine learning, due to its flexibility in handling incomplete data and hidden structure. EM is now a standard tool in clustering, speech recognition, and natural language processing, where it is used to train models whose key variables, such as cluster memberships or the hidden states of a hidden Markov model, are never directly observed.

Expectation-Maximization Usage

- Formal/Technical Tagging:

- Machine Learning

- Statistical Computation

- Latent Variable Modeling

- Typical Collocations:

- "EM algorithm optimization"

- "Expectation-Maximization steps"

- "hidden variables in EM"

- "parameter estimation using EM"

Expectation-Maximization Examples in Context

- In genetics, the EM algorithm helps infer the most probable genetic sequences when some parts of the sequence are unknown.

- Speech recognition systems built on hidden Markov models use a form of EM (the Baum-Welch algorithm) to train their acoustic models, because the sequence of hidden states behind each audio sample is never observed directly.

- In customer segmentation, EM can handle incomplete user data, grouping users by likely characteristics even when information is missing.
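As a concrete genetics illustration, the sketch below applies EM to a classic textbook problem, chosen here as an assumption rather than taken from the examples above: estimating ABO blood-group allele frequencies from phenotype counts. A person with blood type A may carry genotype AA or AO (and likewise BB or BO for type B), so the genotypes are the hidden data. The counts are hypothetical, and Hardy-Weinberg genotype proportions are assumed.

```python
def abo_em(n_a, n_b, n_ab, n_o, n_iter=200, tol=1e-10):
    """Estimate allele frequencies (p, q, r) for alleles A, B, O from phenotype counts.

    Assumes Hardy-Weinberg proportions: AA=p^2, AO=2pr, BB=q^2, BO=2qr, AB=2pq, OO=r^2.
    """
    n = n_a + n_b + n_ab + n_o
    p, q, r = 1 / 3, 1 / 3, 1 / 3  # uniform starting guess
    for _ in range(n_iter):
        # E-step: split each ambiguous phenotype into expected genotype counts
        n_aa = n_a * p * p / (p * p + 2 * p * r)
        n_ao = n_a - n_aa
        n_bb = n_b * q * q / (q * q + 2 * q * r)
        n_bo = n_b - n_bb
        # M-step: count alleles in the "completed" data (two alleles per person)
        new_p = (2 * n_aa + n_ao + n_ab) / (2 * n)
        new_q = (2 * n_bb + n_bo + n_ab) / (2 * n)
        new_r = (2 * n_o + n_ao + n_bo) / (2 * n)
        converged = max(abs(new_p - p), abs(new_q - q), abs(new_r - r)) < tol
        p, q, r = new_p, new_q, new_r
        if converged:
            break
    return p, q, r

# Hypothetical phenotype counts for 500 people (illustrative, not real survey data)
p, q, r = abo_em(n_a=180, n_b=40, n_ab=15, n_o=265)
print(f"p(A)={p:.3f}  q(B)={q:.3f}  r(O)={r:.3f}")
```

Each pass fills in the unobserved genotype split using the current allele frequencies and then recounts alleles, which is exactly the "guess the missing parts, then refit" loop described in the definition.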

Expectation-Maximization FAQ

- What is the Expectation-Maximization algorithm?

The EM algorithm is a statistical method for finding maximum likelihood parameter estimates in models with missing or hidden data.

- How does the EM algorithm work?

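It alternates between estimating the hidden data given the current parameters (expectation) and updating the parameters given those estimates (maximization), so that the likelihood of the observed data never decreases from one iteration to the next.

- What fields use EM?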

EM is common in machine learning, genomics, speech recognition, and natural language processing.

- Why is EM important for incomplete data?

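Maximizing the likelihood directly is often intractable when data are missing, because it requires summing or integrating over every possible value of the unobserved variables. EM breaks the problem into a sequence of easier steps, each of which works with a "completed" version of the data.

- What is the role of the expectation step?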

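The expectation step computes the probability distribution of the missing or hidden values given the observed data and the current parameter estimates, filling in the gaps with weighted guesses rather than single values.

- What happens in the maximization step?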

During maximization, the algorithm re-estimates the model parameters as if the data were complete, weighting each possible value of the hidden data by the probability assigned to it in the expectation step.

- Is the EM algorithm guaranteed to work?

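Each iteration is guaranteed not to decrease the likelihood, and under mild conditions the algorithm converges. However, it may settle on a local maximum (or even a saddle point) rather than the global best solution, so the result depends on the initial parameter values, and it is common to run EM from several starting points.

- How is EM used in clustering?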

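In models such as Gaussian mixtures, EM gives each data point a probability of belonging to each cluster (a "soft" assignment) and then updates each cluster's parameters using those probabilities, refining the clusters iteratively.

- What is a hidden variable?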

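A hidden (latent) variable is a quantity that is not observed but influences the observed data, such as the cluster a data point came from. EM infers a probability distribution over its values while estimating the model parameters.

- Can EM handle big datasets?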

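Yes, but each iteration makes a full pass over the data, which can be computationally intensive; for very large datasets, incremental or online variants that update the parameters from subsets of the data are often used.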
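The convergence behaviour discussed in this FAQ can be seen in a small experiment. The sketch below fits a hypothetical two-coin mixture (equal mixing weights, illustrative head counts from five sets of 10 tosses; all of these are assumptions) and records the log-likelihood at every iteration. Starting from two different biases, the log-likelihood climbs steadily to a fitted pair; starting with both coins identical, the updates never break the symmetry and EM settles on a worse stationary point, which is why initialization matters.

```python
import math

def log_binom(heads, n, theta):
    """Log-probability of `heads` in `n` tosses with bias theta (binomial coefficient
    dropped, since it does not depend on theta)."""
    return heads * math.log(theta) + (n - heads) * math.log(1 - theta)

def em_two_coins(heads, n, theta_a, theta_b, n_iter=50):
    """EM for an equal-weight mixture of two biased coins.

    Returns the final biases and the log-likelihood recorded at each E-step.
    """
    trace = []
    for _ in range(n_iter):
        # E-step: posterior probability that each set of tosses came from coin A
        resp, ll = [], 0.0
        for h in heads:
            la = math.log(0.5) + log_binom(h, n, theta_a)
            lb = math.log(0.5) + log_binom(h, n, theta_b)
            m = max(la, lb)
            ll += m + math.log(math.exp(la - m) + math.exp(lb - m))  # log-sum-exp
            resp.append(1.0 / (1.0 + math.exp(lb - la)))  # exactly 0.5 when la == lb
        trace.append(ll)
        # M-step: each coin's bias is its weighted fraction of heads
        w_a = sum(resp)
        w_b = len(heads) - w_a
        theta_a = sum(r * h for r, h in zip(resp, heads)) / (w_a * n)
        theta_b = sum((1 - r) * h for r, h in zip(resp, heads)) / (w_b * n)
    return theta_a, theta_b, trace

heads = [5, 9, 8, 4, 7]  # illustrative head counts, 10 tosses per set
a1, b1, trace1 = em_two_coins(heads, 10, 0.6, 0.5)  # asymmetric start
a2, b2, trace2 = em_two_coins(heads, 10, 0.6, 0.6)  # symmetric start: coins stay identical
print(round(a1, 2), round(b1, 2), round(trace1[-1], 3))
print(round(a2, 2), round(b2, 2), round(trace2[-1], 3))
```

Running EM from several different starting values and keeping the fit with the highest log-likelihood is a simple, common way to reduce this risk.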

Expectation-Maximization Related Words

- Categories/Topics:

- Machine Learning

- Clustering

- Maximum Likelihood Estimation

Did you know?

Expectation-Maximization has been used in facial recognition research to train probabilistic models, which must cope with images that are incomplete or partially obscured. By estimating what is not directly observed, EM-based models can help improve accuracy and reliability in real-world applications like surveillance and security.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
