Vanishing Gradient

Quick Navigation:

- Vanishing Gradient Definition

- Vanishing Gradient Explained Easy

- Vanishing Gradient Origin

- Vanishing Gradient Etymology

- Vanishing Gradient Usage Trends

- Vanishing Gradient Usage

- Vanishing Gradient Examples in Context

- Vanishing Gradient FAQ

- Vanishing Gradient Related Words

Vanishing Gradient Definition

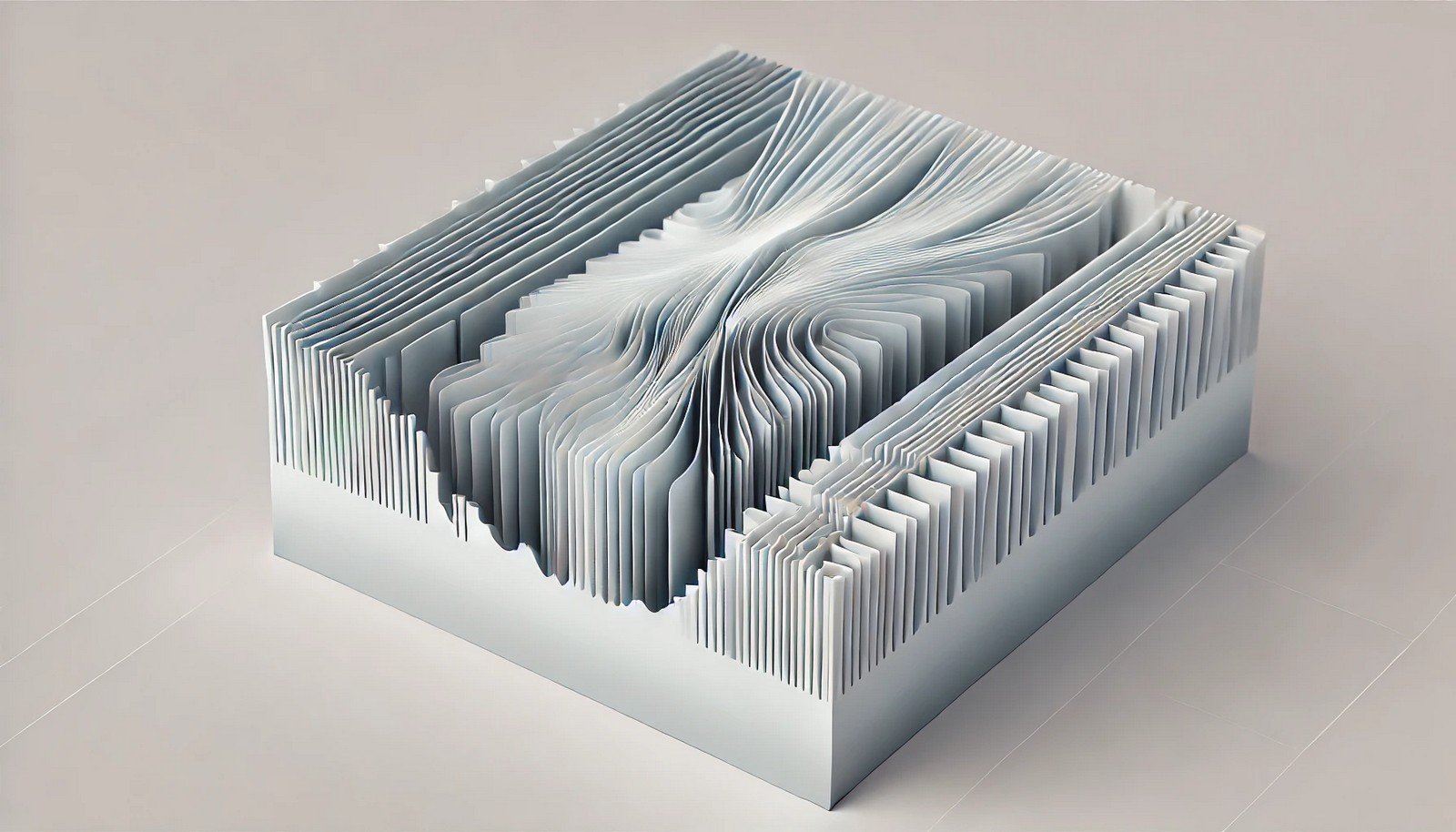

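The vanishing gradient problem occurs when training deep neural networks: the gradients of the loss function with respect to the network's weights become progressively smaller in layers closer to the input. Backpropagation computes each layer's gradient with the chain rule, multiplying together the local derivatives of every layer between that layer and the output. When those factors are mostly smaller than 1, the product shrinks roughly exponentially with depth and can approach zero, so the early layers barely update and learning stalls. The issue particularly affects deep feedforward networks and recurrent neural networks (where each time step acts like an extra layer), especially when they use saturating activation functions such as sigmoid and tanh, whose derivatives are at most 0.25 and 1 respectively and approach zero for large inputs.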
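To make the chain-rule argument concrete, here is a minimal sketch under deliberately simple assumptions: a toy network with one sigmoid unit per layer, every weight set to 1, and every pre-activation fixed at 0, which is where the sigmoid's derivative peaks at 0.25. The helper name `backprop_factor` is hypothetical, chosen only for this illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_derivative(z: float) -> float:
    s = sigmoid(z)
    return s * (1.0 - s)  # peaks at 0.25 when z == 0


def backprop_factor(depth: int, z: float = 0.0, weight: float = 1.0) -> float:
    """Chain-rule product of the per-layer factors weight * sigmoid'(z) over `depth` layers.

    Toy assumption: every layer has the same weight and the same pre-activation z.
    """
    factor = 1.0
    for _ in range(depth):
        factor *= weight * sigmoid_derivative(z)
    return factor


for depth in (1, 5, 10, 20):
    print(f"{depth:>2} layers: {backprop_factor(depth):.3e}")
```

Under these assumptions the factor shrinks by 4x per layer, so after 10 layers it is 0.25^10, roughly 9.5e-7, even in the sigmoid's best case. Real networks have varied weights and inputs, so actual numbers differ, but the multiplicative shrinkage is the same mechanism.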

Vanishing Gradient Explained Easy

Imagine trying to pass water through a long, thin straw. The water trickles down so slowly it almost stops, just like the learning in a neural network when the gradient becomes too small. Without enough "flow" or change in learning, the model struggles to get better. The vanishing gradient is this slowing down, and it makes teaching AI tough!

Vanishing Gradient Origin

The vanishing gradient problem was recognized in the 1980s and 1990s, as researchers found it difficult to train networks with many layers or long recurrent connections. Sepp Hochreiter analyzed it in his 1991 diploma thesis, and Bengio, Simard, and Frasconi described it for recurrent networks in 1994. This work showed that as more layers (or time steps) were added, the gradients reaching the early layers diminished, making the networks hard to optimize.

Vanishing Gradient Etymology

The term "Vanishing Gradient" reflects the disappearance, or "vanishing," of gradient values during the training of deep neural networks.

Vanishing Gradient Usage Trends

Awareness of the vanishing gradient problem grew as deep learning became popular. Researchers have developed several strategies to mitigate it, such as ReLU activation functions, careful weight initialization, batch normalization, residual connections, and gated recurrent architectures such as LSTMs. The problem remains a design consideration across deep learning applications, from image recognition to language processing.
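Why residual connections help can be sketched with a toy scalar model. This assumes each layer is either a plain layer, h_next = w * tanh(h), or a residual layer, h_next = h + w * tanh(h), mirroring the y = x + F(x) form used by residual networks. The weight w = 0.5, the input x0 = 1.0, and the helper `input_gradient` are hypothetical choices for illustration.

```python
import math


def tanh_derivative(x: float) -> float:
    t = math.tanh(x)
    return 1.0 - t * t


def input_gradient(depth: int, x0: float, w: float, residual: bool) -> float:
    """Run a toy scalar stack forward and return d(output)/d(x0) via the chain rule.

    Plain layer:    h_next = w * tanh(h)
    Residual layer: h_next = h + w * tanh(h)
    """
    h = x0
    grad = 1.0
    for _ in range(depth):
        # Local derivative of the branch w * tanh(h), evaluated before h is updated.
        local = w * tanh_derivative(h)
        if residual:
            local += 1.0  # the identity shortcut contributes d(h)/d(h) = 1
            h = h + w * math.tanh(h)
        else:
            h = w * math.tanh(h)
        grad *= local
    return grad


print(f"plain:    {input_gradient(20, 1.0, 0.5, residual=False):.3e}")
print(f"residual: {input_gradient(20, 1.0, 0.5, residual=True):.3e}")
```

In this toy, every plain-layer factor is at most w = 0.5, so the gradient falls below 1e-6 after 20 layers, while the identity shortcut keeps every residual-layer factor at 1 or above. It only illustrates the identity path; real residual networks rely on other measures as well for stable training.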

Vanishing Gradient Usage

- Formal/Technical Tagging:

- Neural Networks

- Deep Learning

- Backpropagation Challenges

- Typical Collocations:

- "vanishing gradient problem"

- "gradient descent issues"

- "training deep networks"

Vanishing Gradient Examples in Context

- The vanishing gradient problem limits the effectiveness of traditional recurrent neural networks when processing long sequences.

- Researchers often use ReLU and other non-saturating functions to counteract the vanishing gradient.

- Batch normalization can help stabilize the gradients, reducing the vanishing effect during training.

Vanishing Gradient FAQ

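- What is the vanishing gradient problem?

It is a phenomenon in which gradients shrink as they propagate backward through a deep network, so the earliest layers receive updates too small for effective learning.

- Why does the vanishing gradient problem occur?

Backpropagation multiplies the local derivatives of every layer together via the chain rule. Saturating activation functions such as sigmoid and tanh have small derivatives (the sigmoid's is at most 0.25), so when many such factors are multiplied, the product becomes too small for the model to improve.

- What are some solutions to the vanishing gradient problem?

Solutions include ReLU activations, careful weight initialization, batch normalization, residual connections, and, for recurrent networks, gated architectures such as LSTMs and GRUs.

- How does the vanishing gradient problem affect RNNs?

An RNN unrolled over a sequence behaves like a very deep network with one layer per time step. Gradients shrink as they flow back through many time steps, which limits the network's ability to learn dependencies across long sequences.

- What is backpropagation?

Backpropagation is the algorithm that computes the gradient of the loss with respect to each weight by applying the chain rule backward, from the output layer to the input layer. An optimizer such as gradient descent then uses these gradients to update the weights.

- Can the vanishing gradient problem be completely prevented?

Not in every model. It can be substantially mitigated with the strategies above, but there is no guarantee it disappears in all architectures.

- Why is ReLU effective against vanishing gradients?

ReLU's derivative is exactly 1 for positive inputs, so it does not shrink gradients passing through active units the way sigmoid and tanh do. Its derivative is 0 for negative inputs, however, which can leave some units permanently inactive ("dying ReLU").

- Are vanishing and exploding gradients the same?

No. Both arise from the same repeated multiplication during backpropagation, but exploding gradients grow too large (when the factors are mostly larger than 1 in magnitude), while vanishing gradients shrink toward zero (when they are mostly smaller than 1).

- How does the vanishing gradient problem affect learning speed?

It slows learning, because layers with tiny gradients receive tiny weight updates and optimization stalls.

- Which neural networks are most affected by vanishing gradients?

Deep feedforward and recurrent networks, especially those with many layers (or long input sequences) and tanh or sigmoid activations, are most affected.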
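The RNN and exploding-gradient answers above can be illustrated with a toy scalar recurrence. This is a minimal sketch assuming a single hidden unit h_t = tanh(w * h_{t-1}) with no inputs or biases, a deliberate simplification rather than a real RNN cell. The helper `rnn_gradient` is hypothetical; it returns dh_T/dh_0 by multiplying one local derivative per time step, and with `use_tanh=False` the recurrence is linear, so the gradient is simply w^T.

```python
import math


def rnn_gradient(w: float, steps: int, h0: float = 0.5, use_tanh: bool = True) -> float:
    """Return d h_T / d h_0 for a toy one-unit recurrence (hypothetical helper).

    use_tanh=True:  h_t = tanh(w * h_{t-1})
    use_tanh=False: h_t = w * h_{t-1}   (linear, so the gradient is w ** steps)
    """
    h = h0
    grad = 1.0
    for _ in range(steps):
        if use_tanh:
            h = math.tanh(w * h)
            # d/dh_{t-1} tanh(w * h_{t-1}) = w * (1 - h_t ** 2)
            grad *= w * (1.0 - h * h)
        else:
            h = w * h
            grad *= w  # each time step contributes one factor of the recurrent weight
    return grad


for w in (0.5, 1.5):
    print(f"w={w}: linear={rnn_gradient(w, 50, use_tanh=False):.3e}, "
          f"tanh={rnn_gradient(w, 50):.3e}")
```

In the linear case over 50 steps, w = 0.5 shrinks the gradient to 0.5^50 (about 8.9e-16), while w = 1.5 inflates it past 1e8, which is the exploding case. With tanh, the same w = 1.5 still vanishes in this toy, because the state settles near h = 0.86, where the local factor w * (1 - h^2) is only about 0.39.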

Vanishing Gradient Related Words

- Categories/Topics:

- Neural Network Training

- AI Optimization

- Deep Learning Challenges

Did you know?

In the early days of deep learning, the vanishing gradient problem was a significant hurdle. The widespread adoption of the ReLU activation function in the early 2010s was one of the key developments that eased it: ReLU's success helped researchers train much deeper networks and contributed to breakthroughs in AI capabilities.
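The difference ReLU makes can be sketched by comparing how activation derivatives compound across layers. This assumes, for illustration only, that every layer has weight 1 and the same positive pre-activation z = 1.0; the helper `chain_of_derivatives` is hypothetical.

```python
import math
from typing import Callable


def sigmoid_derivative(z: float) -> float:
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)


def relu_derivative(z: float) -> float:
    # Convention: treat the derivative at exactly z == 0 as 0.
    return 1.0 if z > 0 else 0.0


def chain_of_derivatives(derivative: Callable[[float], float], z: float, depth: int) -> float:
    """Product of the same activation derivative across `depth` layers (weights assumed to be 1)."""
    product = 1.0
    for _ in range(depth):
        product *= derivative(z)
    return product


depth = 10
z = 1.0  # hypothetical positive pre-activation shared by every layer
print("sigmoid:", chain_of_derivatives(sigmoid_derivative, z, depth))
print("relu:   ", chain_of_derivatives(relu_derivative, z, depth))
```

With all units active, the ReLU product stays at exactly 1, while the sigmoid product drops below 1e-7 after 10 layers. If z were negative, `relu_derivative` would return 0 and the product would be 0, which is the "dying ReLU" weakness mentioned in the FAQ.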

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
