Processor Pipelining

Quick Navigation:

- Processor Pipelining Definition

- Processor Pipelining Explained Easy

- Processor Pipelining Origin

- Processor Pipelining Etymology

- Processor Pipelining Usage Trends

- Processor Pipelining Usage

- Processor Pipelining Examples in Context

- Processor Pipelining FAQ

- Processor Pipelining Related Words

Processor Pipelining Definition

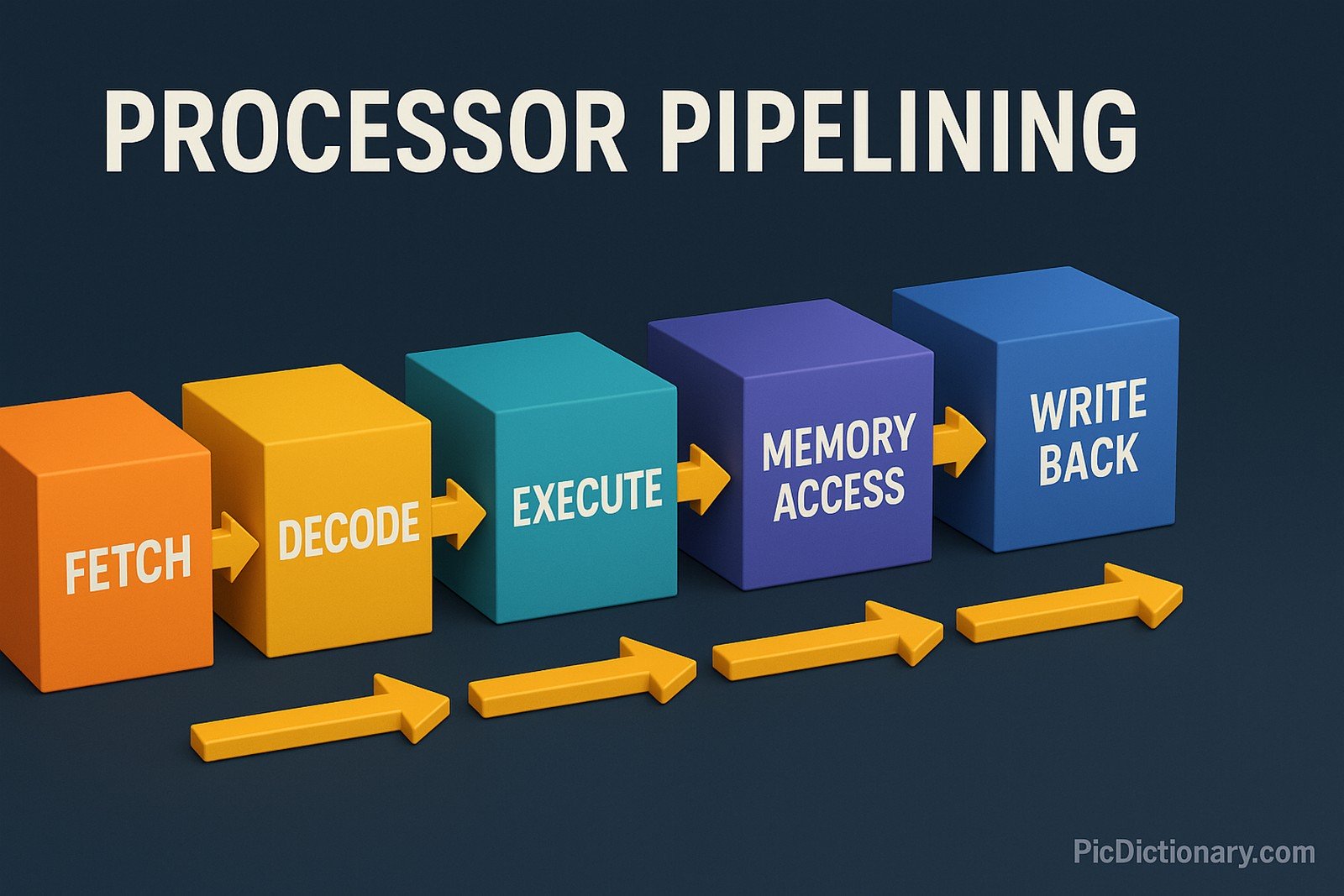

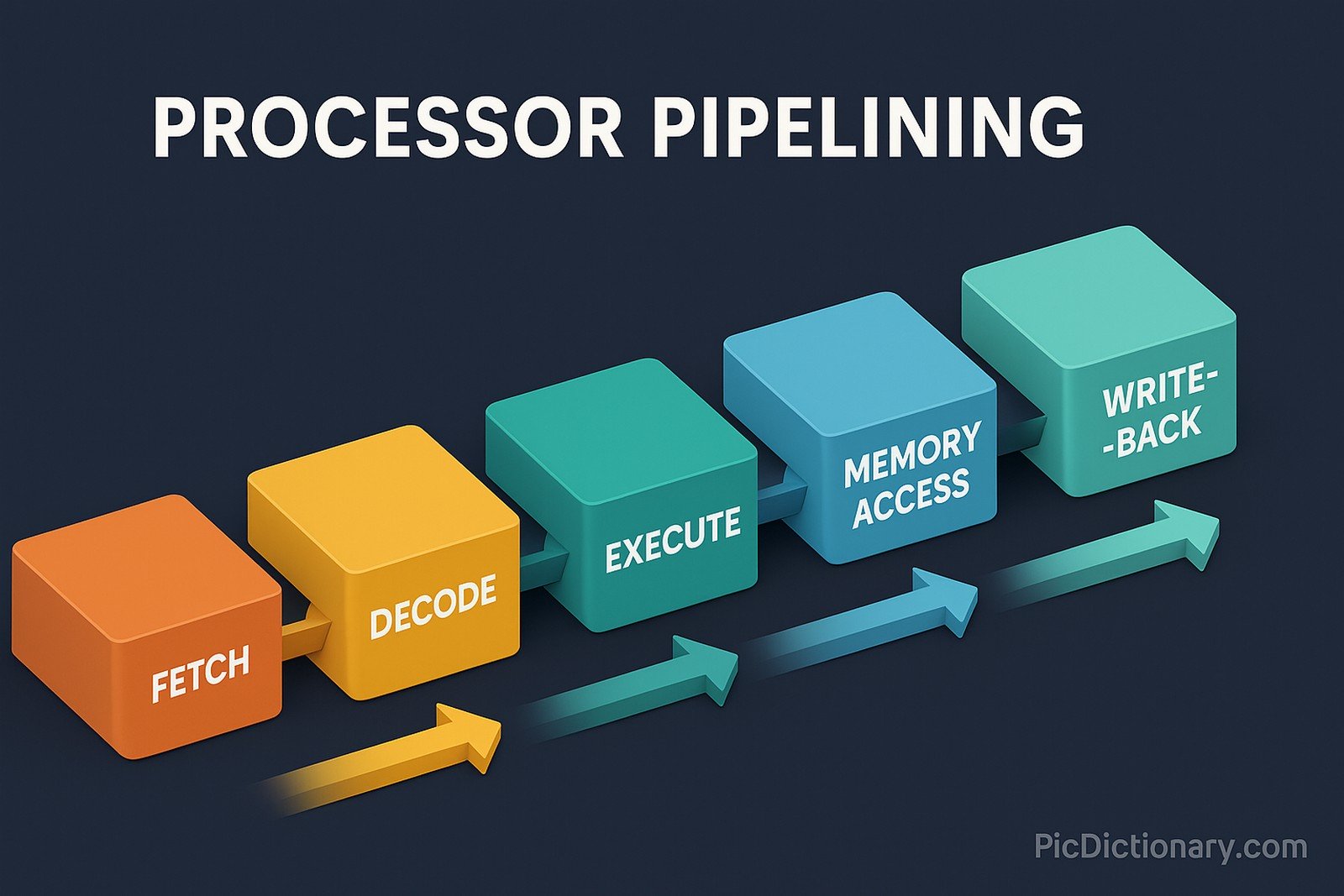

Processor pipelining is a technique in computer architecture in which the processor overlaps the execution of multiple instructions, with each instruction occupying a different stage at any given time. By dividing instruction execution into discrete steps, such as fetch, decode, execute, memory access, and write-back, pipelining keeps every stage of the processor busy. No single instruction finishes faster, but the CPU can have several instructions in flight at once, which increases instruction throughput and reduces idle time. However, data hazards, control hazards, and the pipeline stalls they cause must be managed to maintain efficiency.
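The throughput gain can be estimated with a little arithmetic. The sketch below is a simplified model, not a description of any real CPU: it assumes every stage takes exactly one clock cycle and that no hazards or stalls occur. The function names are made up for illustration.

```python
def unpipelined_cycles(num_instructions: int, num_stages: int) -> int:
    """Each instruction passes through every stage before the next one starts."""
    return num_instructions * num_stages


def pipelined_cycles(num_instructions: int, num_stages: int) -> int:
    """Ideal pipeline: the first instruction needs num_stages cycles to
    travel through the pipeline, then one instruction completes per cycle."""
    if num_instructions == 0:
        return 0
    return num_stages + (num_instructions - 1)


def ideal_speedup(num_instructions: int, num_stages: int) -> float:
    """Ratio of unpipelined to pipelined cycle counts (idealized)."""
    return (unpipelined_cycles(num_instructions, num_stages)
            / pipelined_cycles(num_instructions, num_stages))


if __name__ == "__main__":
    # 100 instructions on a 5-stage pipeline: 500 cycles vs. 104 cycles.
    print(unpipelined_cycles(100, 5), pipelined_cycles(100, 5))
    print(round(ideal_speedup(100, 5), 2))
```

As the number of instructions grows, the ideal speedup approaches the number of stages. Real programs fall short of that limit because of the hazards and stalls mentioned above.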

Processor Pipelining Explained Easy

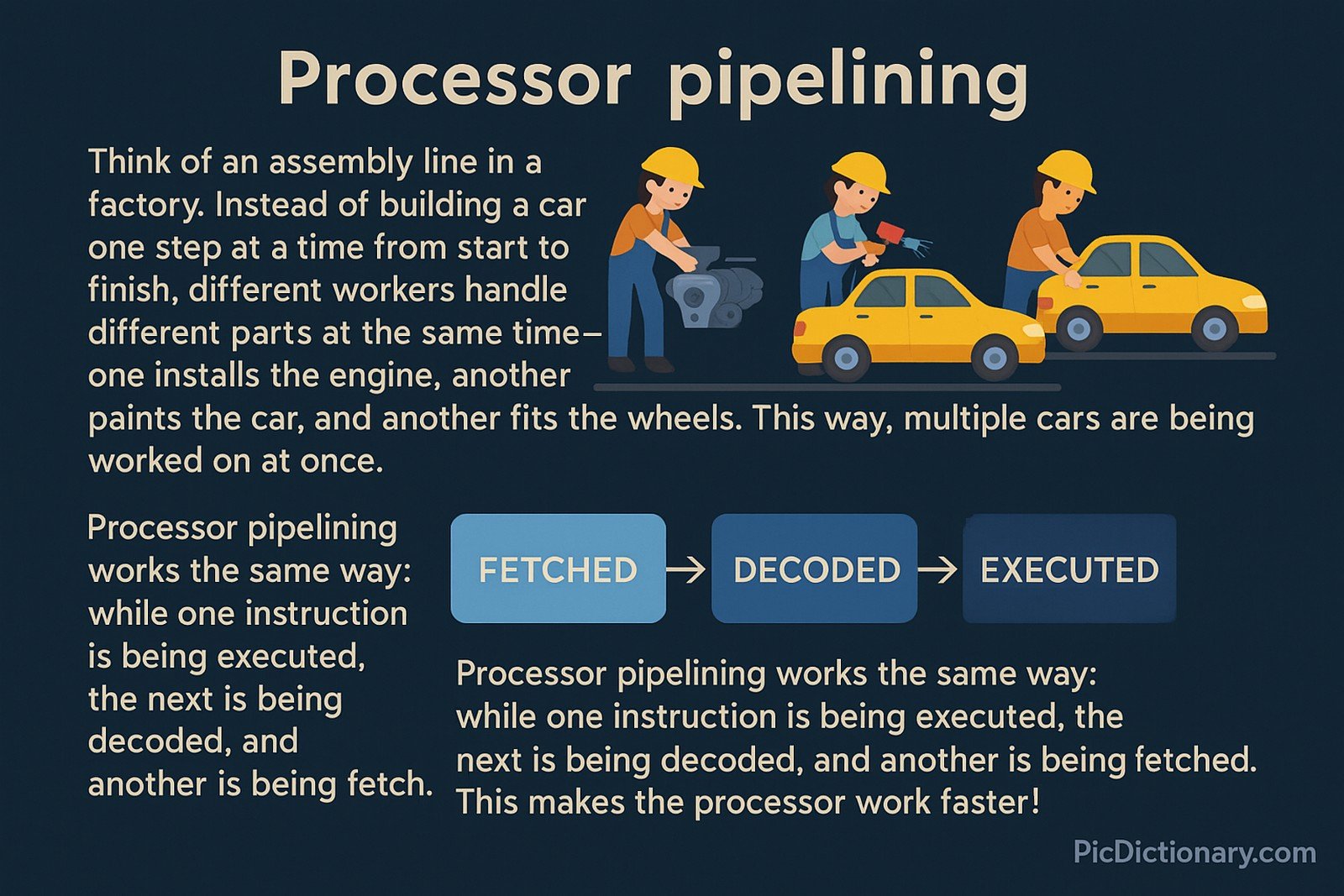

Think of an assembly line in a factory. Instead of building one car from start to finish before starting the next, different workers handle different steps at the same time: one installs the engine, another paints the body, and another fits the wheels. This way, multiple cars are being worked on at once. Processor pipelining works the same way: while one instruction is being executed, the next is being decoded, and a third is being fetched. The processor finishes more instructions in the same amount of time!
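The assembly line can be drawn as a timing diagram. The following sketch prints which stage each instruction occupies in each clock cycle. It assumes the classic five stages (abbreviated here as IF, ID, EX, MEM, WB) and no stalls. The `pipeline_diagram` helper is hypothetical and exists only for this illustration.

```python
STAGES = ["IF", "ID", "EX", "MEM", "WB"]  # fetch, decode, execute, memory, write-back


def pipeline_diagram(num_instructions: int, stages=STAGES) -> list[str]:
    """Return one text row per instruction; each column is one clock cycle."""
    total_cycles = len(stages) + num_instructions - 1
    rows = []
    for i in range(num_instructions):
        cells = []
        for cycle in range(total_cycles):
            # Instruction i enters the first stage in cycle i.
            stage_index = cycle - i
            if 0 <= stage_index < len(stages):
                cells.append(f"{stages[stage_index]:<4}")
            else:
                cells.append(f"{'.':<4}")  # not in the pipeline this cycle
        rows.append(f"I{i + 1}: " + "".join(cells).rstrip())
    return rows


if __name__ == "__main__":
    for row in pipeline_diagram(3):
        print(row)
    # I1: IF  ID  EX  MEM WB  .   .
    # I2: .   IF  ID  EX  MEM WB  .
    # I3: .   .   IF  ID  EX  MEM WB
```

Reading down any column shows the overlap: in the third cycle, I1 is executing, I2 is being decoded, and I3 is being fetched.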

Processor Pipelining Origin

The concept of pipelining dates back to early computing systems in the 1950s and 1960s. The IBM Stretch computer (IBM 7030) was an early machine to use pipelining concepts, and the idea was further refined in the late 1970s and 1980s with RISC (Reduced Instruction Set Computing) architectures. Today, almost all modern processors use some form of pipelining to enhance performance.

Processor Pipelining Etymology

The term "pipelining" originates from the analogy of water flowing through a pipeline: every section of the pipe is in use at the same moment, so new input can enter before earlier input has come out the far end.

Processor Pipelining Usage Trends

Processor pipelining has evolved significantly with advances in microarchitecture. Earlier designs commonly used basic 5-stage pipelines, while many modern processors use deeper pipelines with more than 10 stages. In addition, techniques such as superscalar execution and speculative execution further increase instruction throughput. Pipelining is now standard in CPUs, GPUs, and specialized processing units such as DSPs (Digital Signal Processors).
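The trade-off behind pipeline depth can be sketched with a common textbook-style model. Everything numeric below is a hypothetical assumption chosen for illustration, not data about any real processor: the total logic delay, the per-stage latch overhead, the fraction of branch instructions, the misprediction rate, and the rule that a mispredicted branch wastes roughly `depth - 1` cycles.

```python
def cycle_time_ns(total_logic_delay_ns: float, depth: int,
                  latch_overhead_ns: float) -> float:
    """Splitting the same logic over more stages shortens each cycle,
    but every stage adds a fixed latch overhead."""
    return total_logic_delay_ns / depth + latch_overhead_ns


def effective_cpi(branch_fraction: float, mispredict_rate: float,
                  flush_penalty_cycles: float, base_cpi: float = 1.0) -> float:
    """Average cycles per instruction once branch flushes are included."""
    return base_cpi + branch_fraction * mispredict_rate * flush_penalty_cycles


def time_per_instruction_ns(depth: int,
                            total_logic_delay_ns: float = 10.0,  # assumed
                            latch_overhead_ns: float = 0.1,      # assumed
                            branch_fraction: float = 0.2,        # assumed
                            mispredict_rate: float = 0.1) -> float:  # assumed
    # Assumption: a mispredicted branch throws away about (depth - 1) cycles.
    penalty = depth - 1
    cpi = effective_cpi(branch_fraction, mispredict_rate, penalty)
    return cpi * cycle_time_ns(total_logic_delay_ns, depth, latch_overhead_ns)


if __name__ == "__main__":
    for depth in (5, 10, 20):
        print(depth, round(time_per_instruction_ns(depth), 3))
```

Under these assumed numbers, the deeper pipeline still wins overall because its clock cycle is much shorter, but its cycles per instruction get worse. This is one reason deep pipelines are usually paired with branch prediction and speculative execution.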

Processor Pipelining Usage

- Formal/Technical Tagging:

  - Computer Architecture

  - CPU Design

  - Instruction Processing

- Typical Collocations:

  - "pipeline stall"

  - "instruction pipeline"

  - "pipeline depth"

  - "hazard mitigation in pipelining"

Processor Pipelining Examples in Context

- Modern processors like Intel and AMD CPUs use deep pipelining to execute multiple instructions simultaneously.

- GPUs use pipelining extensively to process large numbers of graphical computations in parallel.

- Branch prediction techniques reduce pipeline stalls, which significantly improves processor speed.
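To make the branch prediction example concrete, here is a minimal sketch of one well-known scheme, the two-bit saturating counter. It is a toy model with a single counter. Real predictors keep many counters indexed by branch address or history, and the class and function names here are invented for this example.

```python
class TwoBitPredictor:
    """States 0 and 1 predict 'not taken'; states 2 and 3 predict 'taken'."""

    def __init__(self, initial_state: int = 1):
        self.state = initial_state

    def predict(self) -> bool:
        return self.state >= 2

    def update(self, taken: bool) -> None:
        # Move one step toward the observed outcome, saturating at 0 and 3.
        if taken:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)


def count_mispredictions(outcomes, predictor=None) -> int:
    """Run the predictor over a sequence of real branch outcomes."""
    if predictor is None:
        predictor = TwoBitPredictor()
    misses = 0
    for taken in outcomes:
        if predictor.predict() != taken:
            misses += 1  # in a real pipeline this would cost a flush
        predictor.update(taken)
    return misses


if __name__ == "__main__":
    # A loop branch: taken 9 times, then falls through once.
    loop = [True] * 9 + [False]
    print(count_mispredictions(loop))      # 2 (first iteration and loop exit)
    print(count_mispredictions(loop * 2))  # 3 (second run misses only at exit)
```

Because the counter needs two wrong guesses in a row to flip its prediction, a single loop exit does not make it forget that the branch is usually taken.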

Processor Pipelining FAQ

- What is processor pipelining?

Processor pipelining is a technique in CPU design that overlaps the stages of successive instructions so that several instructions are in progress at once, improving throughput.

- How does pipelining improve CPU performance?

By overlapping instruction execution stages, pipelining increases throughput and reduces the time that hardware stages sit idle.

- What are pipeline hazards?

Pipeline hazards are situations that stall or disrupt the pipeline: data hazards (an instruction needs a result that is not yet available), control hazards (the next instruction is not known until a branch resolves), and structural hazards (two instructions need the same hardware resource).

- What is a pipeline stall?

A pipeline stall occurs when an instruction cannot advance to the next stage, typically because of a hazard, so the processor has to wait for one or more cycles before continuing execution.

- What is the difference between a 5-stage pipeline and a deep pipeline?

A 5-stage pipeline includes fetch, decode, execute, memory access, and write-back stages, whereas a deep pipeline splits the same work into more, shorter stages, which typically allows a higher clock speed.

- Can pipelining be used in GPUs?

Yes, GPUs use extensive pipelining to handle complex parallel computations efficiently.

- How does branch prediction help in pipelining?

Branch prediction minimizes control hazards by guessing the outcome of conditional branches, allowing the pipeline to keep fetching instructions instead of waiting for the branch to resolve. When a guess is wrong, the instructions fetched along the wrong path are discarded.

- Is pipelining used in RISC and CISC architectures?

Yes, both RISC and CISC architectures use pipelining, but RISC architectures are generally better suited to pipelined execution because of their simpler instruction sets.

- What is superscalar pipelining?

A superscalar processor issues more than one instruction per clock cycle by using multiple execution units, so several instructions execute in parallel.

- Are there any drawbacks to pipelining?

Yes, pipelining introduces complexity in handling hazards and requires sophisticated control mechanisms to avoid performance losses due to stalls.
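The data hazards and stalls described in the FAQ can be illustrated with a small counting model. This is a sketch under stated assumptions, not how any particular CPU works: a classic 5-stage pipeline, no forwarding, and a register file that can be written and then read within the same cycle. The `Instr` type and `count_raw_stalls` function are hypothetical names.

```python
from typing import NamedTuple, Optional


class Instr(NamedTuple):
    dest: Optional[str]  # register written by the instruction, if any
    srcs: tuple          # registers read by the instruction


def count_raw_stalls(program) -> int:
    """Count stall cycles caused by read-after-write (RAW) data hazards."""
    # Assumption: a result written in WB is readable by an instruction
    # whose decode (ID) stage is at least 3 cycles after the producer's ID.
    writeback_delay = 3
    ready_cycle = {}   # register -> earliest ID cycle at which it can be read
    next_id_cycle = 0  # ID cycle the next instruction would get with no stall
    stalls = 0
    for instr in program:
        earliest = next_id_cycle
        for reg in instr.srcs:
            earliest = max(earliest, ready_cycle.get(reg, 0))
        stalls += earliest - next_id_cycle
        if instr.dest is not None:
            ready_cycle[instr.dest] = earliest + writeback_delay
        next_id_cycle = earliest + 1
    return stalls


if __name__ == "__main__":
    dependent = [
        Instr("r1", ("r2", "r3")),  # r1 = r2 + r3
        Instr("r4", ("r1", "r5")),  # needs r1 immediately
    ]
    print(count_raw_stalls(dependent))  # 2

    spaced = [
        Instr("r1", ("r2", "r3")),
        Instr("r6", ("r7", "r8")),  # independent work fills one slot
        Instr("r4", ("r1", "r5")),
    ]
    print(count_raw_stalls(spaced))     # 1
```

The second program shows why compilers and processors reorder instructions: placing independent work between a producer and its consumer hides part of the wait.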

Processor Pipelining Related Words

- Categories/Topics:

  - Computer Architecture

  - Instruction-Level Parallelism

  - CPU Optimization

Did you know?

Processor pipelining is crucial in gaming! Modern gaming consoles and high-end PCs rely on deeply pipelined processors with branch prediction and superscalar execution to ensure smooth gameplay at high frame rates. Without pipelining, advanced 3D rendering and AI processing in modern games would be significantly slower.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun, handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
