Thread-Level Parallelism

(Representational Image | Source: Dall-E)

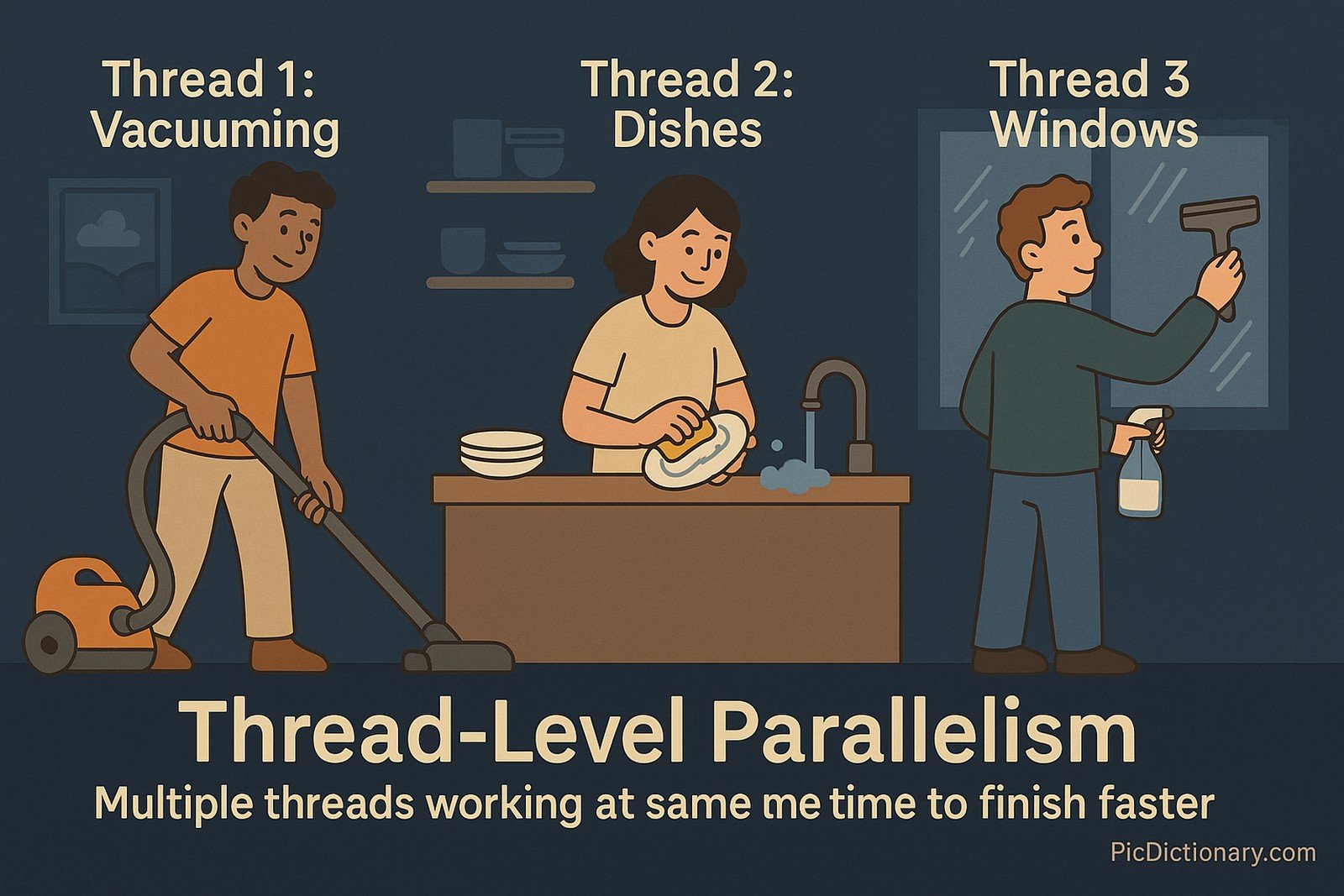

Quick Navigation:

- Thread-Level Parallelism Definition

- Thread-Level Parallelism Explained Easy

- Thread-Level Parallelism Origin

- Thread-Level Parallelism Etymology

- Thread-Level Parallelism Usage Trends

- Thread-Level Parallelism Usage

- Thread-Level Parallelism Examples in Context

- Thread-Level Parallelism FAQ

- Thread-Level Parallelism Related Words

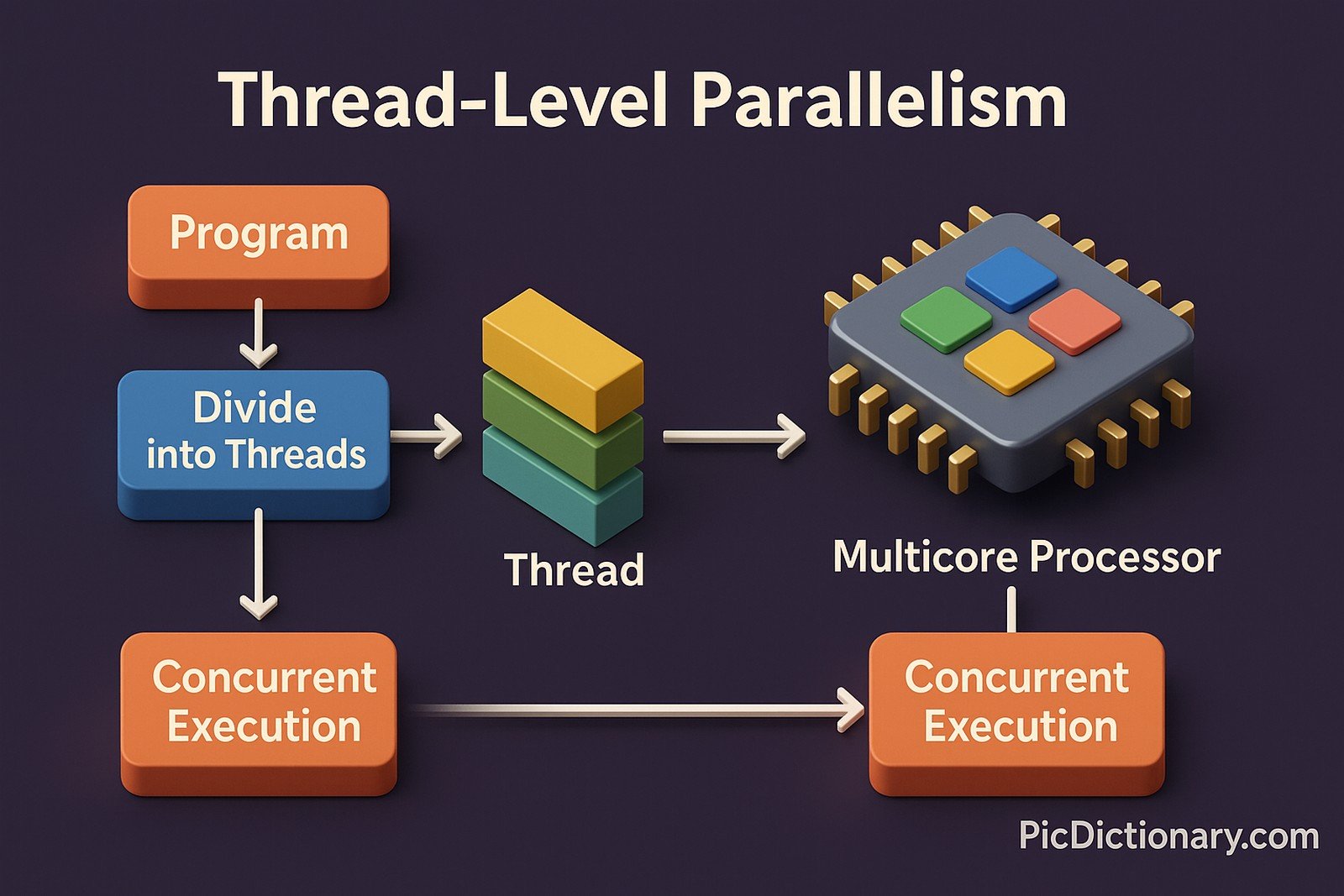

Thread-Level Parallelism Definition

Thread-Level Parallelism (TLP) is a computer architecture concept in which multiple threads execute simultaneously to improve overall system performance. It is common in multi-core and multi-threaded processors, where work is divided into threads that run concurrently. TLP is a critical component of modern computing, enabling better resource utilization, reduced execution time, and improved responsiveness in applications like gaming, scientific computing, and data processing.

Thread-Level Parallelism Explained Easy

Imagine you have to clean a house, but instead of doing it alone, you and your friends split the tasks. One person vacuums, another washes the dishes, and someone else cleans the windows—all at the same time. This way, the house gets cleaned much faster. Thread-Level Parallelism works the same way in computers: instead of one task running at a time, multiple smaller tasks (threads) run simultaneously, making everything faster and more efficient.
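The house-cleaning picture can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the chore names, the `do_chore` and `clean_house` helpers, and the 0.2-second durations are all made up for the example, and `time.sleep` stands in for real work.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical chores from the analogy; each duration is an assumed value.
CHORES = {"vacuum": 0.2, "wash dishes": 0.2, "clean windows": 0.2}


def do_chore(name: str, seconds: float) -> str:
    """Simulate one helper doing one chore."""
    time.sleep(seconds)  # placeholder for actual work
    return f"{name} done"


def clean_house() -> tuple[list[str], float]:
    """Run every chore on its own thread; return the results and elapsed time."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=len(CHORES)) as pool:
        futures = [pool.submit(do_chore, name, secs) for name, secs in CHORES.items()]
        results = [f.result() for f in futures]  # wait for every helper to finish
    return results, time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = clean_house()
    print(results)
    print(f"elapsed: {elapsed:.2f}s, one after another: about {sum(CHORES.values()):.2f}s")
```

Because the three chores overlap, the elapsed time should be close to the longest single chore rather than the sum of all three.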

Thread-Level Parallelism Origin

The concept of parallel computing has existed for decades, but Thread-Level Parallelism gained prominence in the late 1990s and early 2000s, as simultaneous multithreading and multi-core processors reached the market. As software applications demanded more computational power, chip manufacturers began designing processors that could execute multiple threads simultaneously. Today, TLP is a fundamental aspect of modern CPUs and GPUs.

Thread-Level Parallelism Etymology

The term “Thread-Level Parallelism” derives from:

- Thread: A sequence of executable instructions within a process.

- Level: Indicating the degree or hierarchy at which parallel execution occurs.

- Parallelism: The simultaneous execution of multiple computations.

Thread-Level Parallelism Usage Trends

In recent years, TLP has gained significant traction as multi-core and hyper-threaded processors have become standard in both consumer and enterprise-grade systems. Cloud computing, artificial intelligence, and high-performance computing rely heavily on TLP for efficient execution of workloads. As the demand for real-time processing grows, industries continue to optimize software and hardware architectures to maximize TLP.

Thread-Level Parallelism Usage

- Formal/Technical Tagging:

- Multi-threading

- Parallel Computing

- Concurrent Execution

- Typical Collocations:

- “multi-threaded execution”

- “thread-level parallel computing”

- “parallel processing with threads”

- “scalable thread execution”

Thread-Level Parallelism Examples in Context

- Web browsers utilize TLP to render multiple web pages simultaneously, ensuring a smoother browsing experience.

- Video games use TLP to manage physics simulations, AI behavior, and graphics rendering concurrently.

- Data processing systems leverage TLP to run complex computations across multiple threads, improving efficiency.
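The data processing example above can be sketched as follows: split the input into chunks, hand each chunk to a worker thread, and combine the partial results. The function names and the sum-of-squares workload are assumptions chosen for illustration. Note that in standard CPython builds the global interpreter lock keeps pure-Python, CPU-bound threads from running in parallel, so this shows the structure of the technique rather than a guaranteed speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data: list[int], n_chunks: int) -> list[list[int]]:
    """Split data into at most n_chunks contiguous slices of near-equal size."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def sum_of_squares(chunk: list[int]) -> int:
    """Work done by one thread on its own slice (hypothetical workload)."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data: list[int], n_threads: int = 4) -> int:
    """Process each chunk on a worker thread, then combine the partial results."""
    chunks = split_into_chunks(data, n_threads)
    with ThreadPoolExecutor(max_workers=n_threads) as pool:
        partials = pool.map(sum_of_squares, chunks)
        return sum(partials)


if __name__ == "__main__":
    numbers = list(range(1_000))
    print(parallel_sum_of_squares(numbers))
```

Each thread touches only its own chunk, so no locking is needed until the partial sums are combined on the calling thread.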

Thread-Level Parallelism FAQ

- What is Thread-Level Parallelism?

Thread-Level Parallelism is a computing technique that allows multiple threads to execute simultaneously, improving performance and efficiency.

- How does TLP differ from instruction-level parallelism?

TLP parallelizes entire threads, while instruction-level parallelism (ILP) optimizes execution within a single thread.

- Why is TLP important in modern computing?

It enables better multitasking, efficient CPU utilization, and faster execution of complex applications.

- Which processors support Thread-Level Parallelism?

Most modern CPUs and GPUs, including Intel, AMD, and ARM processors, support multi-threading and TLP.

- How does multi-threading relate to TLP?

Multi-threading is a technique that implements TLP by allowing multiple threads to run within a single process.

- Can TLP improve gaming performance?

Yes, games benefit from TLP as it allows graphics, physics, and AI processing to run concurrently.

- What are some challenges of implementing TLP?

Synchronization issues, race conditions, and thread management complexity can arise.

- Is TLP used in artificial intelligence?

Yes, AI workloads, particularly in deep learning, leverage TLP for faster model training and inference.

- How does TLP benefit cloud computing?

Cloud platforms distribute workloads across multiple threads and cores, optimizing resource usage.

- What programming languages support TLP?

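Languages like C++, Java, and Python provide multi-threading libraries for implementing TLP. In standard CPython builds, the global interpreter lock lets only one thread execute Python bytecode at a time, so CPU-bound Python code often relies on multiple processes or native extensions for true parallelism.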
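The race conditions mentioned in the FAQ typically arise when several threads update shared data without coordination. A minimal sketch of one common remedy, a lock around the shared update, might look like this. `SafeCounter` and `run_workers` are hypothetical names for this example, and the thread and iteration counts are arbitrary.

```python
import threading


class SafeCounter:
    """A counter whose increments are serialized by a lock."""

    def __init__(self) -> None:
        self._value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # The read-modify-write below is not atomic; without the lock, two
        # threads could read the same old value and one update would be lost.
        with self._lock:
            self._value += 1

    @property
    def value(self) -> int:
        with self._lock:
            return self._value


def run_workers(n_threads: int = 4, increments_per_thread: int = 10_000) -> int:
    """Increment one shared counter from several threads and return the total."""
    counter = SafeCounter()

    def worker() -> None:
        for _ in range(increments_per_thread):
            counter.increment()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every worker before reading the result
    return counter.value


if __name__ == "__main__":
    print(run_workers())
```

The lock makes the final count equal to the number of threads times the increments per thread, at the cost of serializing that one step. Keeping the locked region small is a common way to limit the overhead.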

Thread-Level Parallelism Related Words

- Categories/Topics:

- Computer Architecture

- High-Performance Computing

- Multi-threading

Did you know?

Thread-Level Parallelism plays a crucial role in space exploration! NASA uses TLP in their onboard computing systems to process vast amounts of data from spacecraft sensors, enabling real-time decision-making and autonomous navigation.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
