DropBlock Regularization

Quick Navigation:

- DropBlock Regularization Definition

- DropBlock Regularization Explained Easy

- DropBlock Regularization Origin

- DropBlock Regularization Etymology

- DropBlock Regularization Usage Trends

- DropBlock Regularization Usage

- DropBlock Regularization Examples in Context

- DropBlock Regularization FAQ

- DropBlock Regularization Related Words

DropBlock Regularization Definition

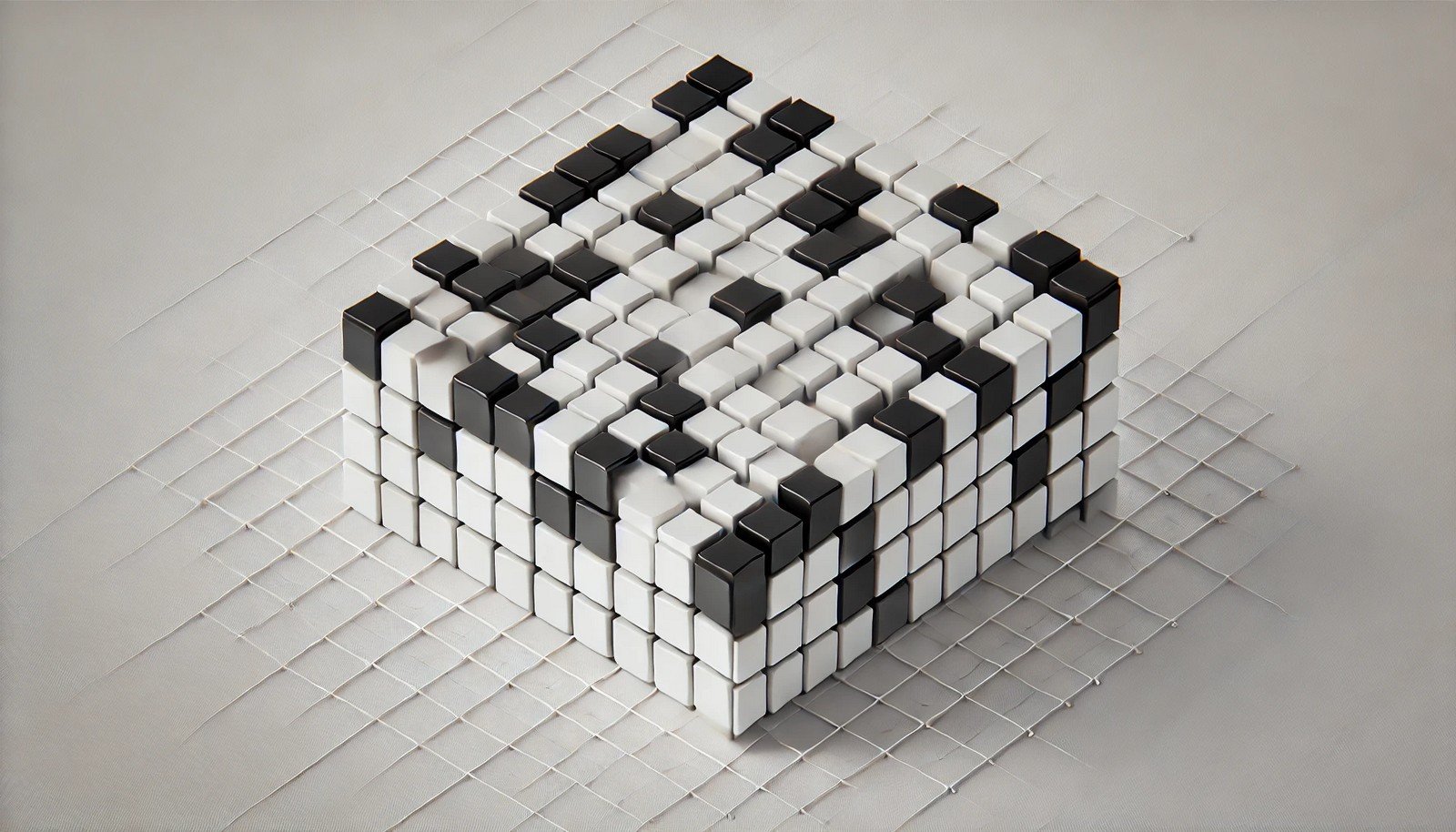

DropBlock Regularization is a technique used in deep learning to prevent overfitting by randomly dropping contiguous blocks of activations within a feature map during training. This strategy reduces the model's dependency on specific features and encourages it to generalize across a broader range of input variations. Dropout randomly zeros out individual units, while DropBlock zeros out entire regions of a feature map. Neighboring activations in a convolutional feature map are strongly correlated, so information removed by dropping a single unit can still reach later layers through its neighbors. Dropping a whole block removes that information, which is why DropBlock has been shown to be more effective than Dropout in convolutional neural networks (CNNs) for tasks like image recognition.
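To make the contrast with Dropout concrete, here is a minimal pure-Python sketch that builds both kinds of mask for a single 5x5 feature map (1 = keep, 0 = drop). The helper names `dropout_mask` and `block_mask` are hypothetical and chosen for illustration. To keep the shape difference easy to see, `block_mask` takes its block centres as given rather than sampling them.

```python
import random

# Hypothetical helpers for illustration only; not taken from any library.


def dropout_mask(height, width, drop_prob, rng):
    """Dropout-style mask: each unit is dropped (0) independently with drop_prob."""
    return [[0 if rng.random() < drop_prob else 1 for _ in range(width)]
            for _ in range(height)]


def block_mask(height, width, seeds, block_size):
    """DropBlock-style mask: each seed (row, col) zeroes a block_size x block_size
    square around it (centred for odd block_size), clipped at the map border."""
    mask = [[1] * width for _ in range(height)]
    half = block_size // 2
    for r, c in seeds:
        for i in range(r - half, r - half + block_size):
            for j in range(c - half, c - half + block_size):
                if 0 <= i < height and 0 <= j < width:
                    mask[i][j] = 0
    return mask


rng = random.Random(0)
# Dropout: about 36% of units dropped, scattered across the map.
for row in dropout_mask(5, 5, drop_prob=0.36, rng=rng):
    print(row)
print()
# DropBlock: one 3x3 block (9 of 25 units, i.e. 36%) dropped as one region.
for row in block_mask(5, 5, seeds=[(2, 2)], block_size=3):
    print(row)
# [1, 1, 1, 1, 1]
# [1, 0, 0, 0, 1]
# [1, 0, 0, 0, 1]
# [1, 0, 0, 0, 1]
# [1, 1, 1, 1, 1]
```

Both masks remove roughly the same fraction of units. The Dropout holes are isolated, so each dropped unit is still surrounded by correlated neighbours. The DropBlock hole removes a whole region.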

DropBlock Regularization Explained Easy

Imagine you're playing a memory game where you must remember an entire pattern on a grid. But some blocks in the pattern are randomly covered, forcing you to focus on the overall picture rather than just certain details. DropBlock works like this in deep learning: by blocking out areas of the "picture" (the feature map), it makes the computer learn the whole pattern, not just parts of it.

DropBlock Regularization Origin

DropBlock Regularization emerged in response to the limitations of traditional Dropout in handling spatial dependencies within convolutional neural networks. It was proposed by Golnaz Ghiasi, Tsung-Yi Lin, and Quoc V. Le of Google Brain in the paper "DropBlock: A regularization method for convolutional networks" (NeurIPS 2018). Their aim was to improve generalization on complex image datasets by enforcing spatial regularization, especially for models with many convolutional layers.

DropBlock Regularization Etymology

The term "DropBlock" combines "Drop," as in Dropout regularization, with "Block," indicating that contiguous blocks of a feature map, rather than individual units, are "dropped" or zeroed out.

DropBlock Regularization Usage Trends

DropBlock Regularization has gained traction in computer vision tasks due to its improved performance in models that rely on spatial structure, like image classification and object detection. Researchers frequently use DropBlock in state-of-the-art CNNs when Dropout fails to sufficiently regularize large, high-dimensional layers.

DropBlock Regularization Usage

- Formal/Technical Tagging:

- Neural Networks

- Regularization

- Convolutional Neural Networks

- Typical Collocations:

- "DropBlock Regularization"

- "feature map blocking"

- "DropBlock in deep learning"

- "DropBlock for CNNs"

DropBlock Regularization Examples in Context

- DropBlock Regularization improved the performance of a CNN model by reducing overfitting during training.

- In image recognition, DropBlock has been applied to drop random regions of intermediate feature maps, encouraging the network to capture broader patterns.

- Several high-performing CNN architectures use DropBlock as a regularization technique.

DropBlock Regularization FAQ

- What is DropBlock Regularization?

DropBlock Regularization is a technique that randomly drops contiguous blocks of activations in feature maps during training, improving model generalization.

- How is DropBlock different from Dropout?

Dropout drops individual units independently, while DropBlock drops entire square regions. This makes DropBlock more effective in convolutional layers, where a dropped unit's information can otherwise leak through its correlated neighbors.

- What are the benefits of using DropBlock?

DropBlock reduces overfitting, especially in CNNs, by making the model less reliant on specific features.

- When should DropBlock be used?

DropBlock is most effective in convolutional neural networks, particularly for image classification and object detection.

- Is DropBlock only for CNNs?

It is primarily used in CNNs, but it could in principle be adapted to other architectures.

- Does DropBlock affect model performance?

DropBlock can improve model performance by enhancing generalization, though its hyperparameters (the block size and the keep probability) usually need tuning.

- How is DropBlock implemented?

During each training step, DropBlock samples random seed positions in a feature map and zeroes a square block of activations around each seed. It then rescales the remaining activations so that their overall magnitude is preserved. At inference time it is turned off.

- Does DropBlock require additional computational power?

DropBlock adds little computation. Generating and applying the mask is cheap, and because it only runs during training, it adds no cost at inference.

- Why is DropBlock preferred over other regularization methods in CNNs?

Its masks follow the spatial structure of feature maps. Removing whole regions forces the network to use evidence from other parts of the image, which matters for tasks that rely on spatial coherence.

- Can DropBlock be used with other regularization methods?

Yes, DropBlock can complement other methods, enhancing model robustness.
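The implementation steps described in the FAQ can be written out as a minimal pure-Python sketch. It follows the algorithm described in the original DropBlock paper:

1. Sample block seeds with probability gamma.
2. Zero a block_size x block_size square for each seed.
3. Rescale the surviving activations by count(M) / count_ones(M).

The paper states gamma for a square feat_size x feat_size map as (1 - keep_prob) / block_size^2 * feat_size^2 / (feat_size - block_size + 1)^2. The sketch makes several assumptions:

- The input is a single-channel feature map stored as nested lists.
- Seeds are sampled only where a full block fits, as in the paper, and are indexed by the block's top-left corner.
- The gamma formula is generalized to height x width maps.
- If every unit is dropped, the function returns zeros instead of dividing by zero.

All function names are hypothetical. `linear_keep_prob` sketches the paper's recommendation to decrease keep_prob linearly from 1.0 to its target during training instead of fixing it.

```python
import random

# Hypothetical names for illustration; a sketch, not a library implementation.


def compute_gamma(keep_prob, block_size, height, width):
    """Seed probability so that roughly (1 - keep_prob) of units are dropped.
    The paper gives this for square maps; height x width is our assumption.
    Overlapping blocks make the dropped fraction approximate."""
    valid = (height - block_size + 1) * (width - block_size + 1)
    return (1.0 - keep_prob) / block_size ** 2 * (height * width) / valid


def drop_block(feature_map, keep_prob, block_size, training=True, rng=None):
    """Apply DropBlock to one 2-D feature map (list of lists of floats)."""
    if not training or keep_prob >= 1.0:
        return [row[:] for row in feature_map]  # identity at inference time
    height, width = len(feature_map), len(feature_map[0])
    if not 1 <= block_size <= min(height, width):
        raise ValueError("block_size must fit inside the feature map")
    if rng is None:
        rng = random.Random()
    gamma = compute_gamma(keep_prob, block_size, height, width)

    # Steps 1-2: draw Bernoulli(gamma) seeds only where a whole block fits
    # (top-left corner indexing) and zero every unit the block covers.
    mask = [[1] * width for _ in range(height)]
    for i in range(height - block_size + 1):
        for j in range(width - block_size + 1):
            if rng.random() < gamma:
                for di in range(block_size):
                    for dj in range(block_size):
                        mask[i + di][j + dj] = 0

    # Step 3: apply the mask and rescale by count(M) / count_ones(M).
    ones = sum(sum(row) for row in mask)
    if ones == 0:  # everything dropped; avoid division by zero (our choice)
        return [[0.0] * width for _ in range(height)]
    scale = (height * width) / ones
    return [[value * keep * scale for value, keep in zip(row, mask_row)]
            for row, mask_row in zip(feature_map, mask)]


def linear_keep_prob(step, total_steps, target_keep_prob):
    """Scheduled DropBlock: lower keep_prob linearly from 1.0 to the target."""
    progress = min(step / total_steps, 1.0)
    return 1.0 - progress * (1.0 - target_keep_prob)


# Usage on an 8x8 map of ones; dropped units become 0, the rest are scaled up.
fmap = [[1.0] * 8 for _ in range(8)]
out = drop_block(fmap, keep_prob=0.8, block_size=3, rng=random.Random(42))
for row in out:
    print([round(v, 2) for v in row])
# Halfway through training with a 0.8 target, keep_prob is about 0.9.
print(linear_keep_prob(5_000, 10_000, 0.8))
```

The loops spell out each step. In a real network the mask would be applied per channel or shared across channels, and framework implementations vectorize it.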

DropBlock Regularization Related Words

- Categories/Topics:

- Neural Networks

- Deep Learning

- Convolutional Neural Networks

- Computer Vision

Did you know?

In the original DropBlock experiments, a ResNet-50 trained with DropBlock reached 78.13% accuracy on ImageNet, more than 1.6 percentage points above the baseline. DropBlock also regularized the network better than traditional Dropout in those experiments.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
