Leaky ReLU


Leaky ReLU Definition

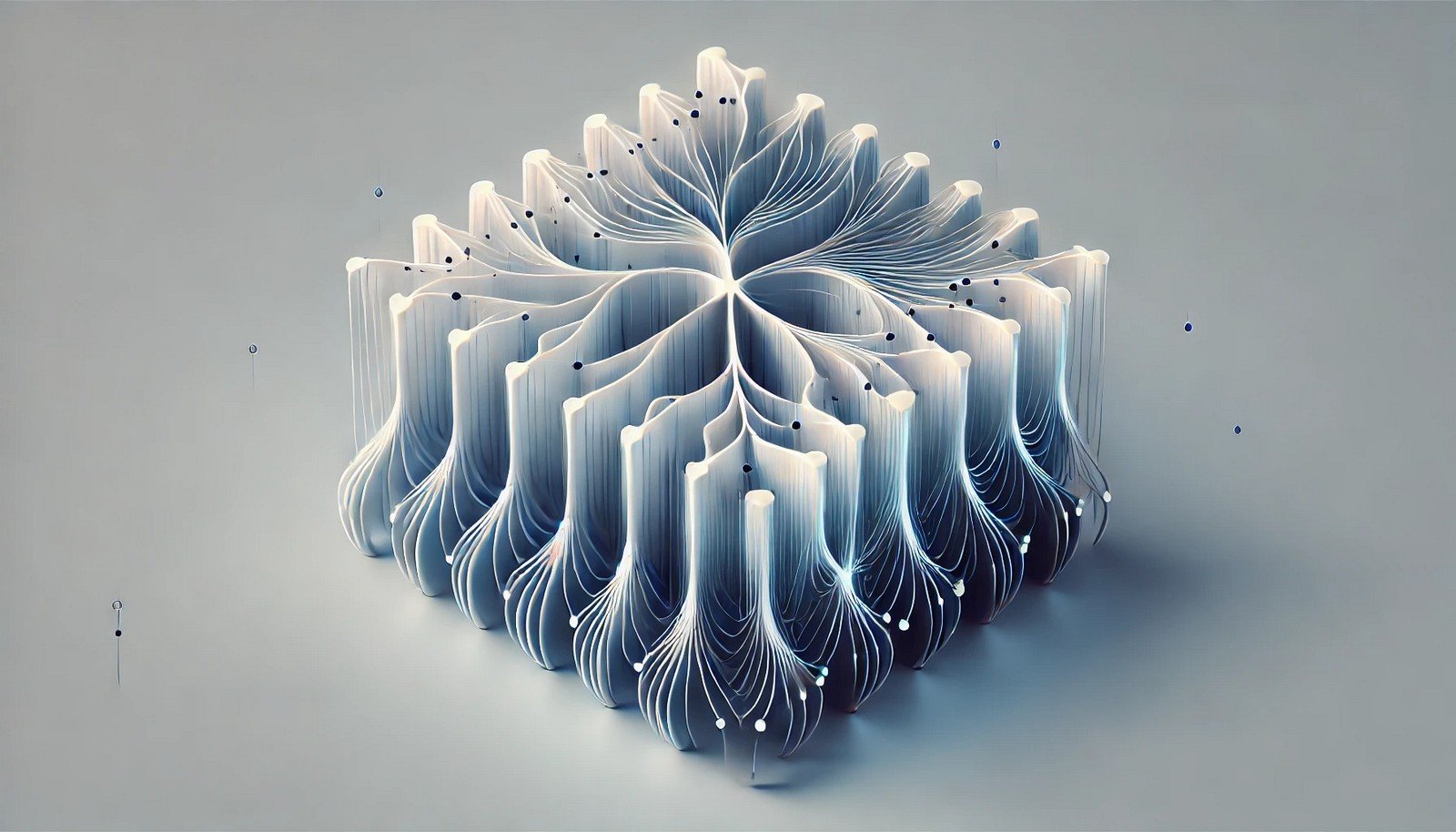

Leaky ReLU (Leaky Rectified Linear Unit) is an activation function used in artificial neural networks, especially deep learning models. For negative inputs it applies a small, non-zero slope instead of outputting zero as standard ReLU does, so a small gradient still passes through. This property mitigates the “dying ReLU” problem, in which neurons that only receive negative inputs get a zero gradient and stop updating, and so helps networks keep learning during training.
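Written as a formula, the definition above can be sketched as follows. The symbol $\alpha$ is introduced here for the small negative-side slope; 0.01 is a commonly used value, not a fixed constant.

```latex
f(x) =
\begin{cases}
x, & x > 0 \\
\alpha x, & x \le 0
\end{cases}
\qquad
f'(x) =
\begin{cases}
1, & x > 0 \\
\alpha, & x < 0
\end{cases}
```

Setting $\alpha = 0$ recovers standard ReLU, whose gradient is zero for every negative input.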

Leaky ReLU Explained Easy

Think of Leaky ReLU as a friendlier door. While most doors (like regular ReLU) stay shut for anyone not strong enough (negative values), Leaky ReLU is like a door that still allows a bit of air through, even if someone isn’t knocking very hard. This way, the network keeps a small flow going, helping it learn better in the long run.

Leaky ReLU Origin

Leaky ReLU emerged in the context of deep learning to improve upon limitations of the traditional ReLU function. It was introduced to combat the issue of neurons “dying” or becoming inactive when encountering too many negative values during training.
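The “dying” behaviour can be illustrated with a toy example. The sketch below is only an illustration under stated assumptions: a single-input neuron with no bias, a made-up upstream gradient, and hypothetical helper names (`relu_grad`, `leaky_relu_grad`, `sgd_step`) that do not come from any library.

```python
def relu_grad(z):
    """Derivative of ReLU with respect to its input (0 is used at z == 0)."""
    return 1.0 if z > 0 else 0.0


def leaky_relu_grad(z, negative_slope=0.01):
    """Derivative of Leaky ReLU; negative_slope=0.01 is an illustrative default."""
    return 1.0 if z > 0 else negative_slope


def sgd_step(weight, x, upstream_grad, act_grad, lr=0.1):
    """One gradient-descent step for a single-input neuron with no bias.

    The pre-activation is z = weight * x, so by the chain rule
    dL/dweight = upstream_grad * act'(z) * x.
    """
    z = weight * x
    return weight - lr * upstream_grad * act_grad(z) * x


# Hypothetical neuron stuck with a negative pre-activation (z = -2.0 * 1.0).
w0, x, upstream = -2.0, 1.0, -1.0

w_relu = sgd_step(w0, x, upstream, relu_grad)         # gradient is zero
w_leaky = sgd_step(w0, x, upstream, leaky_relu_grad)  # gradient is small but non-zero

print(w_relu)   # weight is unchanged under ReLU
print(w_leaky)  # weight moves slightly under Leaky ReLU
```

With ReLU the weight never changes while the pre-activation stays negative, so the neuron cannot recover. With Leaky ReLU each step still nudges the weight a little.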

Leaky ReLU Etymology

The term "leaky" refers to the small allowance given for negative values, akin to a “leak,” letting some gradient pass through rather than fully blocking it.

Leaky ReLU Usage Trends

Leaky ReLU has gained traction as neural networks have grown deeper, with applications in computer vision, language processing, and gaming. It’s often preferred in complex models where neurons need to keep learning throughout training, as it reduces the chance of inactive neurons compared to standard ReLU.

Leaky ReLU Usage

- Formal/Technical Tagging:

- Machine Learning

- Neural Networks

- Activation Function

- Typical Collocations:

- "Leaky ReLU function"

- "activation layer with Leaky ReLU"

- "preventing dead neurons with Leaky ReLU"

- "gradient flow in Leaky ReLU"

Leaky ReLU Examples in Context

- In image recognition models, Leaky ReLU activation functions are used so that the model keeps learning through small gradients, even when a neuron’s input to the activation is negative.

- Leaky ReLU layers can be added to prevent information loss in speech recognition networks, enhancing the model’s ability to process varying sound frequencies.

- In game development, Leaky ReLU is integrated into reinforcement learning models to optimize decision-making algorithms, even with complex input data.

Leaky ReLU FAQ

- What is Leaky ReLU?

Leaky ReLU is an activation function that allows a small gradient for negative inputs, unlike the standard ReLU.

- Why is Leaky ReLU used in deep learning?

It helps avoid the “dying ReLU” problem by allowing slight gradients, aiding in more effective learning.

- How does Leaky ReLU differ from ReLU?

Leaky ReLU has a small slope for negative values, while ReLU outputs zero for any negative input.

- When is Leaky ReLU better than ReLU?

It’s preferred in deep networks where dead neurons may affect performance due to many negative inputs.

- Is Leaky ReLU suitable for all layers?

It’s generally used in hidden layers but not as commonly in output layers.

- What is the slope of Leaky ReLU?

It typically has a slope of 0.01 for negative values, but this can vary.

- Can Leaky ReLU reduce model accuracy?

Not typically, though its small slope might be less effective in some tasks, depending on the data.

- Is Leaky ReLU computationally expensive?

It’s slightly more complex than ReLU but generally does not impact model speed significantly.

- What are the alternatives to Leaky ReLU?

Alternatives include ELU (Exponential Linear Unit) and PReLU (Parametric ReLU).

- How is Leaky ReLU implemented?

It’s implemented as a simple layer in most neural network libraries like TensorFlow and PyTorch.
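To make the last few answers concrete, here is a minimal plain-Python sketch of both functions. It is not how any particular library implements them; the function names and the `negative_slope=0.01` default are assumptions chosen for illustration, following the typical slope mentioned above.

```python
def relu(x):
    """Standard ReLU: negative inputs are clamped to zero."""
    return x if x > 0 else 0.0


def leaky_relu(x, negative_slope=0.01):
    """Leaky ReLU: negative inputs are scaled by a small slope instead of zeroed."""
    return x if x > 0 else negative_slope * x


inputs = [-3.0, -0.5, 0.0, 2.0]

relu_out = [relu(v) for v in inputs]
leaky_out = [leaky_relu(v) for v in inputs]

# Print the two activations side by side for each input.
for v, r, l in zip(inputs, relu_out, leaky_out):
    print(f"x={v:5.1f}  relu={r:6.3f}  leaky_relu={l:6.3f}")
```

Positive inputs pass through both functions unchanged; only the negative side differs. In practice you would normally use the library's built-in layer rather than a hand-written function. PyTorch, for example, provides `torch.nn.LeakyReLU` with a `negative_slope` argument.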

Leaky ReLU Related Words

- Categories/Topics:

- Deep Learning

- Activation Functions

- Neural Networks

Did you know?

Leaky ReLU is widely used in image recognition models, where it supports a more resilient learning process. Because it helps prevent neuron "death," it is valued in modern AI models as a way to train deep networks without large numbers of units going inactive.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
