Gradient Clipping

Quick Navigation:

- Gradient Clipping Definition

- Gradient Clipping Explained Easy

- Gradient Clipping Origin

- Gradient Clipping Etymology

- Gradient Clipping Usage Trends

- Gradient Clipping Usage

- Gradient Clipping Examples in Context

- Gradient Clipping FAQ

- Gradient Clipping Related Words

Gradient Clipping Definition

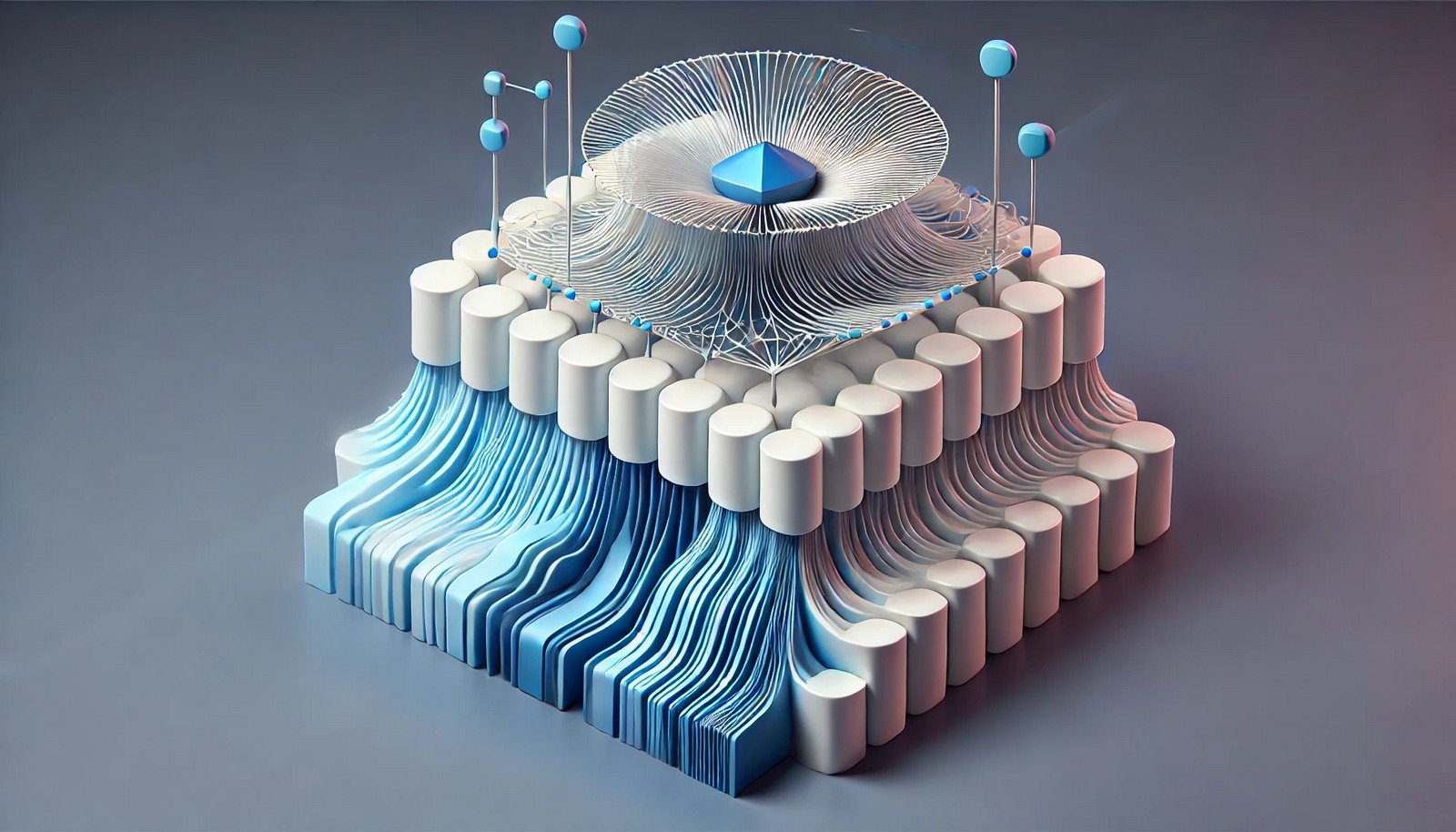

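Gradient Clipping is a technique used in deep learning to limit the size of gradients during backpropagation. It prevents gradients from becoming excessively large, a problem known as "exploding gradients." When gradients exceed a predefined threshold, the technique does one of two things. It can cap each gradient value at that threshold (clipping by value), or it can scale the whole gradient down so its overall size, or norm, equals the threshold (clipping by norm). Either way, a single oversized update cannot destabilize the neural network during training. It’s especially useful in deep networks and recurrent neural networks (RNNs), where unbounded gradients can push weights to extreme or non-numeric (NaN) values and cause training to fail.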
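The two common ways of applying a clipping threshold can be sketched in plain Python. This is a minimal illustration that assumes the gradients have already been flattened into one list of floats. The function names clip_by_value and clip_by_norm are hypothetical. Real frameworks such as PyTorch or TensorFlow operate on tensors and differ in details, for example by adding a small epsilon to the norm.

```python
import math
from typing import List


def clip_by_value(grads: List[float], clip_value: float) -> List[float]:
    """Clamp every gradient component into [-clip_value, clip_value]."""
    return [max(-clip_value, min(clip_value, g)) for g in grads]


def clip_by_norm(grads: List[float], max_norm: float) -> List[float]:
    """Rescale the whole gradient vector so its L2 norm is at most max_norm.

    Treating all gradients as one vector corresponds to "global norm" clipping.
    Unlike value clipping, this keeps the gradient's direction unchanged.
    """
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads)  # already within the threshold: leave unchanged
    scale = max_norm / total_norm
    return [g * scale for g in grads]


grads = [3.0, 4.0]  # L2 norm = 5.0
print(clip_by_value(grads, 1.0))  # [1.0, 1.0]
print(clip_by_norm(grads, 1.0))   # approximately [0.6, 0.8]
```

Value clipping can change the direction of the gradient: here [3.0, 4.0] becomes [1.0, 1.0]. Norm clipping only shortens the vector, which is one reason norm-based clipping is widely used.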

Gradient Clipping Explained Easy

Imagine you’re pouring water into a cup. If you pour too much, the water spills over. Gradient Clipping is like limiting the water flow so it doesn’t overflow the cup. In deep learning, it stops numbers (gradients) from getting too big and messing up the training.

Gradient Clipping Origin

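Gradient Clipping emerged from research into the difficulties of training deep networks, particularly recurrent neural networks trained on long sequences. Norm-based clipping was popularized by Pascanu, Mikolov, and Bengio in their 2013 paper "On the difficulty of training recurrent neural networks." The technique became more widely used as neural networks grew deeper and more complex, making gradient control essential.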

Gradient Clipping Etymology

The term “Gradient Clipping” comes from “clipping,” meaning to trim or cut. Here, it refers to capping gradients at a maximum value so they cannot grow large enough to destabilize training.

Gradient Clipping Usage Trends

Over the past decade, Gradient Clipping has become more widely adopted as deep learning models have advanced. It’s a standard technique in training complex models, particularly in fields like natural language processing, where sequence length can lead to large gradients, and in reinforcement learning, which relies heavily on gradient-based optimization.

Gradient Clipping Usage

- Formal/Technical Tagging:

- Deep Learning

- Neural Network Training

- Gradient Descent

- Typical Collocations:

- "gradient clipping technique"

- "control exploding gradients"

- "gradient thresholding"

- "training stability with gradient clipping"

Gradient Clipping Examples in Context

- In training an RNN for language translation, Gradient Clipping prevents model instability by limiting gradient values.

- For a deep neural network used in image recognition, Gradient Clipping can help stabilize training by keeping unusually large gradients from producing oversized weight updates.

- Reinforcement learning algorithms often apply Gradient Clipping to handle large, fluctuating gradient values from complex reward functions.
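The instability described in these examples shows up even in a deliberately tiny toy problem. The setup below is hypothetical and not taken from any of the applications above: one weight, the loss f(w) = w**4, and plain gradient descent. The function name train_toy and the numbers are arbitrary illustrative choices: a start of 3.0, a learning rate of 0.1, and a threshold of 1.0.

```python
from typing import Optional


def train_toy(w: float, lr: float, steps: int, max_norm: Optional[float] = None) -> float:
    """Plain gradient descent on the toy loss f(w) = w**4, optionally with clipping."""
    for _ in range(steps):
        grad = 4.0 * w ** 3  # derivative of w**4; grows very fast as |w| grows
        if max_norm is not None and abs(grad) > max_norm:
            # For a single parameter, norm clipping just caps |grad| at max_norm
            grad = max_norm if grad > 0 else -max_norm
        w = w - lr * grad
        if abs(w) > 1e6:  # stop early once the weight has clearly blown up
            break
    return w


print(train_toy(3.0, lr=0.1, steps=100))                # diverges: |w| ends above 1e6
print(train_toy(3.0, lr=0.1, steps=100, max_norm=1.0))  # stays finite and moves toward 0
```

Without clipping, the first large gradient overshoots the minimum. Each overshoot then produces an even larger gradient, so the weight blows up within a few steps. With clipping, each early step moves w by at most lr * max_norm = 0.1, so the weight walks steadily toward the minimum at 0.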

Gradient Clipping FAQ

- What is Gradient Clipping?

Gradient Clipping is a method to limit the size of gradients during model training to prevent exploding gradients.

- Why is Gradient Clipping needed?

It helps stabilize training by capping excessive gradient values, preventing model divergence.

- How does Gradient Clipping work?

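It checks the gradients after they are computed and before the weights are updated. If they exceed a set threshold, it either caps each gradient value at the threshold (clipping by value) or scales all gradients down together so their overall norm equals the threshold (clipping by norm). This keeps the training process stable.

- Where is Gradient Clipping used?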

It’s commonly used in deep learning, especially in RNNs and reinforcement learning.

- What problems does Gradient Clipping solve?

It addresses the issue of exploding gradients, which can disrupt neural network training.

- Is Gradient Clipping only for deep networks?

While more common in deep networks, it can also be used in simpler models facing gradient explosion.

- How is Gradient Clipping implemented?

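Most training frameworks provide it as a built-in option. In PyTorch, it is a function call such as torch.nn.utils.clip_grad_norm_ or torch.nn.utils.clip_grad_value_. The call goes after loss.backward() and before optimizer.step(). In TensorFlow’s Keras API, optimizers accept clipnorm or clipvalue arguments.

- Does Gradient Clipping affect model accuracy?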

It primarily stabilizes training but can indirectly improve accuracy by preventing erratic updates.

- What is an exploding gradient?

An exploding gradient occurs when gradients become excessively large, leading to unstable model weights and training failure.

- Can Gradient Clipping be combined with other techniques?

Yes, it’s often used alongside other stabilization strategies, such as careful weight initialization, learning rate schedules, and normalization layers.
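The FAQ answer on exploding gradients can be made concrete with a toy recurrence, h_t = w * h_{t-1}. This is a hypothetical, single-number stand-in for an RNN, not a real model. Backpropagating through T time steps multiplies the gradient by w once per step. The function name bptt_gradient and the threshold CLIP = 5.0 are illustrative assumptions.

```python
def bptt_gradient(w: float, steps: int) -> float:
    """Return d h_T / d h_0 for the toy recurrence h_t = w * h_{t-1}.

    Backpropagation through time applies the chain rule once per step,
    multiplying by dh_t/dh_{t-1} = w each time, so the result is w ** steps.
    """
    grad = 1.0
    for _ in range(steps):
        grad *= w  # one chain-rule factor per time step
    return grad


CLIP = 5.0  # arbitrary illustrative threshold

for steps in (10, 50, 100):
    raw = bptt_gradient(1.5, steps)
    clipped = max(-CLIP, min(CLIP, raw))  # value clipping caps the magnitude
    print(f"T={steps}: raw gradient {raw:.3g}, clipped {clipped}")
```

With |w| > 1, the raw gradient grows exponentially with sequence length, while the clipped value never exceeds the threshold. With |w| < 1, the same product shrinks toward zero instead (vanishing gradients). Clipping does not fix that problem; architectures such as LSTMs were designed with it in mind.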

Gradient Clipping Related Words

- Categories/Topics:

- Deep Learning

- Gradient Descent

- Neural Networks

Did you know?

Gradient Clipping was pivotal in advancing NLP models like LSTMs, which handle long text sequences. Without it, exploding gradients could cause many of these models to fail during training, making tasks like language translation unreliable.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun, handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
