Cross-Entropy Loss

Cross-Entropy Loss Definition

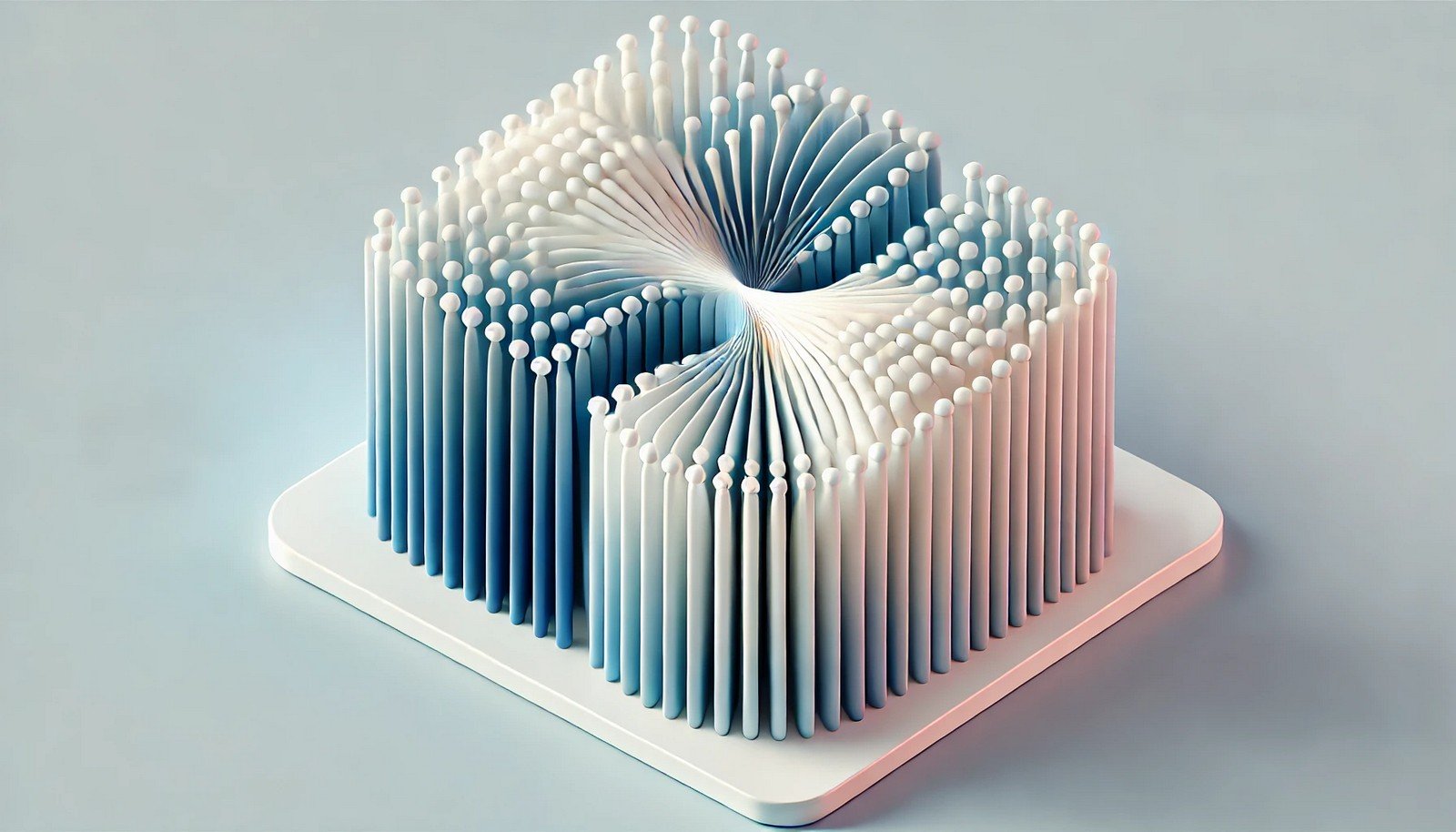

Cross-Entropy Loss is a loss function used in machine learning, most often in classification problems, that quantifies the error between predicted probabilities and the actual class labels. Mathematically, it is the negative logarithm of the probability the model assigns to the true class, so a confident but wrong prediction is penalized far more heavily than a hesitant one. Minimizing this loss guides neural networks and deep learning models toward more accurate predictions, which makes it one of the most widely used loss functions in supervised learning.
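To make the definition concrete, here is a minimal sketch in plain Python. The function name `cross_entropy`, the `eps` floor, and the example probabilities are illustrative assumptions, not part of any particular library.

```python
import math

def cross_entropy(true_dist, predicted_dist, eps=1e-12):
    """Cross-entropy between a true distribution and a predicted one.

    true_dist      -- list of true probabilities (one-hot for a class label)
    predicted_dist -- list of predicted probabilities, same length
    eps            -- small floor that avoids log(0); an illustrative choice
    """
    return -sum(
        t * math.log(max(p, eps))
        for t, p in zip(true_dist, predicted_dist)
    )

# Three classes; the correct class is the first one (one-hot label).
label = [1.0, 0.0, 0.0]

confident_right = cross_entropy(label, [0.90, 0.05, 0.05])
unsure = cross_entropy(label, [0.40, 0.30, 0.30])
confident_wrong = cross_entropy(label, [0.05, 0.90, 0.05])

print(f"confident and right: {confident_right:.3f}")
print(f"unsure:              {unsure:.3f}")
print(f"confident and wrong: {confident_wrong:.3f}")
```

The loss grows as the probability given to the correct class shrinks, so the confident wrong prediction scores worst of the three.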

Cross-Entropy Loss Explained Easy

Imagine playing a guessing game where you’re supposed to guess the chances of drawing a specific colored marble from a bag. If you guess one color with high confidence but it turns out to be another, you lose points. Cross-Entropy Loss is like that scoring system – it checks how confident you are in your guess and penalizes you for being far from the correct answer.
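The marble game can be sketched as a tiny scoring table. The three guesses and their probabilities below are made up for illustration; the only rule taken from cross-entropy is that points lost equal the negative log of the chance you gave to the colour that was actually drawn.

```python
import math

# Hypothetical guesses: the probability each player assigned to the
# colour that was actually drawn from the bag.
guesses = {
    "very confident and right": 0.95,
    "unsure": 0.50,
    "very confident and wrong": 0.05,
}

# Points lost = -log(probability given to the colour that was drawn).
penalties = {name: -math.log(p) for name, p in guesses.items()}

for name, penalty in penalties.items():
    print(f"{name}: {penalty:.2f} points lost")
```

The player who was sure of the wrong colour loses many times more points than the one who hedged.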

Cross-Entropy Loss Origin

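Cross-Entropy Loss has roots in information theory, where cross-entropy measures the average number of bits needed to encode events from one probability distribution using a code optimized for another, a loose notion of "distance" between the two. Claude Shannon’s information theory laid the groundwork, and the measure has since been adapted as a loss function for machine learning.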

Cross-Entropy Loss Etymology

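The term combines “entropy,” the information-theory measure of uncertainty in a probability distribution, with “cross,” which signals that the measure is taken across two distributions: the true one and the predicted one.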

Cross-Entropy Loss Usage Trends

With the rise of neural networks and deep learning, Cross-Entropy Loss has become a standard training objective for classification models in areas like image recognition, speech analysis, and natural language processing. As models become more complex, it remains widely relied upon for optimizing them.

Cross-Entropy Loss Usage

- Formal/Technical Tagging:

- Machine Learning

- Deep Learning

- Loss Functions

- Typical Collocations:

- "minimizing cross-entropy loss"

- "cross-entropy for classification"

- "binary cross-entropy"

- "multi-class cross-entropy"

Cross-Entropy Loss Examples in Context

- Cross-Entropy Loss helps guide a neural network in distinguishing between different animal species in an image dataset.

- In sentiment analysis, Cross-Entropy Loss penalizes the model when it misclassifies positive or negative sentiments.

- Speech recognition systems use Cross-Entropy Loss to improve their transcription accuracy by comparing predicted words with the actual spoken words.

Cross-Entropy Loss FAQ

- What is Cross-Entropy Loss?

Cross-Entropy Loss is a machine learning loss function that measures the error between predicted and actual class distributions.

- Why is Cross-Entropy Loss important in machine learning?

It guides models to improve prediction accuracy by penalizing wrong predictions.

- How does Cross-Entropy Loss work in classification?

It compares the predicted probabilities of each class to the actual class, calculating a score that grows as the probability assigned to the correct class shrinks.

- What’s the difference between binary and multi-class Cross-Entropy Loss?

Binary Cross-Entropy is for two-class problems, while multi-class is for cases with more than two classes.

- Is Cross-Entropy Loss used in deep learning?

Yes, it’s essential for training neural networks in classification tasks.

- How does Cross-Entropy Loss compare with MSE (Mean Squared Error)?

Cross-Entropy Loss is better suited for classification, while MSE is common in regression.

- Does Cross-Entropy Loss work with unbalanced datasets?

Yes, but it may require adjustment or weighting to handle class imbalance effectively.

- Can Cross-Entropy Loss handle probability distributions?

Yes, it’s designed to measure differences between probability distributions.

- Why do neural networks often use Cross-Entropy Loss?

It penalizes predictions that give low probability to the correct class, helping the network improve its accuracy on complex tasks.

- What optimizations use Cross-Entropy Loss?

Gradient descent and its variants often minimize Cross-Entropy Loss to optimize model performance, using backpropagation to compute the gradients.
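Two of the answers above, binary versus multi-class and class weighting, can be sketched in a few lines of plain Python. The function names, the `class_weights` parameter, and the weight of 5 are hypothetical choices made for this example, not the API of any specific library.

```python
import math

def binary_cross_entropy(p_positive, label, eps=1e-12):
    """Loss for one two-class example; label is 0 or 1."""
    p = min(max(p_positive, eps), 1 - eps)  # keep log() away from 0
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))

def multiclass_cross_entropy(probs, true_index, class_weights=None, eps=1e-12):
    """Loss for one example with any number of classes.

    class_weights is an optional, hypothetical per-class multiplier that
    makes mistakes on rare classes count for more.
    """
    weight = 1.0 if class_weights is None else class_weights[true_index]
    return -weight * math.log(max(probs[true_index], eps))

# With two classes, both formulas give the same loss.
bce = binary_cross_entropy(0.8, 1)
cce = multiclass_cross_entropy([0.2, 0.8], 1)

# Weighting: class 1 is assumed to be rare, so its loss is scaled by 5.
weighted = multiclass_cross_entropy([0.2, 0.8], 1, class_weights=[1.0, 5.0])

print(f"binary: {bce:.4f}  multi-class: {cce:.4f}  weighted: {weighted:.4f}")
```

In this sketch the binary form is just the two-class special case of the multi-class form, and weighting simply multiplies the loss for examples of the chosen class.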

Cross-Entropy Loss Related Words

- Categories/Topics:

- Neural Networks

- Information Theory

- Predictive Modeling

Did you know?

Cross-Entropy Loss plays a pivotal role in training natural language processing models like GPT. The model is penalized according to how little probability it gave to the word that actually came next, and minimizing that penalty over large amounts of text leads to more fluent and contextually accurate responses in text generation tasks.
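As a rough illustration of next-word prediction scoring, the sketch below averages the cross-entropy over a three-word sequence. The four-word vocabulary, the probabilities, and the function name `average_next_word_loss` are all made up for this example; real language models work with far larger vocabularies and their own tooling.

```python
import math

# Hypothetical model output: for each position, the probabilities the
# model assigned to each word in a tiny made-up vocabulary.
vocab = ["the", "cat", "sat", "mat"]
predictions = [
    [0.70, 0.10, 0.10, 0.10],  # model strongly expects "the"
    [0.10, 0.60, 0.20, 0.10],  # model expects "cat"
    [0.25, 0.25, 0.25, 0.25],  # model has no preference
]
actual_next_words = ["the", "cat", "sat"]

def average_next_word_loss(predictions, targets, vocab):
    """Mean of -log(probability assigned to the word that actually came next)."""
    losses = [
        -math.log(probs[vocab.index(word)])
        for probs, word in zip(predictions, targets)
    ]
    return sum(losses) / len(losses)

loss = average_next_word_loss(predictions, actual_next_words, vocab)
print(f"average cross-entropy per word: {loss:.3f}")
```

A lower average means the model tended to put more probability on the words that really followed.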

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
