Spectral Normalization

Spectral Normalization Definition

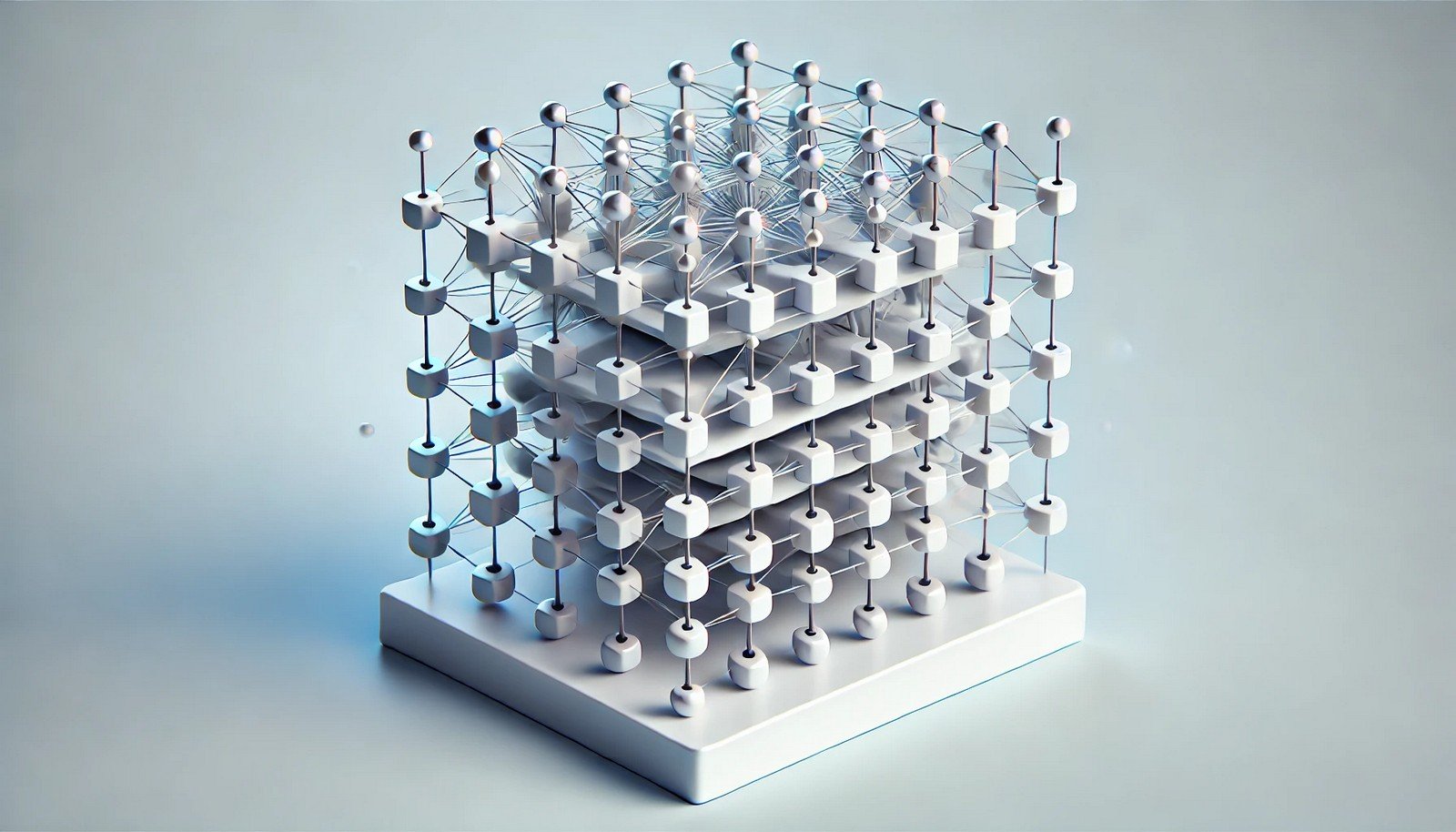

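Spectral normalization is a regularization technique for neural networks that controls the spectral norm of each weight matrix, that is, its largest singular value. It divides each weight matrix by its spectral norm so that the normalized matrix has spectral norm 1. The spectral norm is the largest factor by which a layer can stretch its input. Normalizing it therefore bounds the Lipschitz constant of each layer, and of a stack of such layers joined by 1-Lipschitz activations such as ReLU. This keeps gradients from growing uncontrollably and stabilizes training. The technique is most closely associated with GANs (Generative Adversarial Networks), where it is usually applied to the discriminator to keep training stable and can improve the quality of generated samples. Bounding each layer's sensitivity to its input may also make models more robust to small input perturbations.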
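
A minimal sketch of the idea in plain Python, assuming the usual formulation in which W is divided by its spectral norm σ(W). This is not the original authors' code or any library's API. The helper names (`matvec`, `matvec_t`, `unit`, `spectral_norm`, `spectrally_normalize`) are hypothetical, and matrices are assumed to be lists of rows. σ(W) is estimated by power iteration rather than a full singular value decomposition, which is an assumption about how one would compute it.

```python
import math
import random


def matvec(w, v):
    """Return W @ v, where W is a list of rows (hypothetical helper)."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def matvec_t(w, u):
    """Return W^T @ u without building the transpose."""
    return [sum(w[i][j] * u[i] for i in range(len(w))) for j in range(len(w[0]))]


def unit(v, eps=1e-12):
    """Scale v to unit L2 norm; eps guards against division by zero."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / (n + eps) for x in v]


def spectral_norm(w, iters=50, seed=0):
    """Estimate sigma(W), the largest singular value of W, by power iteration."""
    if iters < 1:
        raise ValueError("iters must be at least 1")
    rng = random.Random(seed)
    u = unit([rng.gauss(0.0, 1.0) for _ in range(len(w))])  # random start, one entry per row
    for _ in range(iters):
        v = unit(matvec_t(w, u))  # estimate of the top right singular vector
        u = unit(matvec(w, v))    # estimate of the top left singular vector
    return sum(ui * x for ui, x in zip(u, matvec(w, v)))  # sigma ~= u^T W v


def spectrally_normalize(w, iters=50):
    """Return W / sigma(W); its largest singular value is then (approximately) 1."""
    sigma = spectral_norm(w, iters)
    return [[wij / sigma for wij in row] for row in w]


w = [[1.0, 2.0], [3.0, 4.0]]
print(spectral_norm(w))                        # approx 5.465
print(spectral_norm(spectrally_normalize(w)))  # approx 1.0
```

Power iteration is used here because it needs only matrix-vector products. Its estimate never exceeds the true σ(W) and converges quickly when the top two singular values are well separated.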

Spectral Normalization Explained Easy

Imagine you’re learning how to stack blocks. If you stack them too high, they might fall over. Spectral normalization is like making sure each block isn’t too big so the stack stays stable. In a computer’s neural network, it keeps each layer from making its numbers too big, so learning stays steady instead of going wild!

Spectral Normalization Origin

Spectral normalization was introduced by Takeru Miyato and colleagues in “Spectral Normalization for Generative Adversarial Networks” (ICLR 2018). It was proposed as a way to stabilize the training of generative adversarial networks (GANs) by normalizing the weights of the discriminator. It grew out of efforts to control the discriminator’s Lipschitz constant, a recurring source of training instability in GANs.

Spectral Normalization Etymology

The term “spectral normalization” comes from the “spectral norm” of a matrix: its largest singular value, which measures the most the matrix can stretch any input vector. “Spectral” refers to the spectrum, the set of singular values (or eigenvalues) of a matrix.
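
Written out, the definitions behind the name look like this. The symbol \bar{W}_{\mathrm{SN}} for the normalized matrix is a notational choice made here, and W is assumed to be nonzero.

```latex
\sigma(W) \;=\; \max_{h \neq 0} \frac{\lVert W h \rVert_2}{\lVert h \rVert_2}
        \;=\; \sqrt{\lambda_{\max}\!\left(W^{\top} W\right)},
\qquad
\bar{W}_{\mathrm{SN}} \;=\; \frac{W}{\sigma(W)}
\;\;\Longrightarrow\;\;
\sigma\!\left(\bar{W}_{\mathrm{SN}}\right) = 1,
\qquad
\sigma(W) \;\le\; \lVert W \rVert_F \;=\; \Big(\sum_i \sigma_i^2\Big)^{1/2}.
```

For example, for W = diag(3, 1) the spectral norm is 3, so the normalized matrix is diag(1, 1/3), while the Frobenius norm is sqrt(10), about 3.16. The last inequality shows why penalizing the Frobenius norm, as L2 weight decay does, controls σ(W) only indirectly.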

Spectral Normalization Usage Trends

Spectral normalization has become increasingly popular in deep learning research, especially with the rise of GANs and other generative models. It is now widely used to stabilize adversarial training and to support reliable model convergence, with adoption across academia and industry.

Spectral Normalization Usage

- Formal/Technical Tagging:

  - Machine Learning

  - Deep Learning

  - Neural Networks

  - Generative Models

- Typical Collocations:

  - "apply spectral normalization"

  - "stabilize neural networks"

  - "control spectral norm"

  - "enhance GAN training"

Spectral Normalization Examples in Context

- In GAN training, spectral normalization is applied to the discriminator's weight matrices to keep their spectral norms from growing, which helps stabilize training.

- Spectral normalization can make a neural network less sensitive to noisy inputs, because it bounds how much the output can change in response to a small change in the input.

- Researchers sometimes apply spectral normalization as a regularizer in the hope of reducing overfitting and improving performance on unseen data.
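
To make the noisy-data example more concrete, the sketch below builds a tiny two-layer ReLU network with made-up (hypothetical) weights and spectrally normalizes each layer. It then measures the largest observed ratio ||f(x) - f(y)|| / ||x - y|| over random input pairs. Each normalized layer has spectral norm 1 and ReLU never increases distances, so that ratio cannot exceed 1, up to floating-point error. This is a plain-Python illustration under those assumptions, not a GAN implementation; all function names are hypothetical.

```python
import math
import random


def matvec(w, v):
    return [sum(a * b for a, b in zip(row, v)) for row in w]


def matvec_t(w, u):
    return [sum(w[i][j] * u[i] for i in range(len(w))) for j in range(len(w[0]))]


def unit(v, eps=1e-12):
    n = math.sqrt(sum(x * x for x in v))
    return [x / (n + eps) for x in v]


def spectrally_normalize(w, iters=100, seed=0):
    """Divide W by a power-iteration estimate of its largest singular value."""
    rng = random.Random(seed)
    u = unit([rng.gauss(0.0, 1.0) for _ in range(len(w))])
    for _ in range(iters):
        v = unit(matvec_t(w, u))
        u = unit(matvec(w, v))
    sigma = sum(a * b for a, b in zip(u, matvec(w, v)))
    return [[x / sigma for x in row] for row in w]


def forward(layers, x):
    """Linear layers with ReLU between them (none after the last layer)."""
    h = x
    for k, w in enumerate(layers):
        h = matvec(w, h)
        if k < len(layers) - 1:
            h = [max(0.0, z) for z in h]  # ReLU is 1-Lipschitz
    return h


def dist(a, b):
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def max_ratio(layers, trials=2000, seed=1):
    """Largest observed ||f(x) - f(y)|| / ||x - y|| over random input pairs."""
    rng = random.Random(seed)
    dim = len(layers[0][0])
    worst = 0.0
    for _ in range(trials):
        x = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
        y = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
        worst = max(worst, dist(forward(layers, x), forward(layers, y)) / dist(x, y))
    return worst


# Hypothetical weights for a 3 -> 2 -> 1 network.
W1 = [[1.0, 2.0, 0.5], [0.0, 1.5, -1.0]]
W2 = [[2.0, -1.0]]

raw = max_ratio([W1, W2])
normed = max_ratio([spectrally_normalize(W1), spectrally_normalize(W2)])
print(f"unnormalized network: max ratio {raw:.3f}")
print(f"spectrally normalized: max ratio {normed:.3f} (never above 1)")
```

ReLU is positively homogeneous, so the unnormalized network here is exactly the normalized one scaled by the product of the two estimated spectral norms. Its worst-case ratio is larger by that same factor.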

Spectral Normalization FAQ

- What is spectral normalization?

Spectral normalization is a regularization technique that constrains the largest singular value (the spectral norm) of each weight matrix in a neural network.

- Why is spectral normalization important?

It makes training more stable by bounding how much each layer can amplify its input, which helps keep gradients from exploding, especially in GANs.

- How does spectral normalization work?

It divides each weight matrix by its spectral norm, its largest singular value, so the normalized matrix has spectral norm 1. Implementations usually avoid a full singular value decomposition. Instead they estimate the spectral norm with power iteration, often running one iteration per training step and reusing the vector from the previous step.

- Where is spectral normalization commonly used?

Primarily in generative adversarial networks (GANs), most often in the discriminator, and in other architectures that benefit from controlled training dynamics.

- Does spectral normalization affect model performance?

Often, yes. It can improve training stability and, in GANs, the quality of generated samples, though the size of the benefit depends on the architecture and task.

- Is spectral normalization specific to GANs?

No. It can be applied in any neural network where stable, controlled training or bounded sensitivity to input changes is desirable, though it is especially common in GANs.

- What happens without spectral normalization?

Models that are prone to instability, such as GANs, may train less reliably, converge poorly, or generalize worse unless another stabilization method is used.

- Can spectral normalization prevent overfitting?

It can help reduce overfitting in some settings by limiting how sharply the network's output can change with its input. It does not guarantee this, and it is used mainly for stability rather than as a primary defense against overfitting.

- How does spectral normalization compare to other regularization methods?

L2 regularization (weight decay) penalizes the overall size of all weights, and dropout randomly zeroes activations during training. Spectral normalization instead targets only the largest singular value of each weight matrix, rescaling the matrix so that this value equals 1.

- Does spectral normalization slow down training?

It adds a small amount of computation per step, typically one power-iteration step per normalized layer. In return, it can lead to faster and more reliable convergence.
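
The answers on how the method works and what it costs can be illustrated with a persistent power-iteration sketch. It assumes the common approach of estimating σ(W) by power iteration. A hypothetical `SpectralNormState` object keeps the vector `u` between training steps and runs a single iteration per step, so the estimate sharpens over time at little cost per step. Weight updates are omitted to keep the example short, and W is held fixed. This is an assumption-laden sketch, not any framework's implementation.

```python
import math
import random


def matvec(w, v):
    return [sum(a * b for a, b in zip(row, v)) for row in w]


def matvec_t(w, u):
    return [sum(w[i][j] * u[i] for i in range(len(w))) for j in range(len(w[0]))]


def unit(v, eps=1e-12):
    n = math.sqrt(sum(x * x for x in v))
    return [x / (n + eps) for x in v]


class SpectralNormState:
    """Hypothetical helper: stores the power-iteration vector u across training steps."""

    def __init__(self, num_rows, seed=0):
        rng = random.Random(seed)
        self.u = unit([rng.gauss(0.0, 1.0) for _ in range(num_rows)])

    def step(self, w):
        """Run ONE power-iteration step on the current W; return (W / sigma, sigma)."""
        v = unit(matvec_t(w, self.u))
        self.u = unit(matvec(w, v))  # kept for the next call, so the work is amortized
        sigma = sum(a * b for a, b in zip(self.u, matvec(w, v)))
        return [[x / sigma for x in row] for row in w], sigma


w = [[1.0, 2.0], [3.0, 4.0]]
state = SpectralNormState(num_rows=len(w))
history = []
for t in range(5):
    # A real training loop would apply an optimizer update to w here; it is held
    # fixed in this sketch so the convergence of the estimate is easy to see.
    w_sn, sigma = state.step(w)
    history.append(sigma)
    print(f"step {t}: sigma estimate {sigma:.6f}")
```

Because weights usually change only slightly between updates, reusing `u` lets one iteration per step track σ(W) closely. If you use PyTorch, the comparable built-in is `torch.nn.utils.parametrizations.spectral_norm`, whose `n_power_iterations` argument defaults to 1; check the documentation for your version.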

Spectral Normalization Related Words

- Categories/Topics:

  - Neural Networks

  - Machine Learning

  - Deep Learning

  - GANs

  - Model Regularization

Did you know?

Spectral normalization became a key tool for stabilizing GAN training, including in large-scale, high-resolution image synthesis. Because it is simple to add and cheap to compute, it helped GANs train more reliably and produce more realistic images, advancing image-generation technology.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
