Feedforward Neural Networks (FNN)

Feedforward Neural Networks Definition

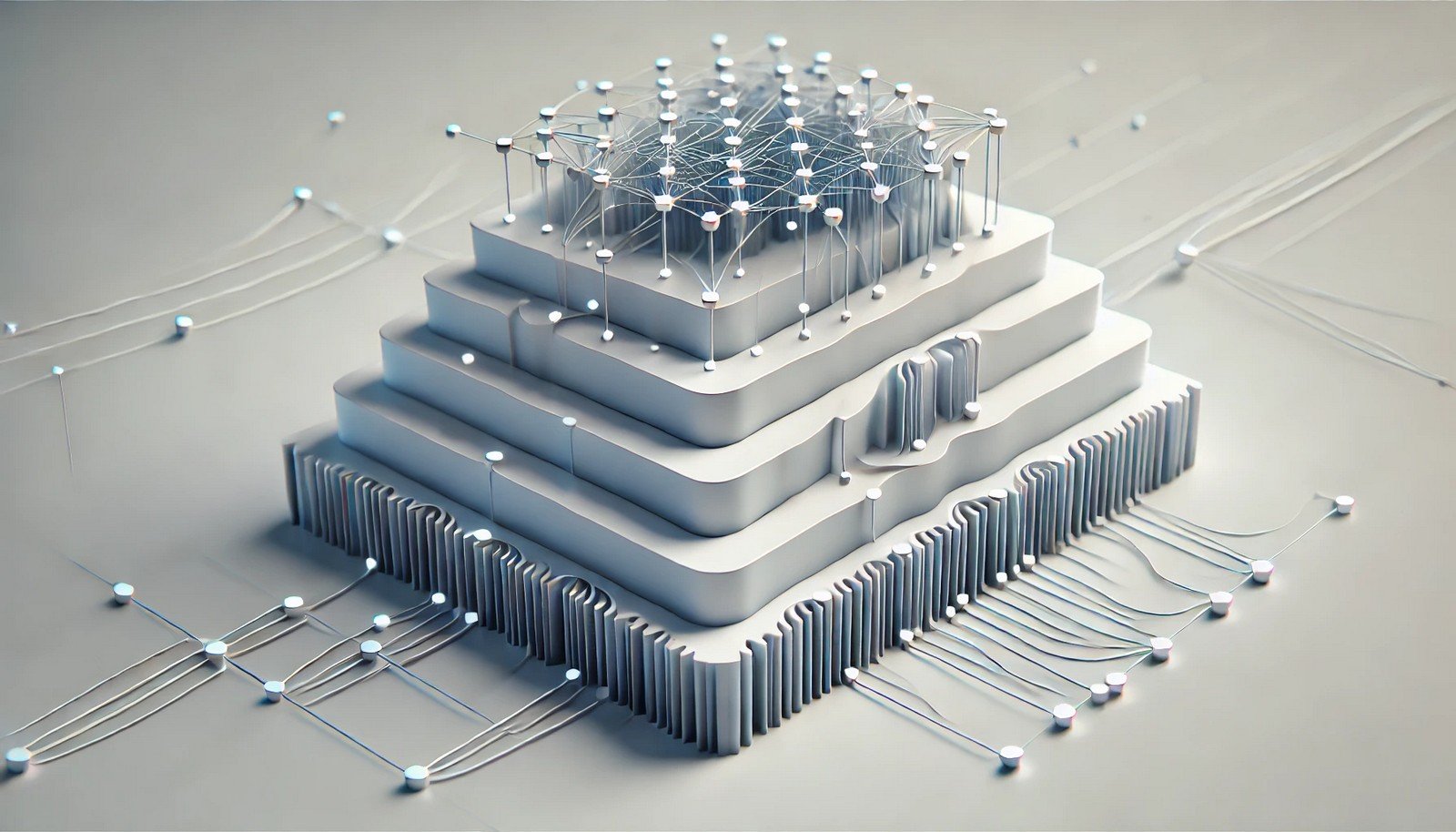

Feedforward Neural Networks are a fundamental type of artificial neural network in which information moves in one direction, from input nodes through hidden layers to output nodes, without any loops or cycles. They are often used for classification and regression tasks, including pattern recognition problems such as image and speech recognition. The goal is to approximate a function that maps inputs to desired outputs through a series of weighted connections. Each layer computes weighted sums of its inputs and passes them through an activation function, such as ReLU or sigmoid; these nonlinear functions are what allow the network to represent complex, nonlinear mappings.
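To make the definition concrete, here is a minimal sketch of a forward pass in plain Python. The layer sizes, the weights, and the helper names (`dense`, `forward`) are hypothetical choices made for illustration; real networks learn their weights from data and are usually built with a numerical library.

```python
import math

def relu(x):
    return max(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(weighted sum + bias) per output unit."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(x, layers):
    """Pass x through each layer in order; nothing ever flows backward."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x

# Hypothetical hand-picked weights (not learned):
# 2 inputs -> 2 hidden units (ReLU) -> 1 output unit (sigmoid)
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -0.5], relu),
    ([[1.0, -2.0]], [0.0], sigmoid),
]

output = forward([2.0, 1.0], layers)
# Hidden layer gives [1.0, 1.0], so the output is [sigmoid(-1.0)], about 0.269
print(output)
```

Each layer only reads the values produced by the layer before it, which is the one-way flow the definition describes.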

Feedforward Neural Networks Explained Easy

Imagine you have a series of rooms connected by doors, each door leading you closer to the end room. When you go through each door, you learn a bit more about the building. In Feedforward Neural Networks, information flows in one direction, like going room-to-room without turning back. Each "room" adds new clues that help the computer recognize patterns and make decisions at the end.

Feedforward Neural Networks Origin

The development of Feedforward Neural Networks can be traced back to the mid-20th century, when researchers were exploring computational models of how the human brain processes information. The perceptron, introduced in the 1950s, laid the groundwork for what would become modern neural networks. The field advanced significantly in the 1980s, when backpropagation was popularized as a practical way to train networks with hidden layers.

Feedforward Neural Networks Etymology

The term "feedforward" describes the one-way flow of information through the network, contrasting with architectures that allow feedback loops.
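The contrast can be illustrated with a small sketch. The two single-unit functions below and their weights (`w`, `u`) are hypothetical and exist only for illustration: the feedforward unit sees only the current input, while the feedback unit also receives its own previous output.

```python
import math

def feedforward_unit(x, w=0.8):
    """Output depends only on the current input."""
    return math.tanh(w * x)

def feedback_unit(x, state, w=0.8, u=0.5):
    """Output also depends on the previous output, fed back in as state."""
    return math.tanh(w * x + u * state)

inputs = [1.0, 1.0, 1.0]

# Feedforward: the same input always produces the same output
ff_outputs = [feedforward_unit(x) for x in inputs]

# Feedback: the loop carries state, so identical inputs give different outputs
fb_outputs, state = [], 0.0
for x in inputs:
    state = feedback_unit(x, state)
    fb_outputs.append(state)

print(ff_outputs)
print(fb_outputs)
```

With identical inputs, the feedforward outputs are all equal, whereas the feedback outputs change from step to step because each one depends on the step before it.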

Feedforward Neural Networks Usage Trends

Feedforward Neural Networks have remained consistently popular, especially in applications where quick predictions are critical, such as image classification and basic predictive models. As computational power has grown, they have become inexpensive to train and run, and their simplicity makes them a favored choice for preliminary or baseline models in machine learning pipelines.

Feedforward Neural Networks Usage

Formal/Technical Tagging:

- Artificial Intelligence

- Deep Learning

- Supervised Learning

Typical Collocations:

- "feedforward network model"

- "activation function in feedforward network"

- "training a feedforward neural network"

- "layer structure of feedforward network"

Feedforward Neural Networks Examples in Context

- Feedforward Neural Networks are widely used in handwritten digit recognition systems, helping to classify numbers in postal services.

- In speech recognition, Feedforward Neural Networks process spoken words into text by analyzing sound patterns.

- For basic image processing tasks, Feedforward Neural Networks help detect simple objects and patterns.

Feedforward Neural Networks FAQ

- What is a Feedforward Neural Network?

A type of network in which data flows in a single direction through layers, making it well suited to pattern recognition tasks.

- How does a Feedforward Neural Network work?

Information passes through input, hidden, and output layers without looping back. Each layer transforms the data it receives until the output layer produces the prediction.

- What is the main advantage of Feedforward Neural Networks?

They are simple and efficient, often requiring less computation than more complex architectures.

- Where are Feedforward Neural Networks used?

Common applications include image recognition, speech processing, and basic prediction models.

- What is the role of activation functions in these networks?

Activation functions introduce non-linearity, helping networks handle complex patterns.

- Can Feedforward Neural Networks learn sequences?

Not well; they are generally better suited to static data than to sequential or time-series data.

- What’s the difference between feedforward and feedback networks?

Feedforward networks allow only one-way data flow, while feedback networks, like RNNs, allow loops.

- Are Feedforward Neural Networks considered deep learning?

Yes, if they have multiple hidden layers.

- What is backpropagation in Feedforward Neural Networks?

It’s the training method that works out how much each weight contributed to the prediction error, so that the weights can be adjusted to reduce that error.

- Do Feedforward Neural Networks have any limitations?

They struggle with sequential data and often require large labeled datasets for effective training.
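The backpropagation answer above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a production training loop: the network has one sigmoid hidden layer and one sigmoid output, biases are omitted, the loss is the squared error, and the starting weights, the learning rate `lr`, and the single training example are all hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, w_hidden, w_out):
    """Forward pass: inputs -> sigmoid hidden layer -> sigmoid output (no biases)."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    y = sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, y

def train_step(x, target, w_hidden, w_out, lr=0.5):
    """One backpropagation + gradient-descent update on 0.5 * (y - target)**2."""
    hidden, y = predict(x, w_hidden, w_out)
    # Error signal at the output, using sigmoid'(z) = y * (1 - y)
    delta_out = (y - target) * y * (1.0 - y)
    # Propagate the error back through the output weights to each hidden unit
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w_out, hidden)]
    # Gradient descent: move each weight against its gradient
    new_w_out = [w - lr * delta_out * h for w, h in zip(w_out, hidden)]
    new_w_hidden = [
        [w - lr * d * xi for w, xi in zip(row, x)]
        for row, d in zip(w_hidden, delta_hidden)
    ]
    return new_w_hidden, new_w_out

def loss(x, target, w_hidden, w_out):
    return 0.5 * (predict(x, w_hidden, w_out)[1] - target) ** 2

# Hypothetical training example and starting weights
x, target = [1.0, 0.0], 1.0
w_hidden = [[0.1, -0.2], [0.4, 0.3]]
w_out = [0.2, -0.1]

loss_before = loss(x, target, w_hidden, w_out)
for _ in range(100):
    w_hidden, w_out = train_step(x, target, w_hidden, w_out)
loss_after = loss(x, target, w_hidden, w_out)

print(loss_before, loss_after)  # the loss should shrink as the weights are adjusted
```

Note that the error travels backward only during training; when the trained network makes a prediction, data still flows strictly forward.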

Feedforward Neural Networks Related Words

Categories/Topics:

- Machine Learning

- Artificial Intelligence

- Neural Networks

Did you know?

Feedforward Neural Networks were some of the earliest models used in AI research. Despite being overshadowed by more complex architectures in modern deep learning, they still serve as the foundation for understanding neural networks, and their simplicity makes them an essential learning model for beginners in machine learning.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun, handles image & video editing. Together, we run a YouTube channel that's focused on reviewing gadgets and explaining technology.
