Stochastic Gradient

Quick Navigation:

- Stochastic Gradient Definition

- Stochastic Gradient Explained Easy

- Stochastic Gradient Origin

- Stochastic Gradient Etymology

- Stochastic Gradient Usage Trends

- Stochastic Gradient Usage

- Stochastic Gradient Examples in Context

- Stochastic Gradient FAQ

- Stochastic Gradient Related Words

Stochastic Gradient Definition

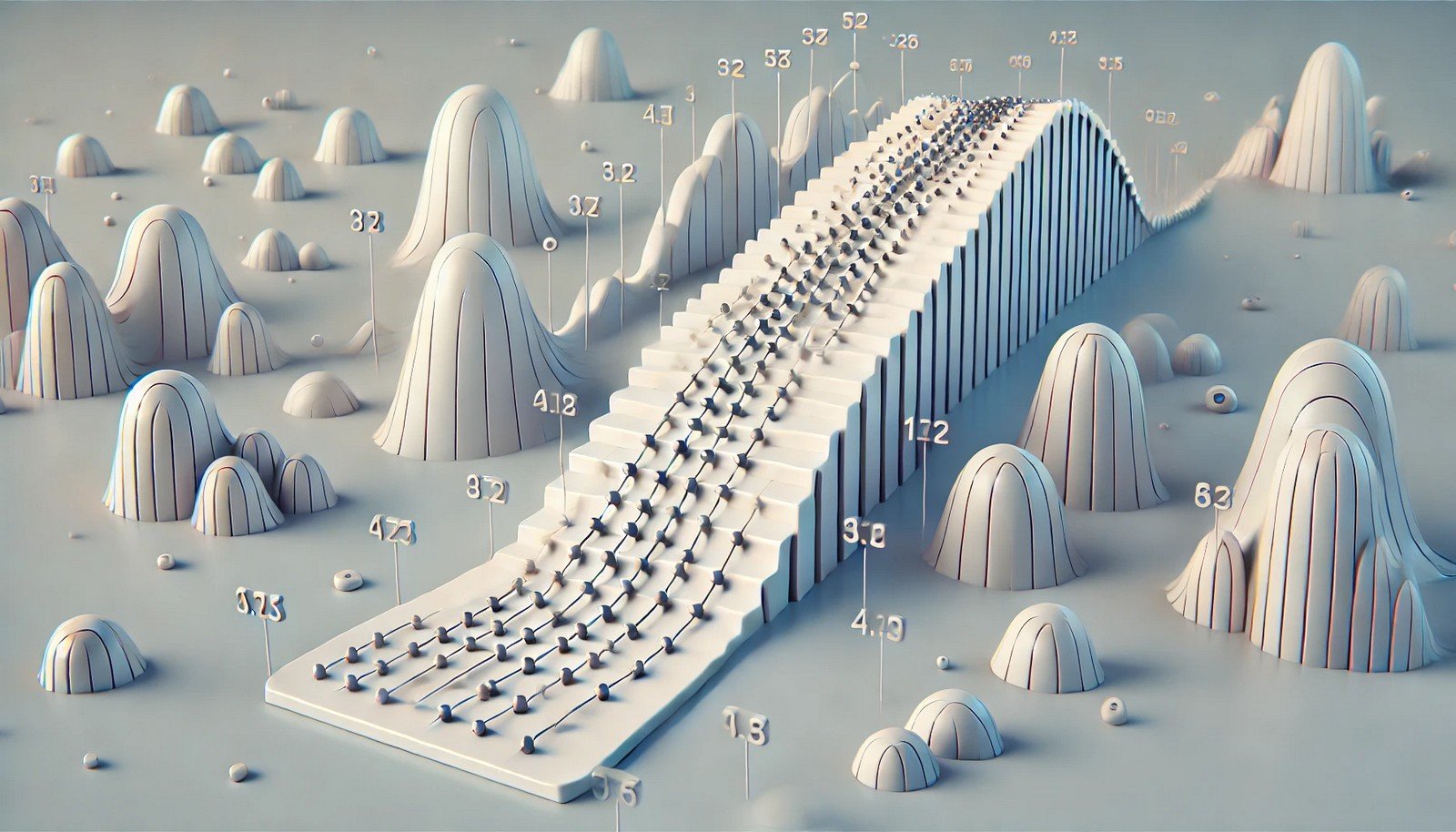

Stochastic Gradient, usually encountered as Stochastic Gradient Descent (SGD), is an optimization method used in machine learning to adjust model parameters iteratively so that prediction error decreases. Instead of computing the gradient over the full dataset, as batch gradient descent does, it estimates the gradient from a small random subset of the data (a single example or a mini-batch), which makes each update much cheaper. The estimate is noisy, so individual updates are less stable, but that noise can help the optimizer escape shallow local minima. Stochastic Gradient is widely used for training large models efficiently and is particularly popular in neural network training.
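The update described above can be written compactly. The notation here is an assumption made for illustration and does not come from the original entry: $\theta_t$ stands for the model parameters at step $t$, $\eta$ for the learning rate, $B_t$ for the randomly drawn batch, and $\ell$ for the loss on a single example $(x_i, y_i)$.

```latex
\theta_{t+1} = \theta_t - \eta \, \frac{1}{|B_t|} \sum_{i \in B_t} \nabla_\theta \, \ell(\theta_t;\, x_i, y_i)
```

When $B_t$ contains the whole dataset, this reduces to ordinary batch gradient descent; when it contains a single example, it is the classic one-sample form of the method.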

Stochastic Gradient Explained Easy

Think of it like playing a guessing game with a big group. Instead of getting hints from everyone, you pick a few people at random and use their hints to make your next guess. Each guess gets you closer to the right answer, even if it’s a bit jumpy. Stochastic Gradient does something similar; it uses random data points to keep learning without waiting for all the information.

Stochastic Gradient Origin

The technique of Stochastic Gradient Descent emerged from optimization studies in mathematics and computer science. It became widely recognized in machine learning during the rise of neural networks in the 1990s and early 2000s, where its efficiency in handling large datasets was crucial for model training.

Stochastic Gradient Etymology

The word "stochastic" comes from a Greek word meaning "guess" or "aim." In mathematics and probability, it refers to processes that involve randomness or uncertainty, which fits well with the nature of the Stochastic Gradient's random sampling method.

Stochastic Gradient Usage Trends

Stochastic Gradient has seen increased usage with the growth of deep learning and artificial intelligence, where model complexity and data volume make full-batch processing impractical. This method is now central to many machine learning frameworks, especially in areas like natural language processing and computer vision, where quick iterations are necessary to refine models effectively.

Stochastic Gradient Usage

- Formal/Technical Tagging:

- Optimization

- Machine Learning

- Gradient Descent

- Neural Networks

- Typical Collocations:

- "stochastic gradient update"

- "batch size in stochastic gradient"

- "stochastic gradient method"

- "SGD algorithm"

Stochastic Gradient Examples in Context

- A neural network model for image classification uses Stochastic Gradient to quickly adjust parameters as it processes new image data.

- During training, Stochastic Gradient helps in updating weights without having to process the entire dataset at once, saving computation time.

- Recurrent neural networks in natural language processing rely on Stochastic Gradient to fine-tune predictions across diverse language inputs.
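The weight updates mentioned in these examples can be sketched in a few lines of plain Python. This is a minimal illustration, not how any particular framework implements it: the function name `sgd_linear_fit`, the toy data, and the default learning rate, batch size, and epoch count are all assumptions chosen for the demo.

```python
import random


def sgd_linear_fit(xs, ys, learning_rate=0.05, batch_size=4, epochs=200, seed=0):
    """Fit y ~ w * x + b by mini-batch SGD on mean squared error.

    Hypothetical helper for illustration; all defaults are assumptions.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the data in a new random order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the mean squared error, computed on this batch only.
            grad_w = 0.0
            grad_b = 0.0
            for i in batch:
                error = (w * xs[i] + b) - ys[i]
                grad_w += 2 * error * xs[i]
                grad_b += 2 * error
            grad_w /= len(batch)
            grad_b /= len(batch)
            # Step against the gradient estimate, scaled by the learning rate.
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


# Toy, noise-free data generated from y = 2x + 1 (an assumption for the demo).
xs = [i / 10 for i in range(20)]
ys = [2.0 * x + 1.0 for x in xs]
w, b = sgd_linear_fit(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

Each update touches only `batch_size` examples, so its cost does not grow with the size of the dataset; on this toy problem the fitted `w` and `b` should end up close to 2 and 1.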

Stochastic Gradient FAQ

- What is Stochastic Gradient?

Stochastic Gradient is an optimization technique that minimizes error by updating model parameters using small, random samples of the data.

- How does it differ from regular Gradient Descent?

Unlike traditional Gradient Descent, which processes the full dataset on each iteration, Stochastic Gradient uses random batches, speeding up learning.

- Why is it popular in machine learning?

Its efficiency in handling large datasets makes it ideal for deep learning, especially in neural networks.

- What is a batch size in Stochastic Gradient?

Batch size is the number of data points used in each update step. It trades off the stability of each update against speed.

- How does it help in training neural networks?

It enables quicker parameter adjustments, which is crucial for training large models on extensive datasets.

- Can it cause unstable model updates?

Yes, due to its randomness, Stochastic Gradient updates can fluctuate, though this noise can help the optimizer escape local minima.

- Is Stochastic Gradient only for deep learning?

No, it’s used in various optimization tasks beyond deep learning, though it’s particularly valued there.

- What is "learning rate" in this context?

The learning rate is a parameter controlling how much to adjust model parameters at each step.

- Does Stochastic Gradient Descent always converge to a solution?

Not necessarily. Although it is effective in practice, convergence depends on factors like the learning rate and data quality.

- What are alternatives to Stochastic Gradient?

Alternatives include Batch Gradient Descent and Mini-Batch Gradient Descent, which differ in how much of the dataset is used per update.
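To make the batch-size and stability answers above more concrete, the following sketch measures how much the gradient estimate varies from one random batch to the next. It is an illustration under stated assumptions: the helper names `batch_gradient` and `gradient_spread`, the synthetic data, and the one-parameter model `y ~ w * x` are all made up for this demo.

```python
import random
import statistics


def batch_gradient(w, xs, ys, batch):
    """Gradient of mean squared error for y ~ w * x over the given indices."""
    return sum(2 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)


def gradient_spread(batch_size, xs, ys, w=0.0, trials=2000, seed=0):
    """Standard deviation of the gradient estimate across random batches.

    Hypothetical helper: draws `trials` batches without replacement and
    reports how much the estimated gradient at `w` varies between them.
    """
    rng = random.Random(seed)
    n = len(xs)
    estimates = [
        batch_gradient(w, xs, ys, rng.sample(range(n), batch_size))
        for _ in range(trials)
    ]
    return statistics.pstdev(estimates)


# Synthetic data: y = 3x plus a little noise (assumed for illustration).
data_rng = random.Random(1)
xs = [data_rng.uniform(-1, 1) for _ in range(200)]
ys = [3.0 * x + data_rng.gauss(0, 0.1) for x in xs]

spread_1 = gradient_spread(1, xs, ys)           # one example per update
spread_32 = gradient_spread(32, xs, ys)         # mini-batch
spread_full = gradient_spread(len(xs), xs, ys)  # full batch

print(spread_1, spread_32, spread_full)
```

The spread shrinks as the batch grows and is essentially zero for the full batch, which is the stability side of the trade-off; the speed side is that a batch of one costs a small fraction of the work of a full pass over the data.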

Stochastic Gradient Related Words

- Categories/Topics:

- Machine Learning

- Optimization

- Neural Networks

Did you know?

Stochastic Gradient was initially explored in the 1950s for statistical estimation, but it wasn't until the development of neural networks that its potential for large-scale data optimization was fully realized. Today, it's one of the foundational techniques for AI model training.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
