Cache Hierarchy

Cache Hierarchy Definition

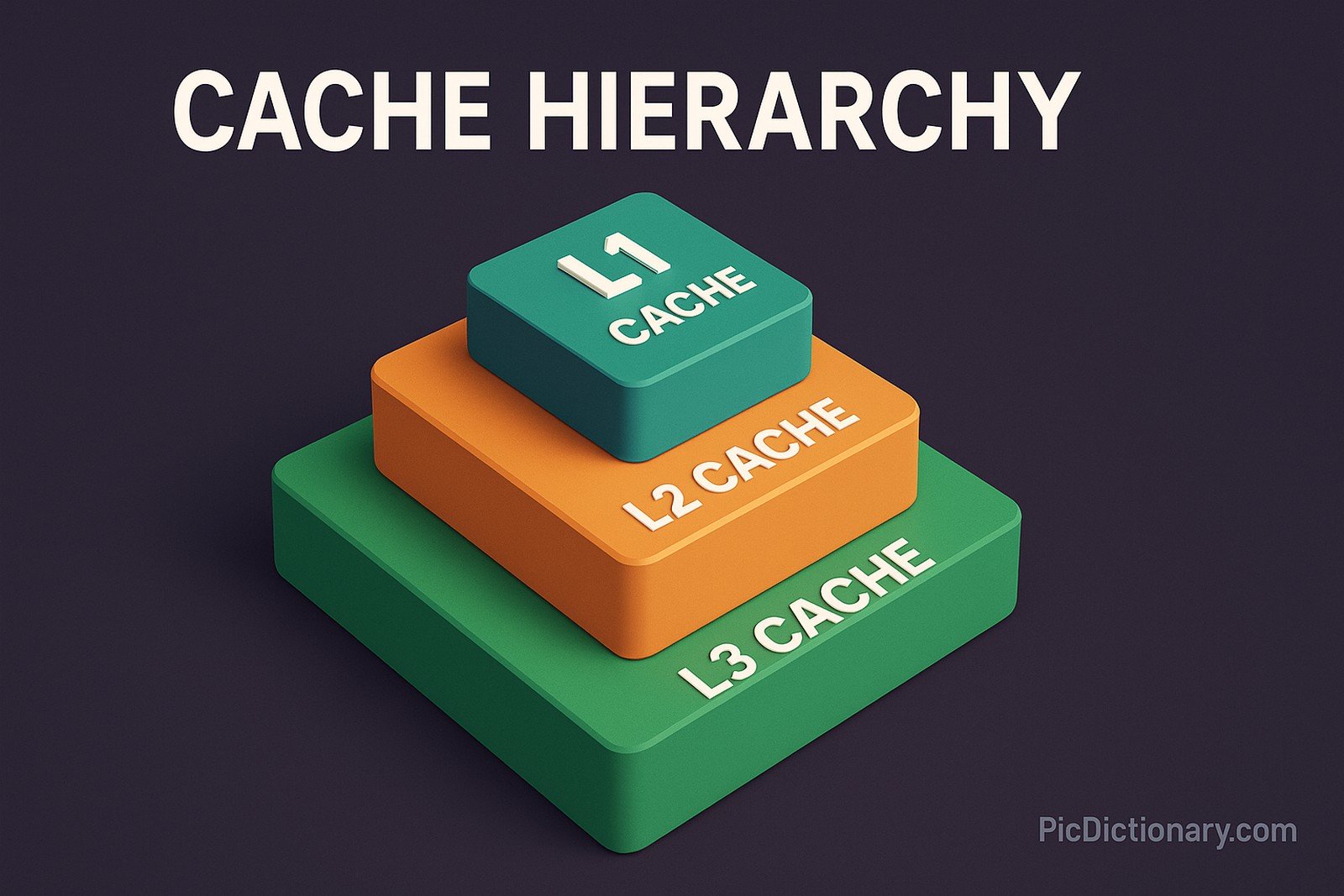

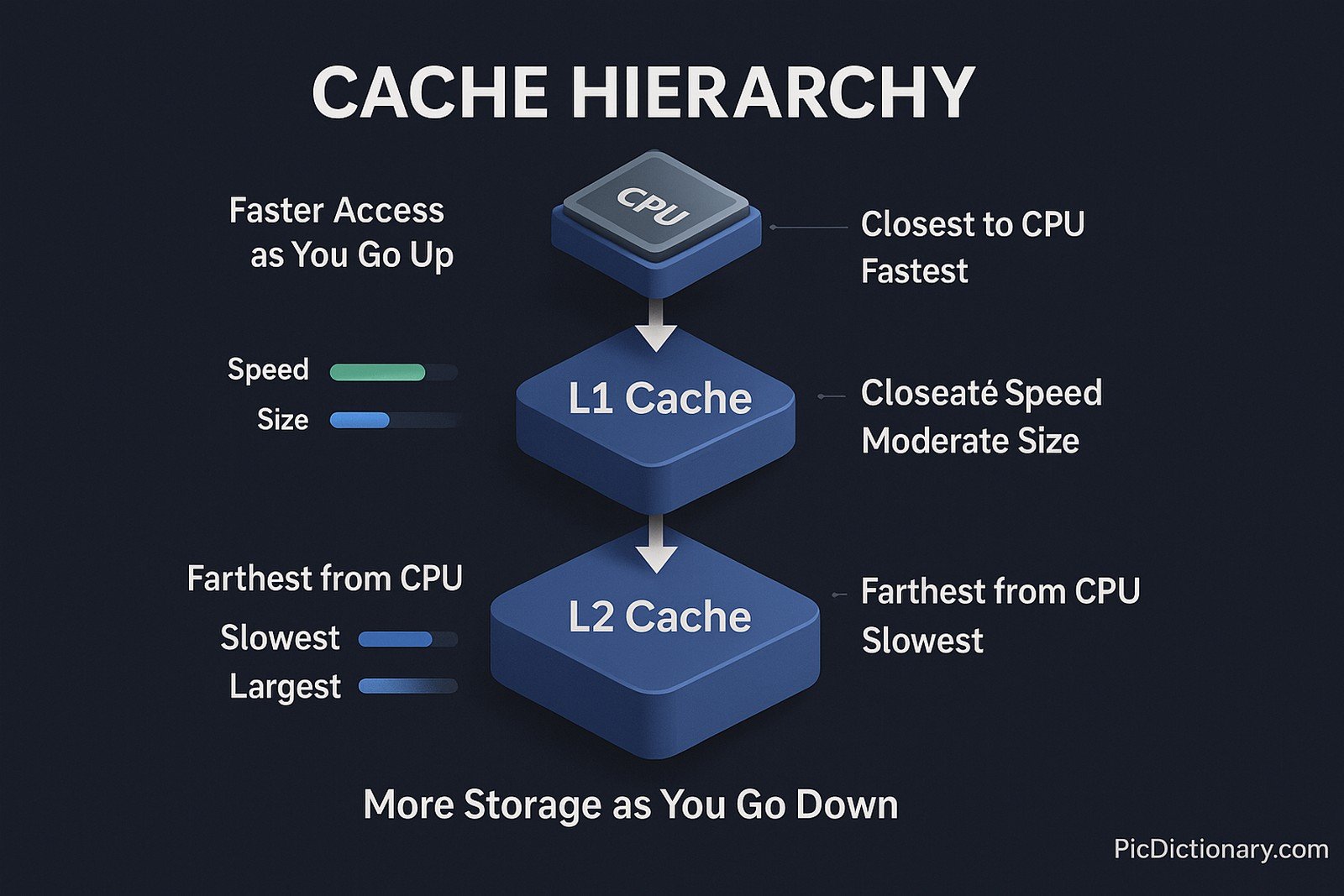

Cache hierarchy is a multi-level storage architecture that speeds up data access by keeping frequently used data close to the processor. It typically consists of three cache levels, L1 (Level 1), L2 (Level 2), and L3 (Level 3), which differ in size, speed, and proximity to the CPU cores. L1 is the smallest and fastest and sits inside each core. L2 is larger and somewhat slower. L3 is the largest and slowest of the three and is usually shared among cores. Because most memory accesses are served by one of the fast levels, this arrangement lowers the average memory access latency and improves overall system performance.
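One common way to quantify the latency benefit is the average memory access time (AMAT): AMAT = t1 + m1 × (t2 + m2 × (t3 + m3 × T_mem)), where t is a level's hit latency, m its local miss rate, and T_mem the main-memory latency. The Python sketch below computes it under stated assumptions: each level has a fixed hit latency and a local hit rate (the fraction of accesses reaching that level that hit there), and the function name `amat` and all numbers in the example are hypothetical round values, not figures for any real CPU.

```python
# Average memory access time (AMAT) for a multi-level cache hierarchy.
# The latencies and hit rates used below are hypothetical, illustrative
# values, not measurements of any particular processor.

def amat(levels, memory_latency):
    """Return the average access time in cycles.

    levels: list of (hit_latency_cycles, local_hit_rate) tuples, ordered
            from the fastest level (L1) to the slowest (e.g. L3).
    memory_latency: cycles to fetch from main memory after missing every level.
    """
    total = 0.0
    reach = 1.0  # fraction of all accesses that get as far as this level
    for latency, hit_rate in levels:
        total += reach * latency   # every access reaching a level pays its lookup time
        reach *= 1.0 - hit_rate    # only the misses continue to the next level
    return total + reach * memory_latency


hierarchy = [(4, 0.90), (12, 0.80), (40, 0.50)]  # (cycles, hit rate) for L1, L2, L3
print(f"{amat(hierarchy, 200):.1f} cycles")  # → 8.0 cycles
print(f"{amat([], 200):.1f} cycles")         # → 200.0 cycles (no caches at all)
```

With these assumed numbers, the hierarchy brings the average access time down from 200 cycles (every access going to RAM) to about 8 cycles, even though L1 alone misses 10% of the time.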

Cache Hierarchy Explained Easy

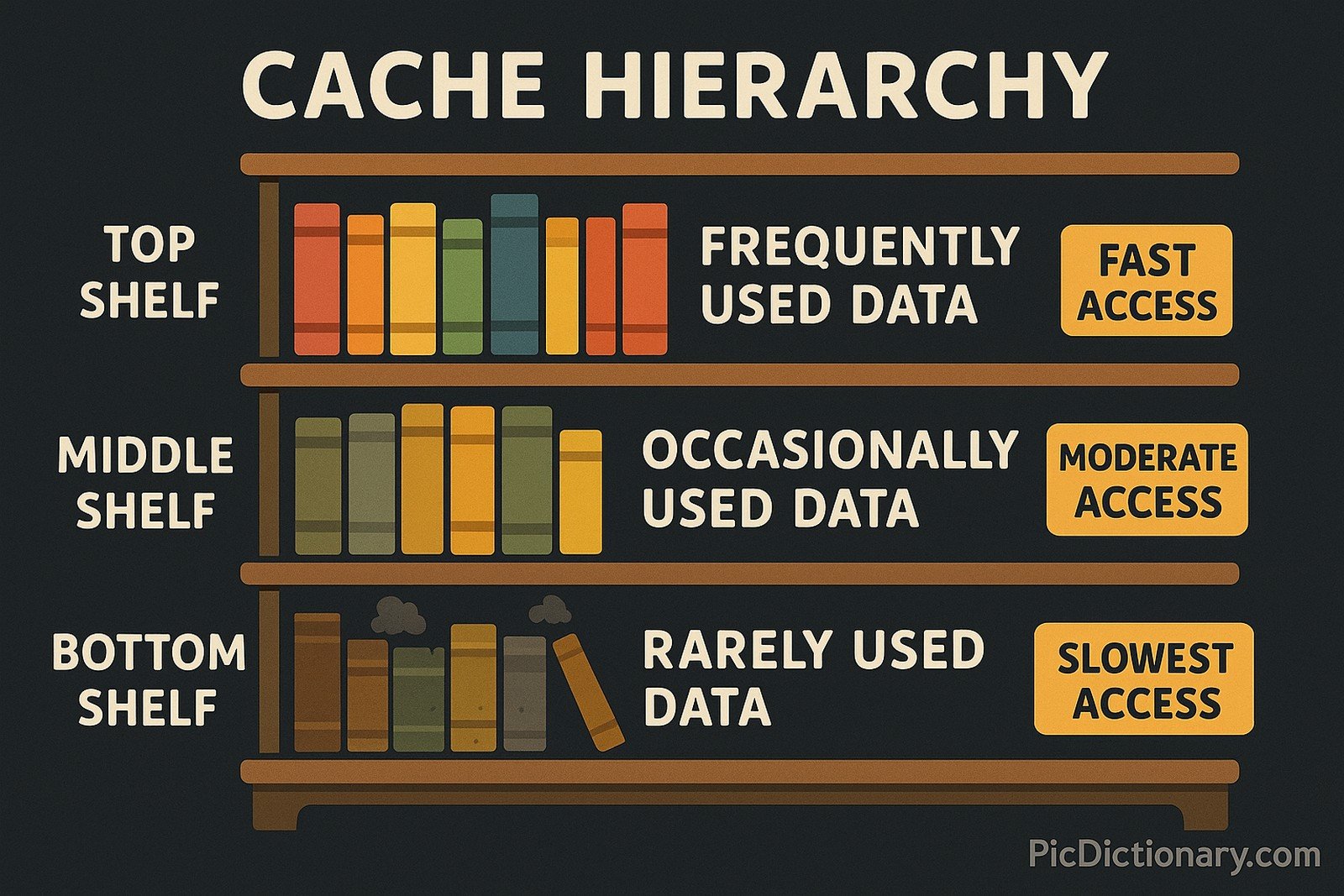

Imagine you have a bookshelf with different shelves. The top shelf holds the books you use most often (fast access), the middle shelf has books you sometimes use (slower access), and the bottom shelf stores books you rarely need (slowest access). Cache hierarchy works the same way for computers: it keeps the most needed data in the fastest memory, so your computer doesn't have to wait on slower storage every time it needs something.
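To make the shelf analogy concrete, the Python sketch below simulates three "shelves" as cache levels of different sizes. It is a toy under simplifying assumptions: each level is fully associative with least-recently-used (LRU) replacement, addresses are plain labels, and the names `LRULevel` and `access` are hypothetical. Real CPU caches are set-associative, store fixed-size cache lines, and use hardware-specific replacement policies.

```python
from collections import OrderedDict


class LRULevel:
    """A toy cache level holding up to `capacity` addresses. When full it
    evicts the least recently used address (fully associative LRU)."""

    def __init__(self, name, capacity):
        self.name = name
        self.capacity = capacity
        self.lines = OrderedDict()  # ordered from least to most recently used

    def lookup(self, addr):
        if addr in self.lines:
            self.lines.move_to_end(addr)  # mark as most recently used
            return True
        return False

    def insert(self, addr):
        self.lines[addr] = True
        self.lines.move_to_end(addr)
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used


def access(levels, addr):
    """Return the name of the level that served `addr` ("RAM" if none did)
    and copy the data into every faster level so the next access is quicker."""
    for i, level in enumerate(levels):
        if level.lookup(addr):
            for faster in levels[:i]:
                faster.insert(addr)
            return level.name
    for level in levels:  # missed everywhere: fetch from RAM, fill all levels
        level.insert(addr)
    return "RAM"


levels = [LRULevel("L1", 2), LRULevel("L2", 4), LRULevel("L3", 8)]
trace = ["A", "B", "A", "C", "D", "A", "B"]
print([access(levels, a) for a in trace])
# → ['RAM', 'RAM', 'L1', 'RAM', 'RAM', 'L2', 'L2']
```

In this trace, "A" and "B" are pushed out of the two-entry L1 by newer data but are still found in L2, the middle shelf, instead of going all the way back to RAM.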

Cache Hierarchy Origin

The concept of cache memory emerged in the 1960s to bridge the speed gap between the processor and main memory (RAM). Maurice Wilkes described a small, fast "slave memory" in 1965, and the IBM System/360 Model 85, announced in 1968, was the first commercial computer to include a cache. As processors grew faster relative to memory, a single cache was no longer enough, which led to the multi-level cache designs used in today's CPUs.

Cache Hierarchy Etymology

The term “cache” comes from the French verb “cacher,” meaning “to hide.” A cache is hidden from software: programs read and write memory as usual, while the hardware transparently keeps copies of frequently accessed data in the cache. “Hierarchy” refers to the ordered levels of cache memory, each playing a role in speeding up data retrieval.

Cache Hierarchy Usage Trends

With the rise of high-performance computing, artificial intelligence, and gaming, cache hierarchy design has become critical. Modern processors integrate larger and more sophisticated caches: some high-end desktop CPUs carry 128 MB of L3 cache, and some server CPUs carry more than 1 GB. Additionally, technologies such as Intel’s Adaptive Caching and AMD’s 3D V-Cache, which stacks an extra SRAM die on top of the processor to enlarge the L3 cache, aim to enhance performance further.

Cache Hierarchy Usage

- Formal/Technical Tagging:

- Computer Architecture

- Processor Design

- Memory Management

- Typical Collocations:

- "multi-level cache hierarchy"

- "L1, L2, and L3 cache"

- "CPU cache optimization"

- "cache coherence mechanism"

Cache Hierarchy Examples in Context

- A modern gaming processor with a large L3 cache can keep more of a game's frequently used data on-chip, raising frame rates in CPU-bound games.

- Mobile processors optimize their cache hierarchy to improve battery life by reducing the number of accesses to slower, more energy-hungry main memory.

- High-performance computing systems use multi-tier cache hierarchies to speed up scientific simulations.

Cache Hierarchy FAQ

- What is cache hierarchy?

Cache hierarchy refers to the layered structure of cache memory (L1, L2, L3) designed to speed up data retrieval.

- Why do CPUs use multiple levels of cache?

No single memory can be both large and very fast. Multiple levels balance speed against capacity: a small, fast L1 serves most accesses quickly, while larger, slower levels keep less frequently used data available without a trip to main memory.

- How does cache hierarchy improve performance?

It reduces latency by keeping critical data close to the CPU, so fewer accesses have to wait for slower RAM.

- What is the difference between L1, L2, and L3 cache?

L1 is the smallest and fastest (typically tens of kilobytes per core), L2 is larger but slightly slower (typically hundreds of kilobytes to a few megabytes), and L3 is the largest but slowest of the three (often tens of megabytes, shared among cores).

- Does increasing cache size always improve performance?

Not necessarily. A larger cache takes longer to access and costs more chip area and power, and once it already holds a program's working set, extra capacity adds little to the hit rate.

- What is cache coherence in a multi-core system?

It ensures that all CPU cores see a consistent view of data stored in their caches, so no core keeps reading a stale copy after another core has written a new value.

- Can cache hierarchy affect gaming performance?

Yes. Games limited by CPU speed benefit when more of their frequently used data fits in cache, which can raise frame rates and reduce stuttering.

- How does cache hierarchy relate to RAM?

Cache acts as a high-speed intermediary between RAM and the CPU, reducing the need to fetch data from slower main memory.

- What is the impact of cache hierarchy on power consumption?

A well-optimized cache hierarchy can reduce power usage, because an access to on-chip cache costs far less energy than an access to off-chip main memory.

- Are cache hierarchies the same across all processors?

No. Different processors (Intel, AMD, ARM) use different cache sizes, numbers of levels, and sharing arrangements tailored to specific use cases.
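The coherence answer above can be illustrated with MSI, one of the simplest invalidation-based coherence protocols. The Python sketch below tracks a single cache line across several cores. It assumes an idealized snooping bus on which every operation completes instantly and in order, and the class name `MSILine` is hypothetical; real processors use richer variants of the same idea (for example MESI) plus hardware to resolve simultaneous requests.

```python
class MSILine:
    """Toy snooping MSI protocol for one cache line shared by several cores.

    Each core's copy is in state 'M' (modified: the only valid copy, newer
    than memory), 'S' (shared: clean, read-only copy) or 'I' (invalid).
    """

    def __init__(self, num_cores, memory_value=0):
        self.state = ["I"] * num_cores
        self.data = [None] * num_cores
        self.memory = memory_value

    def _write_back_owner(self):
        # A core holding the line in M writes its value back to memory
        # and downgrades to S, so other cores can read the latest value.
        for core, st in enumerate(self.state):
            if st == "M":
                self.memory = self.data[core]
                self.state[core] = "S"

    def read(self, core):
        if self.state[core] == "I":  # read miss: fetch the latest value
            self._write_back_owner()
            self.data[core] = self.memory
            self.state[core] = "S"
        return self.data[core]

    def write(self, core, value):
        if self.state[core] != "M":  # must gain exclusive ownership first
            self._write_back_owner()
            for other in range(len(self.state)):
                if other != core:
                    self.state[other] = "I"  # invalidate every other copy
                    self.data[other] = None
        self.state[core] = "M"
        self.data[core] = value


line = MSILine(num_cores=2, memory_value=0)
print(line.read(0), line.read(1), line.state)  # → 0 0 ['S', 'S']
line.write(0, 42)
print(line.state)                              # → ['M', 'I']
print(line.read(1), line.state)                # → 42 ['S', 'S']
```

The key step is in `write`: before a core modifies the line, every other copy is invalidated, so core 1's next read must fetch the new value 42 instead of reusing its stale 0.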

Cache Hierarchy Related Words

- Categories/Topics:

- CPU Architecture

- Memory Hierarchy

- Data Caching

Did you know?

The Intel 80486, introduced in 1989, was the first x86 processor with an on-chip cache. Its 8 KB L1 cache was a significant innovation at the time and substantially boosted performance. (Other microprocessors had on-chip caches earlier; Motorola's 68020 of 1984, for example, included a 256-byte instruction cache.) Today, caches of tens of megabytes are common, showing how far cache hierarchy has evolved.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
