Asynchronous Advantage Actor-Critic (A3C)


Asynchronous Advantage Actor-Critic Definition

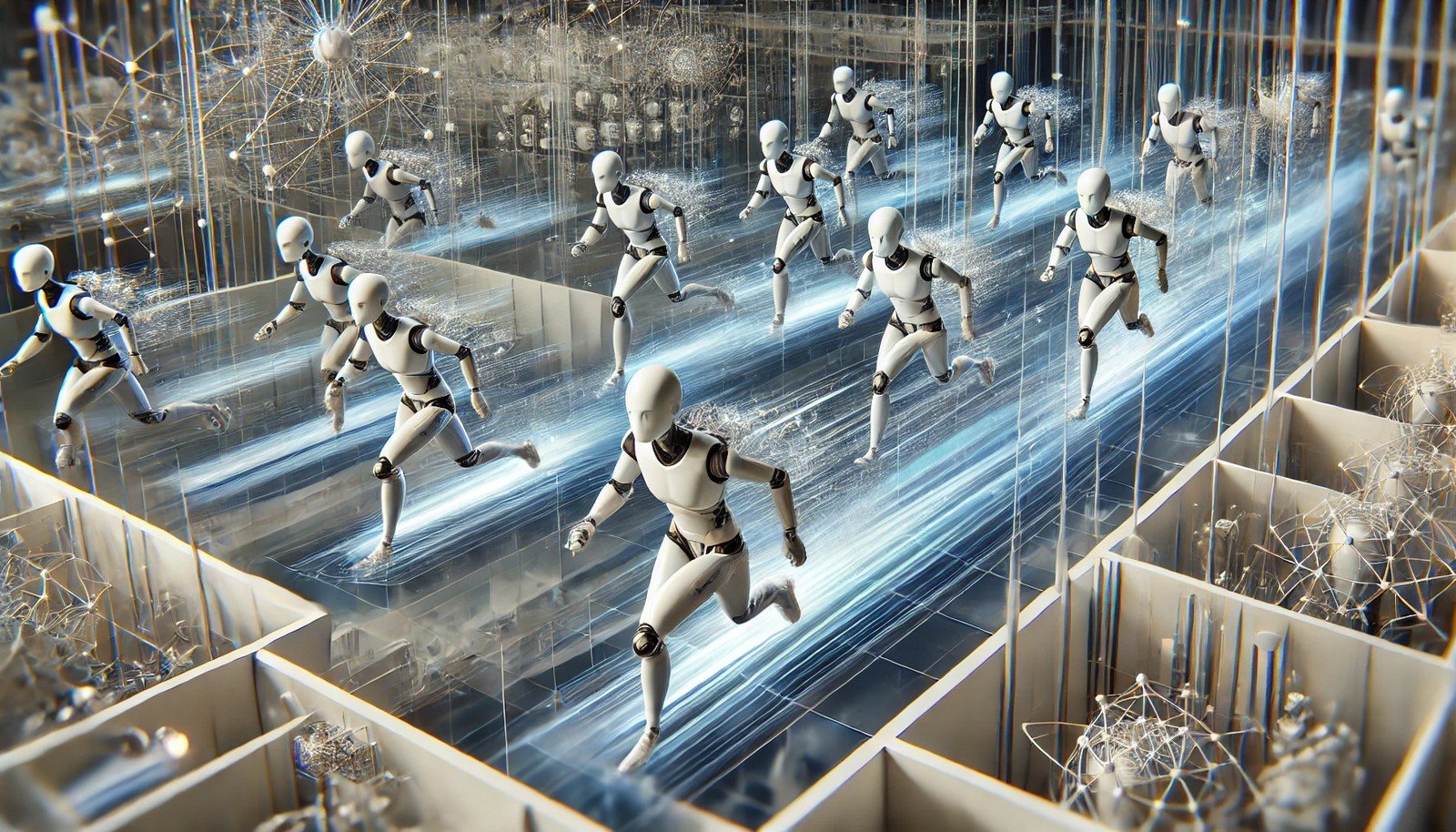

Asynchronous Advantage Actor-Critic, or A3C, is a reinforcement learning algorithm that trains several copies of an agent in parallel. Each worker interacts with its own copy of the environment and asynchronously applies its updates to a shared set of parameters. Because the workers are in different states at any given moment, their experience is less correlated, which stabilizes training. The "actor-critic" part refers to two learned functions: the actor (the policy) proposes actions, while the critic (a value function) estimates how good the current state is. In practice the two often share most of one neural network and differ only in their output heads.
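To make the actor and critic roles concrete, here is a minimal sketch in plain Python. It assumes a linear model over a small hand-written feature vector; the names `actor`, `critic`, `policy_weights`, and `value_weights`, and all the numbers, are hypothetical and chosen only for illustration. A real A3C agent would use a neural network instead.

```python
import math
import random


def softmax(logits):
    """Turn raw action scores into a probability distribution."""
    largest = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - largest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dot(weights, features):
    """Plain dot product of two equal-length lists."""
    return sum(w * f for w, f in zip(weights, features))


def actor(policy_weights, features):
    """Actor: one weight row per action, returns action probabilities."""
    return softmax([dot(row, features) for row in policy_weights])


def critic(value_weights, features):
    """Critic: scalar estimate of the value V(s) of the current state."""
    return dot(value_weights, features)


# Hypothetical state with 3 features and 2 possible actions.
features = [1.0, 0.5, -0.2]
policy_weights = [[0.1, 0.0, 0.3], [-0.2, 0.4, 0.1]]
value_weights = [0.5, -0.1, 0.2]

probs = actor(policy_weights, features)    # what the actor would do
value = critic(value_weights, features)    # how good the critic thinks the state is
action = random.choices(range(len(probs)), weights=probs)[0]
print(probs, value, action)
```

The actor and critic read the same `features` here, which loosely mirrors the common design where both heads sit on top of one shared network.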

Asynchronous Advantage Actor-Critic Explained Easy

Imagine you’re playing a video game with friends, but everyone plays different levels. Each person learns something new and shares it with the group. By learning this way, the group becomes better, faster. A3C works like this, but with many computer "friends" learning at once to speed up decision-making skills.

Asynchronous Advantage Actor-Critic Origin

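The A3C algorithm was introduced by researchers at DeepMind in 2016, in the paper "Asynchronous Methods for Deep Reinforcement Learning". It responded to limitations of earlier deep reinforcement learning methods, which typically relied on a large replay memory of past experience to keep training stable. Running many workers asynchronously was a different way to stabilize training while letting the agents learn continuously in parallel.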

Asynchronous Advantage Actor-Critic Etymology

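The term "asynchronous" refers to the workers running and updating the shared parameters independently, without waiting for one another. "Advantage" refers to the advantage function, which measures how much better an action turned out than the critic expected for that state, rather than to a general gain in efficiency. "Actor-critic" describes the two-part structure in reinforcement learning where the actor proposes actions and the critic evaluates them.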
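In actor-critic methods the advantage is usually defined as A(s, a) = Q(s, a) - V(s), and it is commonly estimated as the discounted return actually observed minus the critic's value estimate. The sketch below shows that estimate for one short rollout; the function name `n_step_advantages`, the discount `gamma`, and the sample numbers are assumptions made for illustration, not values from any particular implementation.

```python
def n_step_advantages(rewards, values, bootstrap_value, gamma=0.99):
    """Return (returns, advantages) for one rollout segment.

    rewards[t]       reward received after step t
    values[t]        critic's estimate V(s_t) at step t
    bootstrap_value  critic's estimate for the state after the last step
                     (use 0.0 if the episode ended there)
    """
    returns = []
    running = bootstrap_value
    # Walk backwards so each return reuses the one after it.
    for reward in reversed(rewards):
        running = reward + gamma * running
        returns.append(running)
    returns.reverse()
    # Advantage: how much better the outcome was than the critic predicted.
    advantages = [g - v for g, v in zip(returns, values)]
    return returns, advantages


returns, advantages = n_step_advantages(
    rewards=[1.0, 0.0, 2.0],
    values=[1.5, 1.0, 2.5],
    bootstrap_value=0.0,
    gamma=0.5,
)
print(returns)     # [1.5, 1.0, 2.0]
print(advantages)  # [0.0, 0.0, -0.5]
```

A positive advantage nudges the actor toward the action it took, and a negative one (as in the last step here) nudges it away.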

Asynchronous Advantage Actor-Critic Usage Trends

A3C attracted wide attention after its publication, especially in areas such as robotics, gaming, and automated control systems. Because its workers train in parallel, it can shorten training time, which makes it attractive in settings where models must be trained or adapted quickly.

Asynchronous Advantage Actor-Critic Usage

- Formal/Technical Tagging:

- Reinforcement Learning

- Parallel Processing

- Neural Networks

- Typical Collocations:

- "A3C reinforcement learning"

- "asynchronous training"

- "actor-critic model"

- "policy optimization with A3C"

Asynchronous Advantage Actor-Critic Examples in Context

- The A3C algorithm enables robots to quickly learn movement patterns by training multiple models at once.

- In a video game AI, A3C allows agents to improve gameplay strategies by training on different scenarios asynchronously.

- Autonomous drones use A3C to learn how to navigate complex environments more efficiently through parallel training.

Asynchronous Advantage Actor-Critic FAQ

- What is Asynchronous Advantage Actor-Critic (A3C)?

A3C is a reinforcement learning algorithm that trains multiple copies of an agent asynchronously, which speeds up learning and makes it more stable.

- How does A3C differ from synchronous algorithms?

In A3C each worker updates the shared parameters as soon as it finishes its own batch of steps, without waiting for the others. Synchronous variants wait for all workers to finish and then apply one combined update.

- What’s the main advantage of A3C?

Its parallel workers collect varied experience at the same time, which allows faster and more stable learning in complex environments.

- How does A3C apply to robotics?

In robotics, A3C can be used to learn control policies for decision-making and complex movement patterns, often in simulation first.

- Is A3C used in games?

Yes. It is a well-known method for game-playing agents, letting them learn strategies across many game instances asynchronously.

- What is the actor-critic model?

The actor-critic model has two parts: the actor proposes actions, and the critic evaluates them. The two are often implemented as separate output heads of one shared neural network.

- Who developed A3C?

A3C was introduced by researchers at DeepMind in 2016.

- Can A3C be applied to autonomous vehicles?

Yes, A3C can be applied to autonomous-vehicle tasks that require sequential decision-making, like navigation.

- What are the challenges with A3C?

Challenges include computational demands, updates computed from slightly outdated parameters, and hyperparameter tuning for good performance in varied environments.

- Why is "asynchronous" important in A3C?

Asynchronous processing lets each worker learn independently, so the updates come from varied experience, which stabilizes training and improves efficiency.
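The asynchronous pattern described in the answers above can be sketched with Python threads. This is a toy illustration under stated assumptions, not an A3C implementation: the "model" is a single number `theta`, the loss is the hypothetical `(theta - TARGET) ** 2`, and a lock is used to keep the example simple, whereas the published A3C work describes applying updates without locking.

```python
import threading

TARGET = 3.0                 # toy optimum, stands in for "good parameters"
shared = {"theta": 0.0}      # parameters shared by all workers
lock = threading.Lock()


def worker(steps, lr=0.1):
    """One worker: copy the shared parameter, compute a gradient, push it back."""
    for _ in range(steps):
        local_theta = shared["theta"]            # 1. sync a local copy
        grad = 2.0 * (local_theta - TARGET)      # 2. gradient of the toy loss
        with lock:                               # 3. apply it to the shared copy
            shared["theta"] -= lr * grad         #    (may be slightly stale by now)


threads = [threading.Thread(target=worker, args=(200,)) for _ in range(4)]
for t in threads:
    t.start()                # workers run without waiting for each other
for t in threads:
    t.join()

print(shared["theta"])       # ends up very close to TARGET
```

Each worker may apply a gradient computed from a value of `theta` that another worker has already changed. The algorithm tolerates that staleness in exchange for never having to wait.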

Asynchronous Advantage Actor-Critic Related Words

- Categories/Topics:

- Reinforcement Learning

- Deep Learning

- Parallel Computing

Did you know?

A3C was an influential reinforcement learning method that helped popularize training many agent copies in parallel. Its development showed that machines could learn faster and more stably without a large replay memory, an idea that has influenced later work in fields like autonomous systems and advanced robotics.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
