Gradient Noise in AI and Machine Learning

Gradient Noise Definition

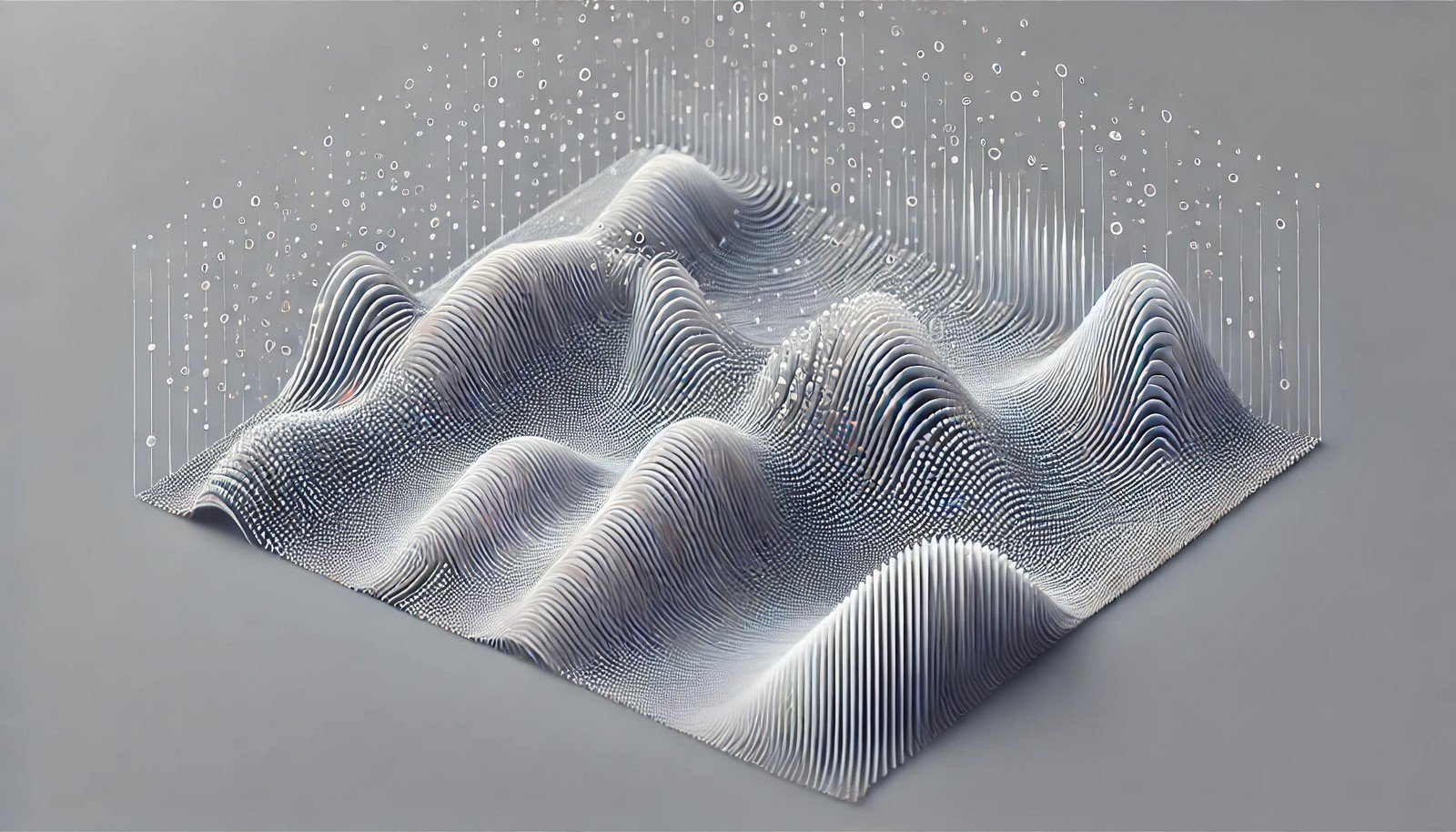

Gradient Noise is a technique in machine learning where controlled random values, known as noise, are added to gradients (the derivatives of the loss with respect to the model’s parameters, which set the direction and size of each training update). The noise is usually zero-mean, so each update is perturbed without being pushed in a fixed direction. This can keep models from becoming too dependent on specific patterns in the training data, which promotes generalization to a broader range of data. By introducing noise to gradients, models can reduce overfitting and become more robust, improving their ability to make accurate predictions on new data.
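The definition above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function names (`noise_std`, `noisy_sgd_step`, `fit_linear`), the linear-regression setting, and the decaying schedule with its `eta` and `gamma` values are all assumptions chosen for the example. The schedule simply makes the noise shrink as training progresses, which is one common way to keep it "controlled".

```python
import numpy as np

def noise_std(step, eta=0.01, gamma=0.55):
    """Standard deviation of the gradient noise at a given training step.

    Assumed schedule: variance = eta / (1 + step) ** gamma, so the noise
    decays over time. eta and gamma are illustrative values only.
    """
    return np.sqrt(eta / (1.0 + step) ** gamma)

def noisy_sgd_step(w, grad, lr, step, rng):
    """One gradient-descent update with zero-mean Gaussian noise added to the gradient."""
    noise = rng.normal(0.0, noise_std(step), size=grad.shape)
    return w - lr * (grad + noise)

def fit_linear(X, y, steps=500, lr=0.1, seed=0):
    """Fit linear-regression weights with noisy gradient descent (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    w = np.zeros(X.shape[1])
    for step in range(steps):
        # Gradient of the mean squared error with respect to w.
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w = noisy_sgd_step(w, grad, lr, step, rng)
    return w
```

On a simple problem like this the noise makes little difference to the result; the sketch only shows where the noise enters the update. In deep networks, the same step would be applied to each parameter’s gradient before the optimizer update.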

Gradient Noise Explained Easy

Imagine you’re studying for a test by practicing different types of math problems. If you only study one type of problem, you might get good at that specific type but struggle with new problems. Gradient Noise is like adding variety to your practice by mixing in different types of problems, helping you become better at math overall.

Gradient Noise Origin

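The concept of Gradient Noise emerged as researchers sought ways to improve the robustness and trainability of models, particularly deep neural networks. Injecting randomness into optimization was explored earlier in statistics and stochastic optimization, and the idea gained traction in the AI community as computational capabilities expanded; a 2015 paper titled "Adding Gradient Noise Improves Learning for Very Deep Networks" is a frequently cited example.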

Gradient Noise Etymology

The term “Gradient Noise” combines “gradient,” referring to the slope of the loss function with respect to a model’s parameters, with “noise,” indicating the randomness added to those gradients during training.

Gradient Noise Usage Trends

With advancements in AI and deep learning, Gradient Noise has seen increased use across fields like natural language processing, computer vision, and robotics. Researchers use it to improve model reliability and ensure that AI systems perform well with real-world data that may vary from the training data.

Gradient Noise Usage

- Formal/Technical Tagging:

- Machine Learning

- Deep Learning

- Model Robustness

- Data Generalization

- Typical Collocations:

- "add gradient noise"

- "gradient noise in neural networks"

- "improving model with gradient noise"

- "noise in gradients for generalization"

Gradient Noise Examples in Context

- In a machine learning model for image recognition, Gradient Noise is used to make the model more adaptable to new types of images it hasn’t encountered in training.

- In robotics, Gradient Noise can help robots adapt to slightly varied environments by introducing randomness while their decision-making models are being trained.

- Gradient Noise is used in natural language processing models to prevent overfitting to specific language patterns seen in the training data.

Gradient Noise FAQ

- What is Gradient Noise?

Gradient Noise is a method of adding controlled randomness to the gradients used while training AI models, with the aim of improving their generalization and robustness.

- Why is Gradient Noise important in machine learning?

It can reduce overfitting, allowing models to perform better on new data outside the training set.

- How does Gradient Noise help neural networks?

By adding noise, it allows neural networks to avoid over-reliance on training patterns and generalize better.

- Is Gradient Noise used in all machine learning models?

No, it is particularly useful in complex models, such as neural networks, where overfitting is a concern.

- What are some applications of Gradient Noise?

It’s used in image recognition, robotics, and natural language processing to enhance adaptability.

- How does Gradient Noise affect model performance?

It can improve model accuracy on new data by reducing overfitting and enhancing generalization.

- Can Gradient Noise make models too random?

If applied excessively, it can reduce model accuracy by drowning out the real gradient signal, so the noise level is typically kept small and often decreased as training progresses.

- Is Gradient Noise similar to data augmentation?

Both add variability, but Gradient Noise directly affects the learning process, while data augmentation alters the input data.

- Is Gradient Noise useful in real-time applications?

It is applied during training rather than at prediction time, so it adds no cost when the model runs. It can still benefit real-time systems whose models need to handle conditions slightly different from the training data.

- How is Gradient Noise different from random dropout?

Dropout randomly switches off some of the network’s units (activations) during training, while Gradient Noise leaves the network intact and adds random variations to the gradient.
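The last few answers contrast three techniques by where the randomness enters. A rough NumPy sketch of that contrast follows; the function names and parameter values are hypothetical, and simple input jitter stands in for data augmentation, which in practice is usually task-specific (crops, flips, paraphrases, and so on).

```python
import numpy as np

def augment_input(x, rng, scale=0.1):
    """Data augmentation (here: simple input jitter) changes what the model sees."""
    return x + rng.normal(0.0, scale, size=x.shape)

def dropout(activations, rng, p=0.5):
    """Inverted dropout: zero each unit with probability p, rescale the survivors."""
    mask = rng.random(activations.shape) >= p
    return activations * mask / (1.0 - p)

def add_gradient_noise(grad, rng, sigma=0.01):
    """Gradient noise: leave inputs and activations alone, perturb the gradient."""
    return grad + rng.normal(0.0, sigma, size=grad.shape)
```

All three are used only during training. They are not mutually exclusive, and a training loop could apply any combination of them.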

Gradient Noise Related Words

- Categories/Topics:

- Machine Learning

- Neural Networks

- Data Augmentation

- Model Generalization

Did you know?

Gradient Noise has played a role in making AI models more resilient to data shifts, improving their ability to handle new, unseen conditions. It’s a behind-the-scenes technique used to train models in fields as diverse as self-driving cars and speech recognition.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
