Gradient Descent

Gradient Descent Definition

Gradient Descent is an optimization algorithm, used primarily in machine learning and AI, that minimizes a function by iteratively stepping in the direction of steepest descent, that is, against the gradient. In model training, the function is a cost function: its gradient (the vector of slopes with respect to each parameter) indicates how to adjust the parameters to reduce the error, which improves accuracy on predictive tasks. Variants such as Stochastic Gradient Descent and Mini-Batch Gradient Descent estimate the gradient from a single example or a small subset of the data, trading some precision per step for cheaper updates on large datasets.

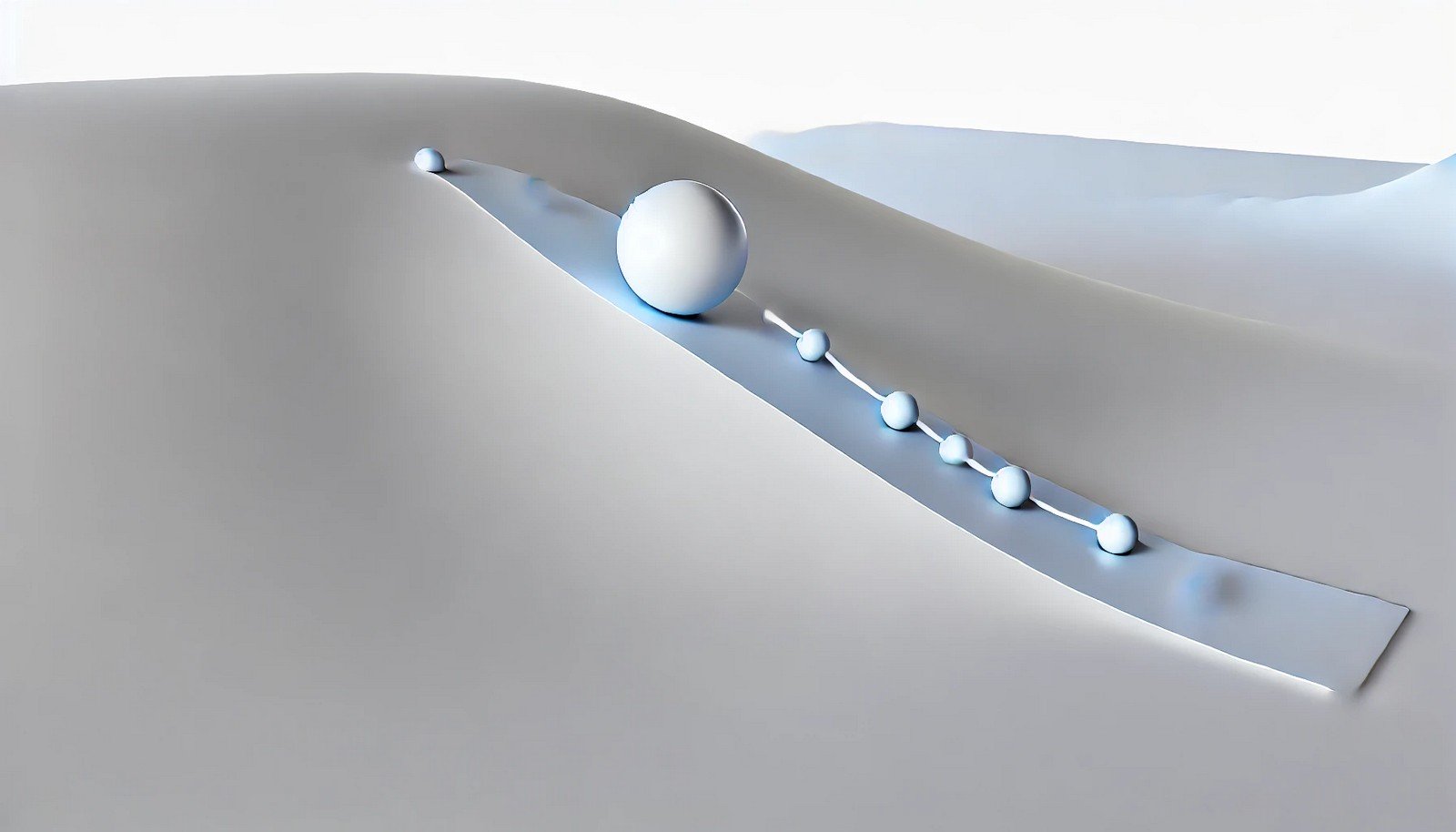
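The definition above boils down to one repeated update: new value = old value - learning rate × gradient. The following is a minimal sketch of that loop in Python, not a production implementation. The function `f(x) = (x - 3)^2`, the starting point, the learning rate, and the step count are all assumptions chosen for illustration.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from `start`."""
    x = start
    for _ in range(steps):
        # Move opposite to the slope, scaled by the learning rate.
        x = x - learning_rate * grad(x)
    return x


# Hypothetical example function: f(x) = (x - 3)^2, whose minimum is at x = 3.
def f_grad(x):
    """Derivative of f(x) = (x - 3)^2."""
    return 2 * (x - 3)


x_min = gradient_descent(f_grad, start=0.0)
print(x_min)  # very close to 3
```

The loop only needs the gradient, not the function itself, which is why the same pattern scales to models with many parameters.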

Gradient Descent Explained Easy

Imagine trying to find the bottom of a valley while blindfolded. You’d feel your way by taking small steps downhill. Gradient Descent works similarly; it takes steps based on the slope of a function to reach its lowest point, guiding a model to make better predictions.
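In the valley analogy, the size of each step corresponds to the learning rate. The sketch below, which assumes the toy function `f(x) = x^2` and three arbitrarily chosen learning rates, suggests what happens when steps are too small, reasonable, or too large.

```python
def run(learning_rate, start=10.0, steps=50):
    """Minimize f(x) = x^2 (gradient 2x) and return the final x."""
    x = start
    for _ in range(steps):
        x -= learning_rate * 2 * x
    return x


# Hypothetical learning rates: too small, reasonable, too large.
results = {lr: run(lr) for lr in (0.01, 0.1, 1.1)}
for lr, x in results.items():
    print(f"learning_rate={lr}: final x = {x:.4g}")
```

With these assumed settings, the smallest rate is still well short of the minimum at 0 after 50 steps, the middle rate gets very close, and the largest rate overshoots further on every step and moves away from the minimum.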

Gradient Descent Origin

Gradient Descent has its roots in calculus and optimization theory; the method is generally attributed to Augustin-Louis Cauchy, who described it in 1847. It has seen significant development in machine learning and AI applications over the past few decades, where its adaptability and efficiency have made it integral to training complex models.

Gradient Descent Etymology

The term "Gradient Descent" derives from "gradient," referring to a slope or incline, and "descent," signifying movement downward, reflecting its function of minimizing values by following slopes.

Gradient Descent Usage Trends

Gradient Descent has become increasingly popular as data and computational resources have expanded, particularly in deep learning and AI model training. Its variants are commonly used in large-scale AI systems for tasks such as image recognition, natural language processing, and recommendation systems.

Gradient Descent Usage

- Formal/Technical Tagging:

  - Machine Learning

  - Deep Learning

  - Optimization

- Typical Collocations:

  - "Gradient Descent algorithm"

  - "learning rate in Gradient Descent"

  - "convergence of Gradient Descent"

  - "Stochastic Gradient Descent training"

Gradient Descent Examples in Context

- Machine learning models use Gradient Descent to adjust parameters, enabling better performance on tasks like image recognition.

- In deep learning, Gradient Descent minimizes errors across layers in neural networks, making it vital for accurate results.

- Gradient Descent is fundamental in NLP tasks, helping models learn patterns in text data for improved understanding.
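To make "adjusting parameters" concrete, here is a small sketch that fits a straight line `y = w*x + b` by gradient descent on a mean squared error cost. The toy data, the learning rate, and the iteration count are assumptions for illustration; real models have many more parameters but follow the same pattern.

```python
# Hypothetical toy data generated from y = 2x + 1 (no noise).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]


def cost(w, b):
    """Mean squared error between predictions w*x + b and the targets."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradients(w, b):
    """Partial derivatives of the mean squared error with respect to w and b."""
    n = len(xs)
    dw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    db = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return dw, db


w, b = 0.0, 0.0
learning_rate = 0.05  # assumed value; too large a rate would diverge here
for _ in range(2000):
    dw, db = gradients(w, b)
    w -= learning_rate * dw
    b -= learning_rate * db

print(round(w, 3), round(b, 3))  # close to the true slope 2 and intercept 1
```

The cost function measures how wrong the current line is, and its gradient says in which direction to nudge `w` and `b` to reduce that error.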

Gradient Descent FAQ

- What is Gradient Descent?

  Gradient Descent is an optimization technique that finds the minimum of a function by iteratively adjusting parameters based on slopes.

- Why is Gradient Descent important in machine learning?

  It’s essential for optimizing model parameters, improving accuracy, and speeding up learning.

- What is the purpose of the learning rate in Gradient Descent?

  The learning rate controls the step size during optimization, impacting convergence speed and accuracy.

- How does Stochastic Gradient Descent differ from traditional Gradient Descent?

  Stochastic Gradient Descent updates parameters after each individual data point rather than after a full pass over the dataset, making each update cheaper but noisier.

- What is a cost function in Gradient Descent?

  It’s a function that measures the error between predicted and actual values, guiding the direction of descent.

- Can Gradient Descent be applied to non-machine learning fields?

  Yes, it’s used in various optimization problems beyond machine learning, like finance and engineering.

- What is overfitting in the context of Gradient Descent?

  Overfitting occurs when the model fits training data too closely, reducing its generalization to new data.

- What’s the difference between Batch and Mini-Batch Gradient Descent?

  Batch Gradient Descent uses the entire dataset for each update, while Mini-Batch Gradient Descent splits the data into smaller groups and updates after each group for efficiency.

- Why does Gradient Descent sometimes fail to find the minimum?

  It can settle in a local minimum or stall at a saddle point instead of reaching the global minimum, depending on the function's shape. A learning rate that is too large can also cause it to overshoot or diverge.

- How does Gradient Descent apply in neural networks?

  It optimizes weights across layers, enabling accurate predictions in deep learning models.
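The Batch, Mini-Batch, and Stochastic variants mentioned in the answers above differ only in how many data points feed each update. The sketch below illustrates this with a single hypothetical `fit` function whose `batch_size` argument selects the variant. The toy data (noise-free points on `y = 2x + 1`), the learning rate, and the epoch count are assumptions, and because the data is noise-free the noisiness of stochastic updates shows up only along the way, not in the final values.

```python
import random

# Hypothetical toy data from y = 2x + 1, with x drawn from [0, 1).
random.seed(0)
data = [(x, 2 * x + 1) for x in (random.random() for _ in range(200))]


def fit(batch_size, learning_rate=0.1, epochs=200):
    """Fit y = w*x + b by gradient descent on mean squared error.

    batch_size = len(data) gives Batch Gradient Descent,
    batch_size = 1 gives Stochastic Gradient Descent,
    anything in between gives Mini-Batch Gradient Descent.
    """
    rng = random.Random(1)
    samples = list(data)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(samples)  # visit the data in a new order each epoch
        for i in range(0, len(samples), batch_size):
            batch = samples[i:i + batch_size]
            # Gradient of the mean squared error over this batch only.
            dw = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            db = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            w -= learning_rate * dw
            b -= learning_rate * db
    return w, b


variants = [("batch", len(data)), ("mini-batch", 20), ("stochastic", 1)]
results = {name: fit(size) for name, size in variants}
for name, (w, b) in results.items():
    print(f"{name:>10}: w={w:.3f}, b={b:.3f}")
```

For the same number of passes over the data, the batch variant makes one update per pass while the others make many, so under these assumed settings it tends to end up further from the true values of 2 and 1.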

Gradient Descent Related Words

- Categories/Topics:

  - Machine Learning

  - Optimization

  - Deep Learning

Did you know?

Gradient Descent is used to train the AI models behind applications such as language translation and autonomous vehicles. In systems that keep learning from new data, the same update rule enables swift adjustments to maintain accuracy.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
