Layer Normalization

Quick Navigation:

- Layer Normalization Definition

- Layer Normalization Explained Easy

- Layer Normalization Origin

- Layer Normalization Etymology

- Layer Normalization Usage Trends

- Layer Normalization Usage

- Layer Normalization Examples in Context

- Layer Normalization FAQ

- Layer Normalization Related Words

Layer Normalization Definition

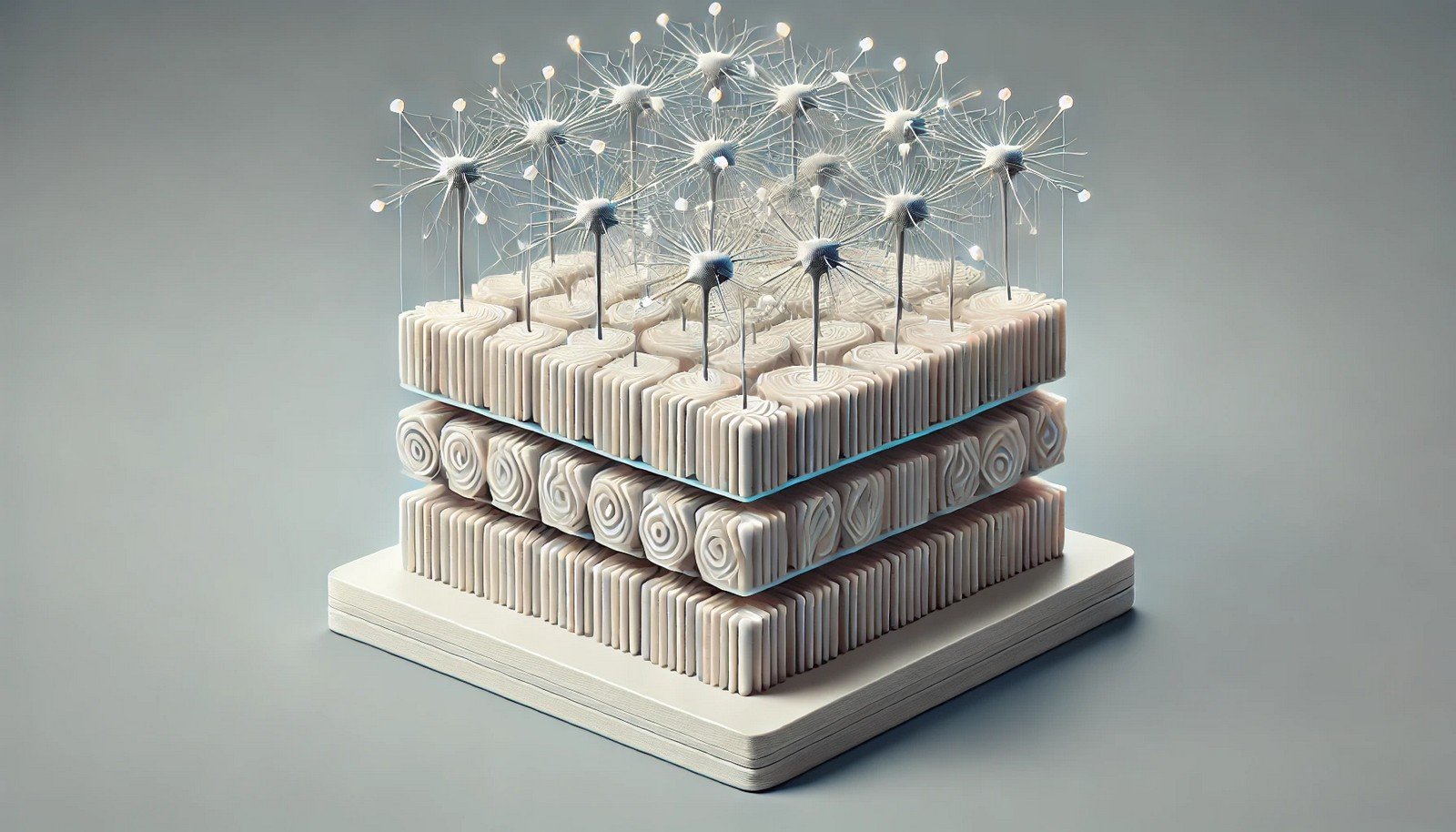

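Layer Normalization is a method used in neural networks to standardize the summed inputs to the neurons of a layer. For each training example separately, it computes the mean and variance over all the neurons in that layer, rescales the inputs to zero mean and unit variance, and then applies a learned gain and bias. Unlike batch normalization, which computes these statistics for each neuron across a batch of examples, layer normalization uses only the activations within a single example, so it performs the same computation at training and test time regardless of batch size. This makes it especially useful in recurrent neural networks (RNNs), transformers, and other architectures where batch statistics are hard to use, and it often improves training stability and convergence.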
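The computation described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the reference implementation from the paper or any library: the function name `layer_norm`, the optional `gain`/`bias` lists (defaulting to 1 and 0), and the small `eps` added to the variance for numerical safety are assumptions made for the example.

```python
import math
from typing import List, Optional


def layer_norm(x: List[float],
               gain: Optional[List[float]] = None,
               bias: Optional[List[float]] = None,
               eps: float = 1e-5) -> List[float]:
    """Normalize one example's activations across its features (the layer's neurons).

    Hypothetical helper for illustration: gain and bias stand in for the
    learned per-neuron parameters and default to 1 and 0.
    """
    n = len(x)
    mean = sum(x) / n
    # Population variance (divide by n), computed over this example's features only.
    var = sum((v - mean) ** 2 for v in x) / n
    # eps keeps the division safe when all activations are equal (var == 0).
    inv_std = 1.0 / math.sqrt(var + eps)
    gain = gain if gain is not None else [1.0] * n
    bias = bias if bias is not None else [0.0] * n
    # Standardize, then rescale and shift with the (assumed) gain and bias.
    return [g * (v - mean) * inv_std + b for v, g, b in zip(x, gain, bias)]


print([round(v, 3) for v in layer_norm([1.0, 2.0, 3.0, 4.0])])  # [-1.342, -0.447, 0.447, 1.342]
```

Because the mean and variance come from the example itself, normalizing a batch simply means applying the function to each example independently; no statistics are shared between examples.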

Layer Normalization Explained Easy

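Imagine a school that rescales each student's report card on its own: every mark is expressed relative to that student's average across all subjects and how spread out those marks are. A student whose marks are all high and one whose marks are all low end up on a comparable scale, and no other student's marks affect the result. Layer normalization does something similar inside a computer model: for each input example, it rebalances the "scores" produced by a layer's neurons, which helps the model learn more efficiently.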

Layer Normalization Origin

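Layer normalization was introduced by Ba, Kiros, and Hinton in 2016 as an alternative to batch normalization. Batch normalization depends on statistics gathered across a mini-batch, which is awkward in sequence models like RNNs, where the summed inputs change from one time step to the next and sequences can have different lengths.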

Layer Normalization Etymology

The term "layer normalization" combines "layer," referring to each level of processing in a neural network, and "normalization," indicating that values are rescaled to a standard mean and variance to keep learning stable.

Layer Normalization Usage Trends

As deep learning has expanded into fields like natural language processing, layer normalization has become a standard component, first in RNNs and then in transformers, where the original architecture applies it after every attention and feed-forward sublayer. Because it does not depend on batch statistics, it suits applications with small or variable batch sizes and variable-length inputs.

Layer Normalization Usage

- Formal/Technical Tagging:

- Deep Learning

- Machine Learning

- Recurrent Neural Networks

- Typical Collocations:

- "layer normalization technique"

- "layer-normalized neural network"

- "improving stability with layer normalization"

Layer Normalization Examples in Context

- Layer normalization helps improve training stability in transformers, enabling better language understanding models.

- In RNNs, layer normalization is applied to the summed inputs of the hidden units at every time step, keeping hidden-state activations in a stable range.

- Layer normalization is often used in NLP tasks to manage variability in input sequences.
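The RNN example above can be made concrete with a toy recurrent cell that normalizes the summed inputs to its hidden units at each time step before the `tanh` nonlinearity, following the scheme described by Ba et al. This is a hedged sketch: the helper names (`ln_rnn_step`, `run_rnn`), the random Gaussian weights, and the fixed gain of 1 and bias of 0 are hypothetical choices for illustration, and nothing here is trained.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def layer_norm(a: Vector, gain: Vector, bias: Vector, eps: float = 1e-5) -> Vector:
    """Normalize a vector across its own entries, then apply gain and bias."""
    n = len(a)
    mean = sum(a) / n
    var = sum((v - mean) ** 2 for v in a) / n
    inv_std = 1.0 / math.sqrt(var + eps)
    return [g * (v - mean) * inv_std + b for v, g, b in zip(a, gain, bias)]


def ln_rnn_step(x_t: Vector, h_prev: Vector, w_xh: Matrix, w_hh: Matrix,
                gain: Vector, bias: Vector) -> Vector:
    # Summed inputs to the hidden units at this time step.
    a_t = [u + v for u, v in zip(matvec(w_xh, x_t), matvec(w_hh, h_prev))]
    # Mean and variance come from a_t alone: no batch statistics, and nothing
    # has to be stored separately for each time step.
    return [math.tanh(v) for v in layer_norm(a_t, gain, bias)]


def run_rnn(xs: List[Vector], hidden_size: int, seed: int = 0) -> List[Vector]:
    """Run the hypothetical layer-normalized cell over a sequence of inputs."""
    rng = random.Random(seed)
    input_size = len(xs[0])
    w_xh = [[rng.gauss(0, 1) for _ in range(input_size)] for _ in range(hidden_size)]
    w_hh = [[rng.gauss(0, 1) for _ in range(hidden_size)] for _ in range(hidden_size)]
    gain, bias = [1.0] * hidden_size, [0.0] * hidden_size
    h = [0.0] * hidden_size
    states = []
    for x_t in xs:
        h = ln_rnn_step(x_t, h, w_xh, w_hh, gain, bias)
        states.append(h)
    return states


states = run_rnn([[1.0, 0.5], [0.0, -1.0], [2.0, 1.0]], hidden_size=4)
for t, h in enumerate(states):
    print(t, [round(v, 3) for v in h])
```

The same normalization runs unchanged whether the sequence has three steps or three hundred, which is the property that made layer normalization attractive for recurrent models.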

Layer Normalization FAQ

- What is layer normalization?

Layer normalization standardizes neuron inputs within a layer to stabilize the network's learning process.

- How is layer normalization different from batch normalization?

While batch normalization standardizes inputs across a batch, layer normalization does so across features within a layer for each example.

- Why is layer normalization useful in RNNs?

Its statistics are computed from the current example at each time step, so there is no need to keep separate batch statistics for every time step, and it works the same for any batch size or sequence length.

- Can layer normalization improve model convergence?

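Often, yes. By keeping the scale of each layer's summed inputs stable, it can help the model converge faster and more reliably; the original paper reported substantially shorter training times than previously published techniques.

- Is layer normalization commonly used in transformers?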

Yes, it's widely used in transformer architectures for natural language processing and other tasks.

- How does layer normalization impact training stability?

It reduces fluctuations in neuron activations, enhancing the model's stability during training.

- Is layer normalization effective for convolutional neural networks?

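It's less common. In the original paper's experiments, batch normalization outperformed it on convolutional networks, but layer normalization can still help where batch statistics are unreliable, such as with very small batches.

- Does layer normalization add computational overhead?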

A little: it computes a mean and variance for every example at every normalized layer. The added cost is usually small compared with the gain in training stability.

- How does layer normalization relate to gradient flow?

By keeping the scale of each layer's activations under control, it helps reduce (though it does not eliminate) exploding and vanishing gradients, improving gradient flow.

- Can layer normalization replace batch normalization?

It can be an alternative, especially in sequence models, though both have their unique advantages.
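The difference between the two methods comes down to which axis the statistics are taken over, which a small plain-Python comparison can make visible. This sketch assumes a batch stored as a list of rows (examples) by columns (features); the function names are hypothetical, learned gain and bias are omitted, and `batch_norm_train` shows only training-mode batch statistics, leaving out the running averages batch normalization uses at inference time.

```python
import math
from typing import List

Batch = List[List[float]]  # rows are examples, columns are features (neurons)


def _standardize(values: List[float], eps: float) -> List[float]:
    """Shift to zero mean and scale to unit variance."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(var + eps) for v in values]


def layer_norm_batch(batch: Batch, eps: float = 1e-5) -> Batch:
    # One mean/variance per example, computed over that example's features.
    return [_standardize(row, eps) for row in batch]


def batch_norm_train(batch: Batch, eps: float = 1e-5) -> Batch:
    # One mean/variance per feature, computed over the examples in the batch.
    columns = [_standardize(list(col), eps) for col in zip(*batch)]
    return [list(row) for row in zip(*columns)]


batch_a = [[1.0, 2.0, 3.0], [4.0, 0.0, 8.0]]
batch_b = [[1.0, 2.0, 3.0], [-5.0, 10.0, 2.0]]  # same first example, different second one

# Layer norm: the first example's output does not depend on its batch-mates.
print(layer_norm_batch(batch_a)[0] == layer_norm_batch(batch_b)[0])  # True
# Batch norm: the first example's output changes when the other example changes.
print(batch_norm_train(batch_a)[0] == batch_norm_train(batch_b)[0])  # False
# With a batch of one, training-mode batch statistics collapse to zeros.
print(batch_norm_train([[1.0, 2.0, 3.0]]))  # [[0.0, 0.0, 0.0]]
```

The last line illustrates why batch-size independence matters: layer normalization still produces a meaningful output for a single example, while per-feature batch statistics have nothing to compare against.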

Layer Normalization Related Words

- Categories/Topics:

- Neural Networks

- Standardization Techniques

- Training Optimization

Did you know?

Layer normalization was designed to address the limitations of batch normalization in sequence-based neural networks. Its impact was especially significant in RNNs, where input distributions that shift across time steps made batch normalization hard to apply.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
