Stochastic Gradient Descent (SGD)

Stochastic Gradient Descent Definition

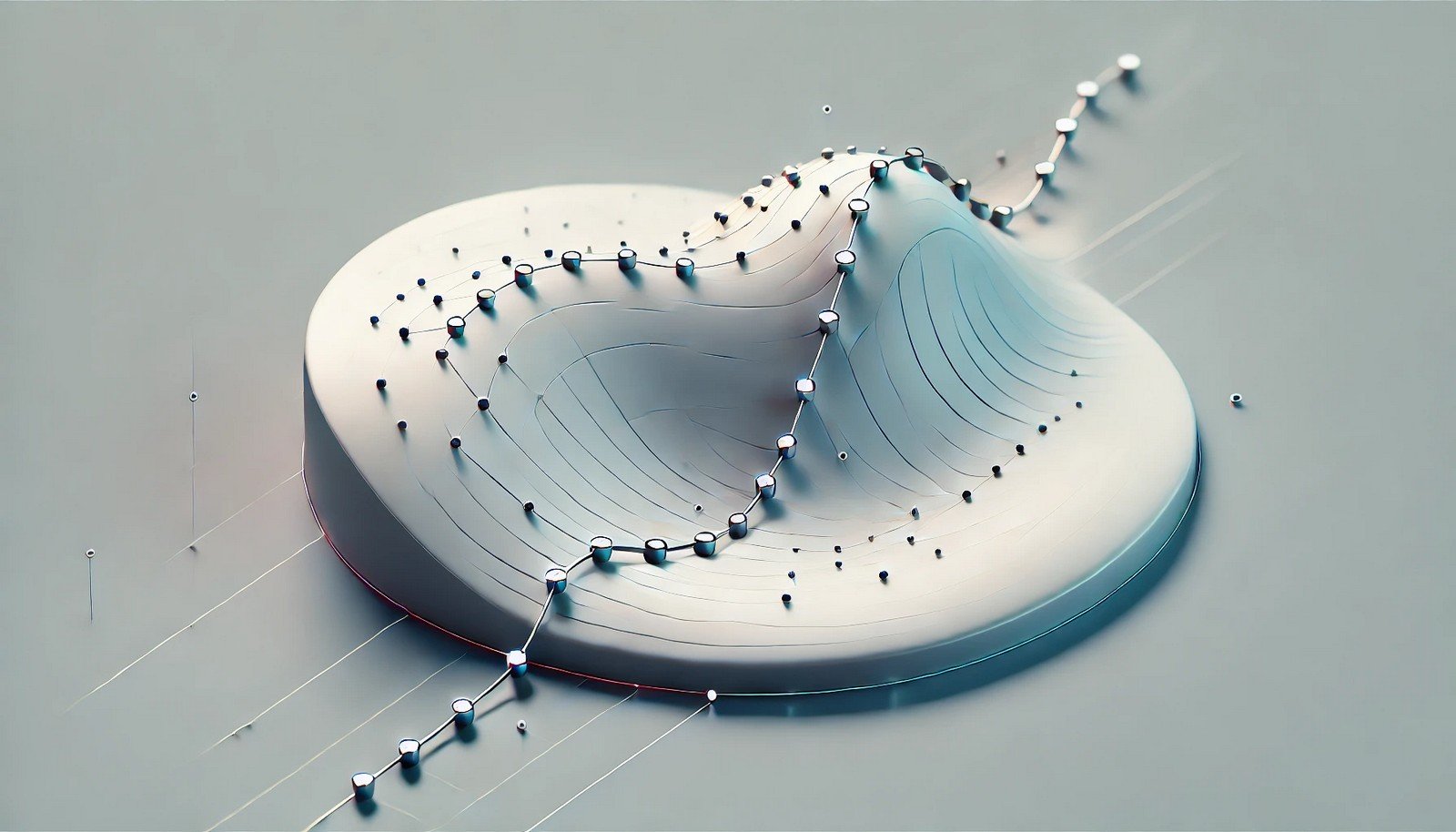

Stochastic Gradient Descent (SGD) is an optimization algorithm used in machine learning and statistical modeling. It iteratively adjusts model parameters to minimize a loss function that measures the error in the model's predictions. Batch gradient descent computes the gradient over the entire dataset before each update. SGD instead estimates the gradient from a single randomly chosen data point (or, in common practice, a small mini-batch) and updates the parameters immediately. Each update is therefore much cheaper, which makes SGD well suited to large datasets. Individual steps are noisy, but the many small updates steadily reduce the loss. This is why SGD and its variants are widely used to train neural networks and many other machine learning models.
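The contrast between the two approaches can be sketched on a toy problem. This is a minimal illustration under stated assumptions. The model is a one-variable linear regression y ≈ w*x + b with squared-error loss. The data are hypothetical and noiseless. The function names and hyperparameters (`sgd_linear_regression`, `batch_gd_linear_regression`, `lr`, `epochs`) are ours, not from any library.

```python
import random

# Minimal sketch: function names, data, and hyperparameters are illustrative assumptions.


def sgd_linear_regression(xs, ys, lr=0.05, epochs=200, seed=0):
    """Fit y ~ w*x + b with plain SGD: one (x, y) pair per parameter update."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit the samples in a fresh random order each epoch
        for i in order:
            err = (w * xs[i] + b) - ys[i]  # prediction error on this single sample
            # Gradient of the per-sample loss 0.5 * err**2 with respect to w and b
            w -= lr * err * xs[i]
            b -= lr * err
    return w, b


def batch_gd_linear_regression(xs, ys, lr=0.05, epochs=200):
    """Same model, but every update averages the gradient over the whole dataset."""
    n = len(xs)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        errs = [(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = sum(e * x for e, x in zip(errs, xs)) / n
        grad_b = sum(errs) / n
        w -= lr * grad_w  # one update per full pass over the data
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    # Hypothetical noiseless toy data generated from y = 3x + 2
    xs = [i / 10 for i in range(11)]
    ys = [3 * x + 2 for x in xs]
    print("SGD:  ", sgd_linear_regression(xs, ys))
    print("Batch:", batch_gd_linear_regression(xs, ys))
```

With the same number of passes (epochs) over these 11 points, the SGD version performs 11 times as many parameter updates as the batch version, because it updates after every sample instead of once per pass.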

Stochastic Gradient Descent Explained Easy

Think of it like learning to shoot a basketball. You don’t practice using thousands of balls at once. Instead, you throw one, see how you did, and adjust your aim for the next shot. Stochastic Gradient Descent is like that: it learns little by little, adjusting each time it sees new data, helping it get better and better.

Stochastic Gradient Descent Origin

SGD grew out of statistics and numerical analysis. Its roots trace back to the stochastic approximation method introduced by Herbert Robbins and Sutton Monro in 1951. Its practical importance grew as machine learning matured and datasets became larger.

Stochastic Gradient Descent Etymology

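The term "stochastic" comes from the Greek stokhastikos ("able to guess"), from stokhos ("target" or "guess"). In mathematics it means "random" or "probabilistic," which reflects the algorithm's reliance on randomly sampled data points to compute parameter updates. "Gradient descent" describes repeatedly stepping opposite to the gradient, which is the direction in which the error decreases most steeply.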
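Both update rules can be written compactly. In this sketch, the notation is ours rather than from the original text. θ denotes the model parameters, η > 0 the learning rate, and ℓ_i the loss on the i-th of n training examples. The minus sign is what makes each step a descent:

```latex
\begin{aligned}
\text{Batch gradient descent:}\quad & \theta_{t+1} = \theta_t - \eta \, \frac{1}{n} \sum_{i=1}^{n} \nabla_\theta \ell_i(\theta_t) \\
\text{Stochastic gradient descent:}\quad & \theta_{t+1} = \theta_t - \eta \, \nabla_\theta \ell_{i_t}(\theta_t), \qquad i_t \sim \mathrm{Uniform}\{1, \dots, n\}
\end{aligned}
```

Because i_t is drawn uniformly at random, the stochastic gradient equals the full batch gradient on average. This is why the cheap, noisy steps still head downhill overall.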

Stochastic Gradient Descent Usage Trends

The rise of deep learning and neural networks has intensified interest in SGD. Its efficiency on large datasets and its suitability for online learning (updating a model as new data arrives) make it a staple in various industries, including finance, healthcare, and tech.

Stochastic Gradient Descent Usage

- Formal/Technical Tagging:

- Optimization

- Machine Learning

- Statistical Modeling

- Typical Collocations:

- "SGD optimizer"

- "stochastic updates"

- "SGD algorithm"

- "SGD in neural networks"

Stochastic Gradient Descent Examples in Context

- In image recognition, SGD helps update parameters to classify images more accurately.

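- In recommendation systems, SGD is used to fit the model parameters (for example, user and item factors) that represent user preferences, based on ratings or other feedback.

- Language models are trained with SGD or its variants. Through many small, repeated parameter updates, they learn patterns of vocabulary and sentence structure.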
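For the recommendation-system example, one common approach is matrix factorization. This model choice is an assumption, since the text above does not name a specific model. Each user and each item gets a small vector of learned factors, and SGD nudges those vectors after every observed rating. The ratings, the function names (`train_mf`, `predict`, `rmse`), and the hyperparameters below are hypothetical and chosen only for illustration:

```python
import math
import random

# Minimal sketch: data, function names, and hyperparameters are illustrative assumptions.

# Hypothetical (user, item, rating) triples on a 1-5 scale for 4 users and 4 items
RATINGS = [
    (0, 0, 5), (0, 1, 3), (0, 3, 1),
    (1, 0, 4), (1, 3, 1),
    (2, 0, 1), (2, 1, 1), (2, 3, 5),
    (3, 1, 1), (3, 2, 5), (3, 3, 4),
]


def predict(mu, P, Q, u, i):
    """Predicted rating: global mean plus the dot product of user and item factors."""
    return mu + sum(pu * qi for pu, qi in zip(P[u], Q[i]))


def train_mf(ratings, n_users, n_items, k=2, lr=0.01, reg=0.01, epochs=2000, seed=0):
    """Matrix factorization trained with SGD, one observed rating per update."""
    rng = random.Random(seed)
    mu = sum(r for _, _, r in ratings) / len(ratings)  # global mean, kept fixed
    P = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_users)]  # user factors
    Q = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_items)]  # item factors
    data = list(ratings)
    for _ in range(epochs):
        rng.shuffle(data)
        for u, i, r in data:
            err = r - predict(mu, P, Q, u, i)
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                # SGD step on 0.5 * err**2 + 0.5 * reg * (pu**2 + qi**2)
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return mu, P, Q


def rmse(mu, P, Q, ratings):
    """Root-mean-square error of the predictions on the given ratings."""
    sq = sum((r - predict(mu, P, Q, u, i)) ** 2 for u, i, r in ratings)
    return math.sqrt(sq / len(ratings))


if __name__ == "__main__":
    mu, P, Q = train_mf(RATINGS, n_users=4, n_items=4)
    print("training RMSE:", rmse(mu, P, Q, RATINGS))
    # User 1 never rated item 1; the learned factors still give an estimate
    print("predicted rating (user 1, item 1):", predict(mu, P, Q, 1, 1))
```

For simplicity, `mu` is fixed at the global mean rating here. Many practical recommenders also learn per-user and per-item bias terms.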

Stochastic Gradient Descent FAQ

- What is Stochastic Gradient Descent?

SGD is an optimization technique that updates model parameters iteratively. Each update uses individual data points (or small mini-batches) rather than the entire dataset.

- Why is it called "stochastic"?

"Stochastic" refers to the random choice of which data point is used for each update, so each step is based on a random sample from the dataset.

- How is SGD different from traditional gradient descent?

SGD updates parameters using one data point at a time, while traditional (batch) gradient descent uses the entire dataset to compute each update.

- Where is SGD commonly used?

SGD is widely used in training neural networks, image classification, and recommendation systems.

- Can SGD handle large datasets?

Yes. Each update looks at only a small part of the data, so the cost of an update does not grow with the dataset size. This makes SGD efficient for large datasets.

- Why is SGD important in deep learning?

SGD and its variants make it practical to train large models on large datasets. SGD also adapts well to online learning, where data arrives continuously.

- Is SGD only used in machine learning?

No. While prominent in machine learning, SGD is also used in statistics and in optimization problems beyond AI.

- What are some drawbacks of SGD?

Its updates are noisy, so it can oscillate, converge slowly, or get stuck in poor local minima or saddle points. Its results also depend heavily on the choice of learning rate.

- How can the performance of SGD be improved?

Several techniques can help: tuning the learning rate, using a schedule that decreases the learning rate over time, adding momentum, or switching to an adaptive optimizer such as Adam.

- Can SGD be used with other algorithms?

Yes. It is often combined with momentum and with training techniques such as batch normalization to speed up and stabilize training.
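The FAQ answers on learning rates and momentum can be illustrated on a toy linear-regression problem. This is a minimal sketch under assumed settings. It uses heavy-ball momentum, a velocity term that accumulates past gradients. It also uses a step-decay schedule that halves the learning rate every 50 epochs. The function name `sgd_momentum` and all hyperparameter values are hypothetical and are not recommendations:

```python
import random

# Minimal sketch: function name, data, and hyperparameters are illustrative assumptions.


def sgd_momentum(xs, ys, lr=0.02, beta=0.9, epochs=200, decay_every=50, decay=0.5, seed=0):
    """Fit y ~ w*x + b with per-sample SGD plus momentum and step learning-rate decay."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    vw, vb = 0.0, 0.0  # velocities: exponentially decaying sums of past gradients
    order = list(range(len(xs)))
    for epoch in range(epochs):
        if epoch > 0 and epoch % decay_every == 0:
            lr *= decay  # step decay: shrink the learning rate every `decay_every` epochs
        rng.shuffle(order)
        for i in order:
            err = (w * xs[i] + b) - ys[i]
            gw, gb = err * xs[i], err  # per-sample gradient of 0.5 * err**2
            vw = beta * vw + gw  # heavy-ball momentum accumulates gradient history
            vb = beta * vb + gb
            w -= lr * vw
            b -= lr * vb
    return w, b


if __name__ == "__main__":
    xs = [i / 10 for i in range(11)]
    ys = [2 * x - 1 for x in xs]  # hypothetical noiseless data from y = 2x - 1
    print(sgd_momentum(xs, ys))
```

Momentum smooths out the noise of individual per-sample gradients. The decaying learning rate lets the parameters settle as training proceeds. Adaptive optimizers such as Adam go further by scaling the step size separately for each parameter.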

Stochastic Gradient Descent Related Words

- Categories/Topics:

- Optimization

- Machine Learning

- Statistical Modeling

Did you know?

Stochastic Gradient Descent is foundational to training deep neural networks. SGD and its variants are used to train the models behind modern advances in AI, such as image and speech recognition. Models trained this way power applications in autonomous driving, recommendation engines, and even personalized healthcare.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun, handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.