Deep Belief Networks (DBN)

Quick Navigation:

- Deep Belief Networks Definition

- Deep Belief Networks Explained Easy

- Deep Belief Networks Origin

- Deep Belief Networks Etymology

- Deep Belief Networks Usage Trends

- Deep Belief Networks Usage

- Deep Belief Networks Examples in Context

- Deep Belief Networks FAQ

- Deep Belief Networks Related Words

Deep Belief Networks Definition

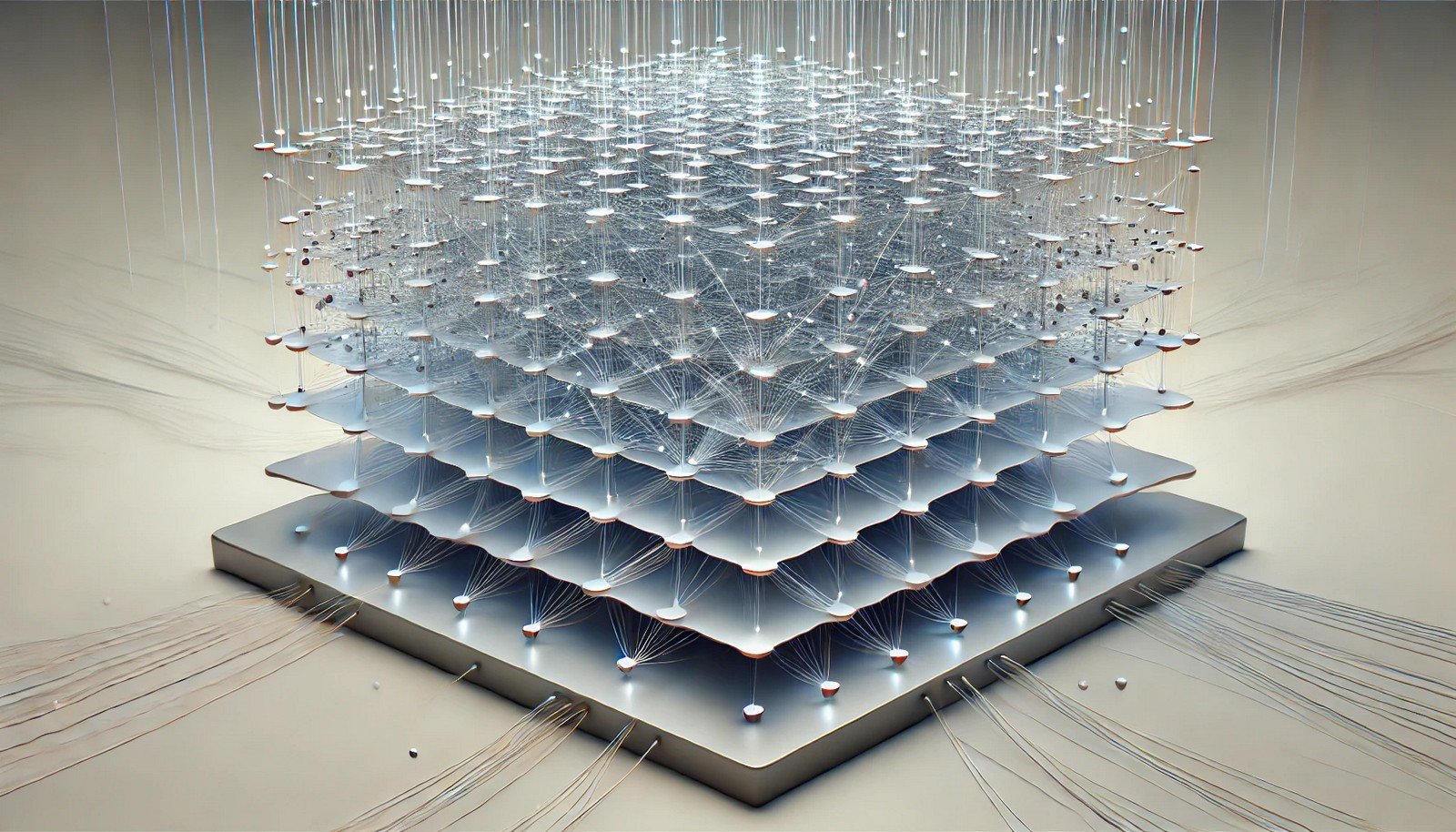

Deep Belief Networks (DBNs) are generative deep learning models built by stacking several layers of simple generative models, typically restricted Boltzmann machines (RBMs). They learn through greedy, layer-wise training: each layer is trained, usually without labels, to model the activity of the layer below it, so the network gradually uncovers higher-level structure in the data. Because each layer builds on the features learned by the one beneath it, DBNs learn hierarchical representations of complex data. This made them effective for feature extraction in applications such as image and speech recognition, and a pre-trained DBN can later be fine-tuned for supervised tasks.
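The layer-wise training described above can be sketched in NumPy. This is a minimal illustration, assuming binary inputs and RBMs trained with one-step contrastive divergence (CD-1). The names `RBM`, `pretrain_dbn`, and `encode`, the toy data, and all hyperparameters are hypothetical choices for this sketch. It covers only the greedy pre-training stage, not the generative or supervised fine-tuning used in full DBNs.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class RBM:
    """Binary RBM trained with one-step contrastive divergence (CD-1)."""

    def __init__(self, n_visible, n_hidden, rng):
        self.W = rng.normal(0.0, 0.01, size=(n_visible, n_hidden))  # visible-hidden weights
        self.a = np.zeros(n_visible)  # visible biases
        self.b = np.zeros(n_hidden)   # hidden biases
        self.rng = rng

    def hidden_probs(self, v):
        # p(h_j = 1 | v) for every hidden unit
        return sigmoid(v @ self.W + self.b)

    def visible_probs(self, h):
        # p(v_i = 1 | h) for every visible unit
        return sigmoid(h @ self.W.T + self.a)

    def cd1_step(self, v0, lr):
        # Positive phase: hidden probabilities driven by the data
        ph0 = self.hidden_probs(v0)
        h0 = (self.rng.random(ph0.shape) < ph0).astype(float)  # sample binary hidden states
        # Negative phase: one Gibbs step back down to a reconstruction and up again
        pv1 = self.visible_probs(h0)
        ph1 = self.hidden_probs(pv1)
        n = v0.shape[0]
        # Approximate gradient: data statistics minus reconstruction statistics
        self.W += lr * (v0.T @ ph0 - pv1.T @ ph1) / n
        self.a += lr * (v0 - pv1).mean(axis=0)
        self.b += lr * (ph0 - ph1).mean(axis=0)
        return np.mean((v0 - pv1) ** 2)  # reconstruction error, for monitoring only


def pretrain_dbn(data, layer_sizes, epochs=50, lr=0.1, batch_size=10, seed=0):
    """Greedy layer-wise pre-training: train one RBM, freeze it, and feed its
    hidden activations to the next RBM as training data."""
    rng = np.random.default_rng(seed)
    rbms, x = [], data
    for n_hidden in layer_sizes:
        rbm = RBM(x.shape[1], n_hidden, rng)
        for _ in range(epochs):
            order = rng.permutation(len(x))
            for start in range(0, len(x), batch_size):
                rbm.cd1_step(x[order[start:start + batch_size]], lr)
        rbms.append(rbm)
        x = rbm.hidden_probs(x)  # this layer's features become the next layer's input
    return rbms


def encode(rbms, v):
    """Propagate data upward through the trained stack to get top-level features."""
    for rbm in rbms:
        v = rbm.hidden_probs(v)
    return v


# Hypothetical toy data: noisy copies of two 6-bit prototype patterns
rng = np.random.default_rng(1)
prototypes = np.array([[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]], dtype=float)
labels = rng.integers(0, 2, size=200)  # used only to generate data, never seen by the RBMs
flips = rng.random((200, 6)) < 0.05    # flip about 5% of the bits
data = np.where(flips, 1.0 - prototypes[labels], prototypes[labels])

rbms = pretrain_dbn(data, layer_sizes=[4, 2])
features = encode(rbms, data)
print(features.shape)  # (200, 2)
```

Each RBM sees only the output of the layer beneath it, which is what makes the training greedy: a lower layer is left unchanged once trained, and only the newly added top layer is updated.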

Deep Belief Networks Explained Easy

Imagine you’re piecing together a big puzzle without knowing the picture beforehand. Each layer in a Deep Belief Network works like a detective that looks for patterns in what the layer below it found: the first layer spots small, simple pieces, and higher layers combine them into larger ones. When all the layers work together, they form a complete picture of the patterns in the data.

Deep Belief Networks Origin

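Deep Belief Networks were introduced in 2006 by Geoffrey Hinton, a leading AI researcher, and his colleagues, and were popularized in the years that followed. DBNs were a major breakthrough in deep learning because greedy, layer-wise pre-training gave deep networks a good starting point for their weights. This helped overcome the difficulties of training many-layered networks with backpropagation from random initialization.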

Deep Belief Networks Etymology

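The term “deep belief” combines two ideas. “Deep” refers to the many stacked layers of the network. “Belief network” is an established name for directed probabilistic graphical models; in a DBN, the lower layers form such a directed network, while the top two layers are joined by undirected connections. The hidden units can be read as the model’s learned “beliefs” about the causes of the observed data, often learned without labeled examples.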

Deep Belief Networks Usage Trends

DBNs were central to the early revival of deep learning but have since been largely overshadowed by architectures such as convolutional neural networks, recurrent neural networks, and transformers, which are trained end to end with backpropagation. DBNs still appear occasionally in unsupervised learning research and are sometimes used for dimensionality reduction and data pre-processing.

Deep Belief Networks Usage

- Formal/Technical Tagging:

- Machine Learning

- Deep Learning

- Unsupervised Learning

- Typical Collocations:

- "deep belief network model"

- "DBN layer"

- "restricted Boltzmann machine in DBN"

- "DBN pre-training"

Deep Belief Networks Examples in Context

- Deep Belief Networks can learn features of handwritten digits from a large set of unlabeled images and then be fine-tuned with labeled examples to recognize the digits.

- In audio processing, DBNs have been used to identify spoken words by learning sound patterns.

- DBNs have also been applied in healthcare to classify medical images based on learned representations.
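The handwritten-digit example can be approximated with scikit-learn, which provides a `BernoulliRBM` transformer but no complete DBN class. This is a minimal sketch, assuming scikit-learn is installed: two RBMs are stacked and pre-trained without labels, and only the final logistic-regression step sees the labels. Unlike a true DBN, the RBM weights are not fine-tuned with backpropagation afterward, and the layer sizes, learning rates, and iteration counts are illustrative choices rather than tuned values.

```python
from sklearn.datasets import load_digits
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neural_network import BernoulliRBM
from sklearn.pipeline import Pipeline

# 8x8 grayscale digit images, flattened to 64 features with values 0..16
digits = load_digits()
X = digits.data / 16.0  # BernoulliRBM expects inputs in [0, 1]
y = digits.target
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

model = Pipeline([
    # Each RBM is fit on the previous step's output; BernoulliRBM.fit ignores y
    ("rbm1", BernoulliRBM(n_components=100, learning_rate=0.06, n_iter=20, random_state=0)),
    ("rbm2", BernoulliRBM(n_components=50, learning_rate=0.06, n_iter=20, random_state=0)),
    # Only this supervised step uses the labels
    ("clf", LogisticRegression(max_iter=1000)),
])
model.fit(X_train, y_train)
accuracy = model.score(X_test, y_test)
print(f"test accuracy: {accuracy:.3f}")
```

Slicing the pipeline with `model[:-1]` gives just the stacked RBMs, whose `transform` output can serve as a reduced 50-dimensional representation of each image.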

Deep Belief Networks FAQ

- What is a Deep Belief Network?

A Deep Belief Network (DBN) is a multi-layer generative neural network model, usually pre-trained without labels, that learns hierarchical representations of data.

- How does a DBN differ from other deep learning models?

Most modern deep learning models are trained end to end with backpropagation, whereas DBNs are first pre-trained one layer at a time, typically without labels. This makes them well suited to unsupervised feature extraction.

- Who developed Deep Belief Networks?

Geoffrey Hinton and colleagues were pioneers in developing DBNs for machine learning.

- Where are DBNs used today?

DBNs are primarily used in research and specialized applications in data representation and pre-training.

- How does DBN training work?

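A DBN is trained one layer at a time. Each pair of adjacent layers is treated as an RBM: the first RBM is trained on the raw data, and its hidden-unit activations become the training data for the next RBM, so each layer progressively captures patterns in the layer below it.

- Can DBNs work with labeled data?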

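Yes. Although DBNs are mainly pre-trained without labels, labels can be added (for example, as extra units in the top-level RBM), or the pre-trained network can be fine-tuned with backpropagation for supervised tasks such as classification.

- What fields benefit from DBNs?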

DBNs are used in fields like image processing, speech recognition, and healthcare diagnostics.

- What is a Restricted Boltzmann Machine in DBNs?

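An RBM is a two-layer generative neural network with a visible layer and a hidden layer. Every visible unit is connected to every hidden unit, but units within the same layer are not connected to each other (the “restriction”). RBMs are the building blocks that are stacked to form a DBN, and each one learns features of its input without labels.

- Why are DBNs less popular now?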

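Architectures such as convolutional and recurrent neural networks and transformers, which can be trained end to end with backpropagation, have largely overshadowed DBNs, though DBNs still serve specific uses.

- How does DBN training differ from backpropagation?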

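DBN pre-training trains each layer as an RBM, usually with an algorithm called contrastive divergence, instead of backpropagating an error signal through the whole network. This lets DBNs learn from unlabeled data. Backpropagation is often still used afterward to fine-tune the whole network for a specific task.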
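For readers who want the underlying math, the standard binary RBM used as a DBN building block can be summarized as below. This is the common textbook formulation, not notation taken from this entry: v is the visible vector, h the hidden vector, W the weight matrix, a and b the visible and hidden biases, Z the normalizing constant, σ the logistic sigmoid, and ε a learning rate. The last line is the one-step contrastive divergence (CD-1) update, where the “recon” statistics come from a single Gibbs step starting at the data.

```latex
E(\mathbf{v},\mathbf{h}) = -\mathbf{a}^{\top}\mathbf{v} - \mathbf{b}^{\top}\mathbf{h} - \mathbf{v}^{\top} W \mathbf{h},
\qquad
p(\mathbf{v},\mathbf{h}) = \frac{e^{-E(\mathbf{v},\mathbf{h})}}{Z}

p(h_j = 1 \mid \mathbf{v}) = \sigma\!\Big(b_j + \sum_i v_i W_{ij}\Big),
\qquad
p(v_i = 1 \mid \mathbf{h}) = \sigma\!\Big(a_i + \sum_j W_{ij} h_j\Big),
\qquad
\sigma(x) = \frac{1}{1 + e^{-x}}

\Delta W_{ij} = \varepsilon \left( \langle v_i h_j \rangle_{\text{data}} - \langle v_i h_j \rangle_{\text{recon}} \right)
```

Because there are no connections within a layer, all hidden units are conditionally independent given the visible units (and vice versa), which is what makes each Gibbs step, and therefore each layer of DBN pre-training, cheap to compute.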

Deep Belief Networks Related Words

- Categories/Topics:

- Neural Networks

- Unsupervised Learning

- Deep Learning

Did you know?

DBNs played a pivotal role in the revival of deep learning in the mid-2000s. Geoffrey Hinton’s work on DBNs laid the groundwork for developing more advanced neural network models, setting the stage for today’s AI breakthroughs.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
