Energy-based Regularization

Energy-based Regularization Definition

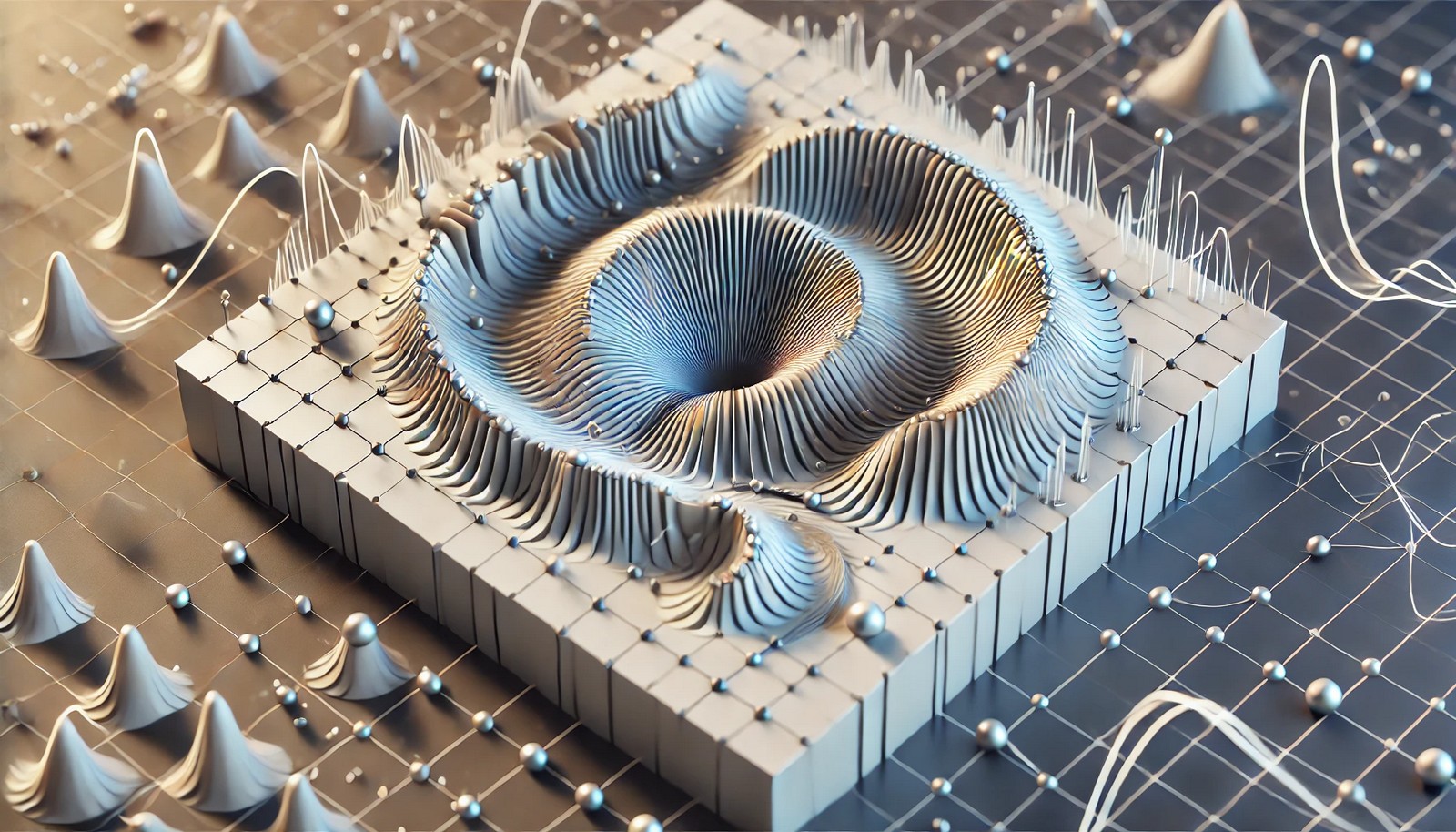

Energy-based Regularization is a technique in artificial intelligence (AI) and machine learning that aims to improve generalization by penalizing undesirable configurations in a model's energy landscape. A regularization term added to the training objective discourages high-energy states, steering the model toward simpler, more stable solutions that are less prone to overfitting. The approach is most useful when data is limited or the input space is high-dimensional, where an unconstrained model can easily fit noise instead of the underlying pattern.
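The definition above does not fix a particular formula, so the following is only a minimal sketch of one possible reading. It assumes a classifier whose energy is defined as the negative log-sum-exp of its logits (a common convention in energy-based treatments of classifiers, not something stated on this page) and adds a squared-hinge penalty on energies above a margin. The names `free_energy`, `energy_penalty`, `lam`, and `margin` are illustrative, not part of any library.

```python
import numpy as np

def logsumexp(z, axis=-1):
    # Numerically stable log(sum(exp(z))) along `axis`.
    m = np.max(z, axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.sum(np.exp(z - m), axis=axis))

def free_energy(logits):
    # Assumed energy definition: E(x) = -logsumexp(logits).
    return -logsumexp(logits, axis=-1)

def cross_entropy(logits, labels):
    # Standard data-fitting loss for integer class labels.
    log_probs = logits - logsumexp(logits, axis=-1)[:, None]
    return -np.mean(log_probs[np.arange(len(labels)), labels])

def energy_penalty(logits, margin=-5.0):
    # Squared hinge: only samples whose energy exceeds `margin` are penalized.
    excess = np.maximum(0.0, free_energy(logits) - margin)
    return np.mean(excess ** 2)

def regularized_loss(logits, labels, lam=0.1, margin=-5.0):
    # Total objective = data loss + lam * energy penalty.
    return cross_entropy(logits, labels) + lam * energy_penalty(logits, margin)

if __name__ == "__main__":
    logits = np.array([[2.0, 0.0], [0.0, 3.0]])  # toy batch, 2 samples, 2 classes
    labels = np.array([0, 1])
    print("data loss:       ", cross_entropy(logits, labels))
    print("regularized loss:", regularized_loss(logits, labels))
```

Setting `lam` to zero recovers the plain data loss, so the strength of the penalty can be tuned like any other regularization coefficient.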

Energy-based Regularization Explained Easy

Think of Energy-based Regularization as a way to keep your model from “going wild.” Imagine a race car driving on a curvy track. If it goes too fast, it’ll veer off the path and crash. Energy-based Regularization is like setting a speed limit so the car (or model) stays on track. By limiting the “energy” in certain parts, it helps the model avoid extreme behaviors and stay focused.

Energy-based Regularization Origin

The concept of regularization emerged from statistical modeling, while energy functions entered machine learning through physics-inspired models such as Hopfield networks (1982) and Boltzmann machines (mid-1980s). Energy-based methods became more prominent in the 2000s as neural networks grew more sophisticated.

Energy-based Regularization Etymology

The term "energy-based" reflects the mathematical idea of minimizing an “energy function” to create stable, low-energy configurations in AI models, thus promoting generalization.
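As a hedged illustration of why low energy is associated with preferred configurations, energy-based models are commonly tied to probabilities through the Gibbs (Boltzmann) distribution. The symbols below ($E_\theta$ for the energy function, $Z(\theta)$ for the normalizing constant) are standard notation introduced here for illustration and do not come from this page:

$$
p_\theta(x) = \frac{\exp\bigl(-E_\theta(x)\bigr)}{Z(\theta)}, \qquad Z(\theta) = \int \exp\bigl(-E_\theta(x)\bigr)\, dx
$$

Under this convention, lowering the energy of a configuration $x$ raises its probability, which is why minimizing an energy function favors the configurations the model should consider likely.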

Energy-based Regularization Usage Trends

Energy-based Regularization has gained attention in recent years as AI applications expand into complex fields like autonomous systems and medical diagnostics, where model robustness and reliability are essential. Its usage is especially popular in deep learning and reinforcement learning communities focused on preventing overfitting in high-dimensional data environments.

Energy-based Regularization Usage

- Formal/Technical Tagging:

- Machine Learning

- Regularization Techniques

- Model Optimization

- Typical Collocations:

- "energy-based penalty"

- "energy regularization"

- "preventing overfitting with energy-based models"

Energy-based Regularization Examples in Context

- In self-driving technology, Energy-based Regularization helps stabilize the learning process, allowing models to react reliably to unexpected situations.

- Energy-based Regularization is used in image classification tasks to reduce noise and ensure stable categorization across various image qualities.

- In reinforcement learning, Energy-based Regularization limits over-exploration by setting bounds, leading to more efficient strategies in complex environments.

Energy-based Regularization FAQ

- What is Energy-based Regularization?

It’s a method that uses energy penalties to make AI models more stable and less prone to overfitting.

- How does it help in machine learning?

It guides models to simpler solutions, which makes them perform better on new data.

- Why is regularization important in AI?

Regularization prevents models from memorizing data, helping them generalize and make accurate predictions.

- How is it different from standard regularization?

It focuses on managing the “energy” or stability of a model, rather than solely minimizing complexity.

- Where is Energy-based Regularization used?

It’s used in areas requiring reliable AI models, like medical imaging and autonomous systems.

- Does it slow down model training?

Slightly, as it adds constraints, but this trade-off is beneficial for model robustness.

- Can it be applied to all types of machine learning models?

Yes, but it’s most effective in high-dimensional or complex models like neural networks.

- How does it affect model accuracy?

By preventing overfitting, it usually improves accuracy on new, unseen data.

- Is Energy-based Regularization commonly used?

It's growing in popularity in fields where stability and robustness are critical.

- How does it relate to energy minimization techniques?

It applies similar concepts, aiming to find low-energy configurations for stability.
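To make the idea of "finding low-energy configurations" concrete, here is a small sketch of classical energy minimization using a Hopfield-style network. It is an illustration of energy minimization in general, not of Energy-based Regularization itself, and the function names (`hopfield_weights`, `energy`, `relax`) are hypothetical helpers chosen for this example. With symmetric weights and a zero diagonal, each single-unit update can only keep the energy the same or lower it.

```python
import numpy as np

def hopfield_weights(patterns):
    # Hebbian rule: symmetric weights with a zero diagonal.
    p = np.asarray(patterns, dtype=float)
    w = p.T @ p / p.shape[1]
    np.fill_diagonal(w, 0.0)
    return w

def energy(w, s):
    # Classical Hopfield energy: E(s) = -1/2 * s^T W s.
    return -0.5 * s @ w @ s

def relax(w, s, sweeps=5):
    # Asynchronous updates: set each unit to the sign of its local field.
    s = np.asarray(s, dtype=float).copy()
    energies = [energy(w, s)]
    for _ in range(sweeps):
        for i in range(len(s)):
            s[i] = 1.0 if w[i] @ s >= 0 else -1.0
            energies.append(energy(w, s))
    return s, energies

if __name__ == "__main__":
    stored = np.array([1, -1, 1, -1, 1, -1], dtype=float)
    w = hopfield_weights([stored])
    noisy = stored.copy()
    noisy[0] = -noisy[0]  # corrupt one unit
    final, energies = relax(w, noisy)
    print("recovered stored pattern:", np.array_equal(final, stored))
    print("start energy:", energies[0], "final energy:", energies[-1])
```

The corrupted state sits at a higher energy than the stored pattern, and the updates slide it downhill until it settles, which is the stability property the answer above refers to.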

Energy-based Regularization Related Words

- Categories/Topics:

- Machine Learning

- Artificial Intelligence

- Neural Networks

- Reinforcement Learning

Did you know?

Energy-based Regularization has roots in physics, where energy minimization techniques were applied to stabilize physical systems. Adapting this to AI has enabled the development of models that mimic certain stability properties of natural systems.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
