Long Short-Term Memory (LSTM)

Quick Navigation:

- Long Short-Term Memory Definition

- Long Short-Term Memory Explained Easy

- Long Short-Term Memory Origin

- Long Short-Term Memory Etymology

- Long Short-Term Memory Usage Trends

- Long Short-Term Memory Usage

- Long Short-Term Memory Examples in Context

- Long Short-Term Memory FAQ

- Long Short-Term Memory Related Words

Long Short-Term Memory Definition

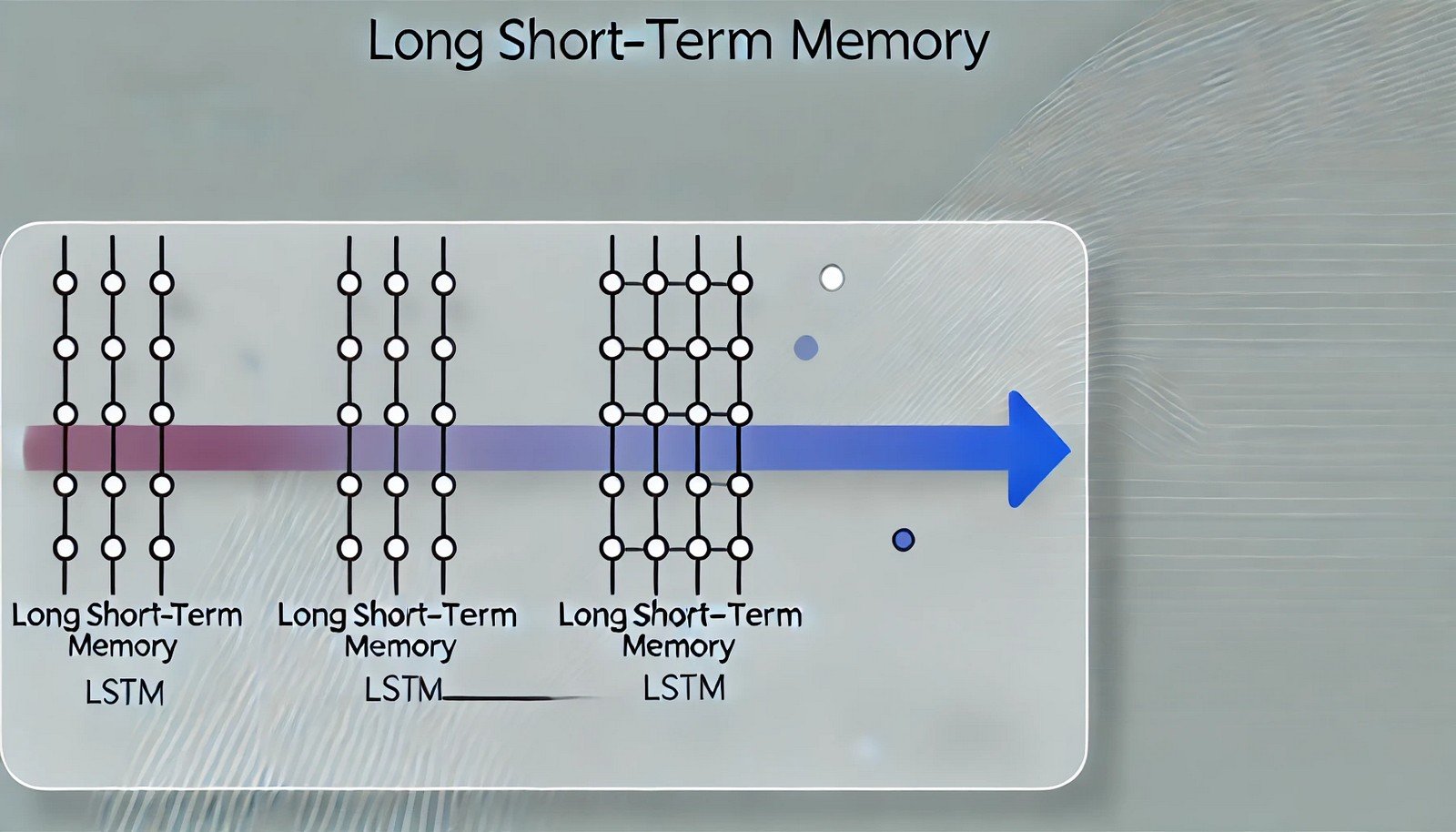

Long Short-Term Memory, or LSTM, is a type of recurrent neural network (RNN) architecture specifically designed to address the limitations of traditional RNNs in retaining information over longer sequences. By incorporating memory cells, input gates, forget gates, and output gates, LSTM networks maintain information across longer time intervals, which is essential for tasks involving sequential data. Applications range from language processing to anomaly detection, as LSTM networks can handle dependencies in data that are separated by long delays.
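The interplay of the memory cell and the three gates can be sketched in a few lines of plain Python. This is a minimal illustration of the commonly used LSTM formulation, not a production implementation: the helper names (`affine`, `lstm_step`, `init_params`), the random weight range, and the tiny layer sizes are all assumptions chosen for readability.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def affine(W, U, b, x, h):
    """Compute W @ x + U @ h + b using plain Python lists."""
    return [
        sum(w * xi for w, xi in zip(W_row, x))
        + sum(u * hi for u, hi in zip(U_row, h))
        + b_i
        for W_row, U_row, b_i in zip(W, U, b)
    ]

def lstm_step(x, h_prev, c_prev, params):
    """Run one LSTM time step and return (hidden state, cell state)."""
    f = [sigmoid(z) for z in affine(*params["forget"], x, h_prev)]       # forget gate: how much of c_prev to keep
    i = [sigmoid(z) for z in affine(*params["input"], x, h_prev)]        # input gate: how much new information to write
    o = [sigmoid(z) for z in affine(*params["output"], x, h_prev)]       # output gate: how much of the cell to expose
    g = [math.tanh(z) for z in affine(*params["candidate"], x, h_prev)]  # candidate values for the cell
    # Memory cell update: keep part of the old state, add part of the candidate.
    c = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    h = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c)]
    return h, c

def init_params(input_size, hidden_size, seed=0):
    """Hypothetical helper: small random weights and zero biases per gate."""
    rng = random.Random(seed)
    def block():
        W = [[rng.uniform(-0.5, 0.5) for _ in range(input_size)] for _ in range(hidden_size)]
        U = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
        b = [0.0] * hidden_size
        return (W, U, b)
    return {name: block() for name in ("forget", "input", "output", "candidate")}

# Feed a short sequence through the cell, carrying h and c forward.
params = init_params(input_size=2, hidden_size=3)
h, c = [0.0] * 3, [0.0] * 3
for x in [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]:
    h, c = lstm_step(x, h, c, params)
```

The cell state `c` is the "memory": it changes only through elementwise scaling by the forget gate and addition of gated candidate values, which is what lets information survive across many steps.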

Long Short-Term Memory Explained Easy

Imagine trying to remember a story that is told over several days. An ordinary recurrent network often "forgets" the early parts of a long story, but an LSTM is like having a notebook: it jots down the important parts, reviews them later, and crosses out what no longer matters. This way, it "remembers" what's essential for longer. This ability makes LSTMs very good at tasks like translating languages or understanding speech, where remembering the sequence is important.

Long Short-Term Memory Origin

LSTMs were introduced by Sepp Hochreiter and Jürgen Schmidhuber in 1997 to solve the problem of long-term dependencies in machine learning models. Traditional RNNs often struggle with vanishing gradients, making it difficult to learn dependencies far back in the sequence. The development of LSTMs marked a breakthrough in handling such sequential data effectively.
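The vanishing-gradient problem can be illustrated with a toy calculation. The sketch below compares how a gradient shrinks through a scalar tanh recurrence with how it behaves along an LSTM's direct cell-state path. It rests on simplifying assumptions: a single scalar weight `w`, no inputs, and a constant forget-gate value, whereas real gates vary with the input and other gradient paths exist. The function names are illustrative.

```python
import math

def rnn_gradient(w, steps, h0=0.5):
    """d h_T / d h_0 for the scalar recurrence h_t = tanh(w * h_{t-1})."""
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        grad *= w * (1.0 - h * h)  # chain rule: each step multiplies in one more factor
    return grad

def cell_state_gradient(forget_gate, steps):
    """d c_T / d c_0 along the direct path c_t = f * c_{t-1} + ..., for a constant gate f."""
    return forget_gate ** steps

simple_rnn = rnn_gradient(w=0.9, steps=50)                      # shrinks toward zero
lstm_cell = cell_state_gradient(forget_gate=0.99, steps=50)     # stays well above zero
print(simple_rnn, lstm_cell)
```

With these values, the simple recurrence multiplies together 50 factors that are each at most 0.9, so the gradient nearly disappears, while a forget gate close to 1 keeps the cell-state gradient at a usable size.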

Long Short-Term Memory Etymology

The term “long short-term memory” describes a short-term memory that lasts a long time. A standard RNN's hidden state acts as a short-term memory of recent inputs, and the LSTM's memory cell lets that short-term memory persist over many time steps.

Long Short-Term Memory Usage Trends

In recent years, LSTM networks have become foundational in many AI applications, particularly in natural language processing, time-series prediction, and audio analysis. Their popularity surged with advancements in computational power and the growth of big data, making them a favored tool in AI research and applications.

Long Short-Term Memory Usage

- Formal/Technical Tagging:

- Machine Learning

- Deep Learning

- Neural Networks

- Time-Series Analysis

- Typical Collocations:

- "LSTM network architecture"

- "sequence modeling with LSTM"

- "LSTM for speech recognition"

- "predictive modeling with LSTM"

Long Short-Term Memory Examples in Context

- In speech recognition, LSTMs are used to understand spoken words by processing sequences of audio data.

- In machine translation, LSTMs help translate between languages by remembering the context of previous words.

- Stock price prediction models use LSTM networks to analyze historical data and predict future trends.
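For time-series uses such as the stock price example, a sequence model is usually trained on fixed-length slices of history paired with the value to predict. The following is a minimal sketch of that preprocessing step only, not of a forecasting model; `make_windows` and its `window` and `horizon` parameters are hypothetical names, and the numbers are made-up sample data.

```python
def make_windows(series, window, horizon=1):
    """Split a sequence into (input window, target) pairs for prediction.

    Each input is `window` consecutive values; the target is the value
    `horizon` steps after the end of that window.
    """
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        inputs = series[start:start + window]
        target = series[start + window + horizon - 1]
        pairs.append((inputs, target))
    return pairs

# Made-up sample data: five daily values, windows of three days.
pairs = make_windows([1, 2, 3, 4, 5], window=3)
# pairs == [([1, 2, 3], 4), ([2, 3, 4], 5)]
```

Each input window would then be fed to the LSTM one value per time step, with the target used as the training label.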

Long Short-Term Memory FAQ

- What is Long Short-Term Memory (LSTM)?

LSTM is a neural network model that enables longer-term retention of information, especially useful for processing sequential data.

- How does LSTM differ from traditional RNNs?

LSTMs include mechanisms like memory cells and gates, allowing them to remember information over long sequences, unlike standard RNNs.

- Where is LSTM commonly used?

LSTMs are commonly used in language translation, speech recognition, and time-series prediction.

- What are the main components of LSTM?

LSTM networks consist of memory cells, input gates, forget gates, and output gates, managing information flow through the network.

- How does an LSTM handle long-term dependencies?

LSTMs use memory cells and gates to control what information to retain or discard over extended sequences.

- Why are LSTMs popular in natural language processing (NLP)?

LSTMs effectively handle sequential data, essential in NLP tasks where word order and context are important.

- Can LSTMs predict stock prices?

Yes, LSTMs are used in financial modeling to predict stock price trends by analyzing time-series data.

- Is LSTM suitable for real-time applications?

Yes, with optimization, LSTM models can process real-time data, making them suitable for applications like speech recognition.

- How does LSTM help in speech recognition?

LSTM networks retain audio sequence information, aiding in recognizing and interpreting spoken words accurately.

- Are LSTMs still widely used today?

Yes, despite newer models, LSTMs are still widely used for tasks that require processing sequential data over long periods.

Long Short-Term Memory Related Words

- Categories/Topics:

- Recurrent Neural Networks (RNN)

- Time-Series Modeling

- Sequential Data Processing

- Natural Language Processing (NLP)

Did you know?

LSTM networks played a crucial role in early neural machine translation systems, including the one Google introduced in Google Translate in 2016, enabling translations that were previously unmanageable due to long dependency requirements. Transformer-based models have since taken over many language processing tasks, but LSTMs remain in use for sequential data.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun, handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
