Momentum Decay

Quick Navigation:

- Momentum Decay Definition

- Momentum Decay Explained Easy

- Momentum Decay Origin

- Momentum Decay Etymology

- Momentum Decay Usage Trends

- Momentum Decay Usage

- Momentum Decay Examples in Context

- Momentum Decay FAQ

- Momentum Decay Related Words

Momentum Decay Definition

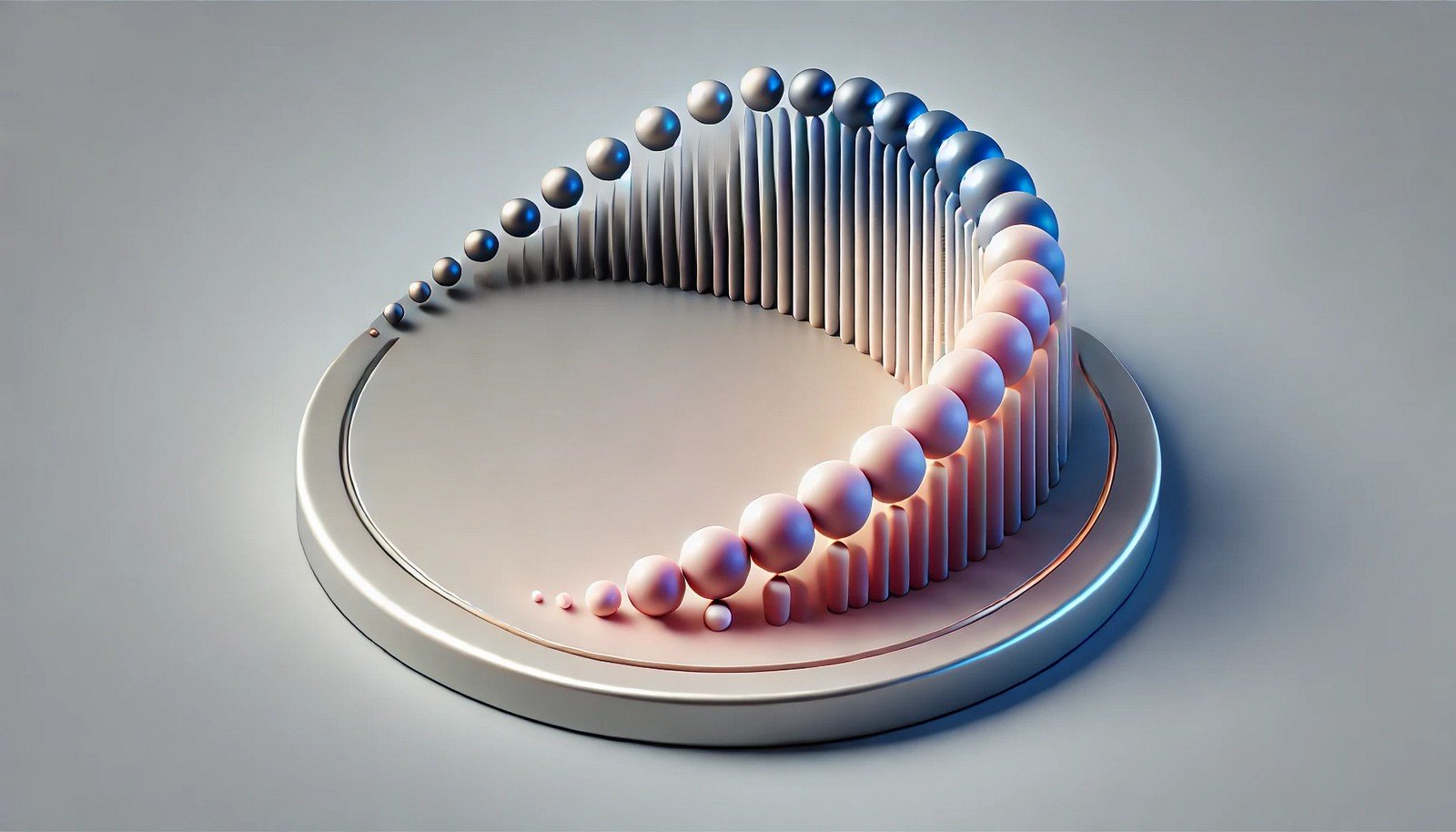

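Momentum Decay is a parameter in optimization algorithms that use momentum. It controls how quickly the influence of previous gradients fades. At each step, the accumulated momentum (the "velocity") is multiplied by a decay factor, commonly written β and often set near 0.9, before the new gradient is added. As a result, a gradient's contribution shrinks geometrically as it ages. In machine learning, this decay stabilizes convergence, reducing oscillations and allowing models to adapt to recent patterns more quickly. It’s often applied in stochastic gradient descent (SGD) variants, where it works alongside the learning rate to shape each update. It can improve training efficiency and model accuracy in tasks like image recognition and language processing.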
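A minimal sketch of what "gradually reduces the influence of previous gradients" means in practice. It assumes the common "heavy ball" form v_t = β·v_{t-1} + g_t. Some optimizers instead scale the new gradient by (1 − β), as Adam does for its first moment. The function names are hypothetical. The sketch checks that the recursive update equals an explicit sum in which a gradient that is k steps old is weighted by β^k.

```python
def momentum_velocity(grads, beta):
    """Recursive heavy-ball velocity: v_t = beta * v_{t-1} + g_t, starting from v_0 = 0."""
    v = 0.0
    for g in grads:
        v = beta * v + g  # the old velocity shrinks by a factor of beta each step
    return v


def unrolled_velocity(grads, beta):
    """The same quantity written out: a gradient that is k steps old is weighted by beta**k."""
    t = len(grads)
    return sum(beta ** (t - 1 - i) * g for i, g in enumerate(grads))


grads = [1.0, 0.5, -0.25, 2.0]
beta = 0.9
print(momentum_velocity(grads, beta))  # approximately 2.909
print(unrolled_velocity(grads, beta))  # approximately 2.909
```

With β = 0.9, the oldest gradient here (1.0) contributes only 0.9³ ≈ 0.729, while the newest (2.0) contributes at full weight. A smaller β would make older gradients fade even faster.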

Momentum Decay Explained Easy

Imagine riding a bike down a hill. If you don’t brake, you’ll keep speeding up and might lose control. Momentum Decay acts like a gentle brake: it gradually lets go of the speed you built up earlier, so the bike (or model) stays steady instead of racing down the hill. This helps the computer learn without getting too "wobbly" or going off-track.

Momentum Decay Origin

Momentum Decay emerged from efforts to make gradient-based learning more stable and efficient. The idea borrows from physics: Polyak’s "heavy ball" method (1964) added a velocity-like term to gradient descent, and momentum was later adopted for training neural networks. Controlling how quickly that momentum decays became more important as models grew larger and computing power increased.

Momentum Decay Etymology

The term "momentum decay" combines "momentum" from physics, the quantity of motion (mass times velocity) that keeps an object moving, with "decay," meaning a gradual reduction.

Momentum Decay Usage Trends

In recent years, momentum decay has become a standard part of training with deep learning frameworks such as TensorFlow and PyTorch, whose SGD optimizers expose it through a momentum argument. Researchers apply it to achieve smoother convergence and better performance in neural networks. Its use has grown in fields like natural language processing and computer vision as models have become more complex and datasets larger.
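A minimal pure-Python sketch of how an SGD optimizer's momentum argument typically works. As we understand it, it follows the update rule given in the torch.optim.SGD documentation. The buffer starts as the raw gradient on the first step and then becomes b = momentum·b + (1 − dampening)·g, followed by p = p − lr·b. Weight decay and Nesterov momentum are left out. The function name and list-based interface are hypothetical and are not PyTorch's API.

```python
def sgd_momentum_step(params, grads, bufs, lr=0.1, momentum=0.9, dampening=0.0):
    """One SGD-with-momentum step (sketch; weight decay and Nesterov omitted).

    params, grads: lists of floats, one entry per parameter.
    bufs: momentum buffers, with None entries before the first step.
    Returns the updated (params, bufs) as new lists.
    """
    new_params, new_bufs = [], []
    for p, g, b in zip(params, grads, bufs):
        if b is None:
            b = g  # first step: the buffer starts as the raw gradient
        else:
            b = momentum * b + (1 - dampening) * g  # older contents decay by `momentum`
        new_bufs.append(b)
        new_params.append(p - lr * b)
    return new_params, new_bufs


# Usage: a few steps on f(p) = p**2, whose gradient is 2 * p.
params, bufs = [1.0], [None]
for step in range(3):
    grads = [2 * p for p in params]
    params, bufs = sgd_momentum_step(params, grads, bufs)
    print(step + 1, params[0])
```

Keras's SGD documents a variant that folds the learning rate into the velocity (velocity = momentum·velocity − learning_rate·g, then w = w + velocity). With a constant learning rate and no dampening, it traces the same trajectory, because its velocity is simply −lr times the buffer above.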

Momentum Decay Usage

- Formal/Technical Tagging:

- Optimization Algorithms

- Machine Learning

- Deep Learning

- Gradient-Based Methods

- Typical Collocations:

- "apply momentum decay"

- "momentum decay rate"

- "control momentum in optimization"

- "momentum decay parameter tuning"

Momentum Decay Examples in Context

- Momentum decay is used to stabilize model training in deep neural networks by averaging out noisy or oscillating gradients that might otherwise lead to erratic updates.

- Applying momentum decay in gradient descent optimization can speed up convergence when training on large datasets.

- Researchers often tune momentum decay rates to optimize model performance in complex tasks, such as speech recognition and image classification.
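To illustrate the kind of tuning described in the last example, here is a small sketch that sweeps several momentum decay rates on a toy problem and counts the steps each needs to converge. Everything here is an assumption chosen for illustration: the ill-conditioned quadratic f(x) = ½·Σ cᵢ·xᵢ² with curvatures (1, 30), the learning rate of 0.03, the tolerance, and the β grid. Real models are tuned against validation metrics, not a closed-form loss.

```python
import math


def steps_to_converge(beta, lr=0.03, curvatures=(1.0, 30.0), tol=1e-6, max_steps=10_000):
    """Heavy-ball momentum on f(x) = 0.5 * sum(c_i * x_i**2), starting at x = (1, ..., 1).

    Returns the first step at which ||x|| < tol, or max_steps if that never happens.
    """
    x = [1.0] * len(curvatures)
    v = [0.0] * len(curvatures)
    for step in range(1, max_steps + 1):
        for i, c in enumerate(curvatures):
            g = c * x[i]  # gradient of 0.5 * c * x_i**2
            v[i] = beta * v[i] + g  # previous velocity decays by beta
            x[i] -= lr * v[i]
        if math.sqrt(sum(xi * xi for xi in x)) < tol:
            return step
    return max_steps


betas = [0.0, 0.5, 0.7, 0.9, 0.99]
steps = {b: steps_to_converge(b) for b in betas}
best = min(steps, key=steps.get)
for b in betas:
    print(f"beta={b:<5} steps={steps[b]}")
print("best beta on this toy problem:", best)
```

On this particular problem, a middle value wins. With β = 0 the flat direction crawls, while β = 0.99 keeps so much old velocity that the iterates oscillate for a long time. The best value depends on the curvature and learning rate, which is why it is tuned rather than fixed.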

Momentum Decay FAQ

- What is momentum decay in machine learning?

Momentum decay reduces the influence of past gradients over time to stabilize model training.

- Why is momentum decay important in optimization?

It dampens oscillations and helps the model converge smoothly to a solution.

- How does momentum decay affect model accuracy?

It can speed up convergence and, by reducing erratic updates, may help the model reach higher accuracy.

- Where is momentum decay applied?

It’s applied in optimization algorithms such as stochastic gradient descent with momentum when training neural networks.

- What’s the difference between momentum and momentum decay?

Momentum carries the influence of past gradients forward into each update, while momentum decay controls how quickly that carried-over influence fades.

- How is momentum decay different from learning rate decay?

Momentum decay controls how long past gradients keep influencing updates, while learning rate decay reduces the step size over the course of training.

- Can momentum decay improve convergence in all models?

It’s especially beneficial in deep learning but not always necessary for simpler models.

- How do you choose a momentum decay rate?

Values around 0.9 are a common starting point. The rate is then tuned empirically based on the model, the data, and validation performance.

- What are some challenges of using momentum decay?

Choosing a good rate can be challenging. Too low a value gives little smoothing, while too high a value can cause overshooting and oscillation that slow or destabilize learning.

- Is momentum decay commonly used in modern AI?

Yes, it’s widely used in training deep neural networks to improve stability.
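A small sketch contrasting the two kinds of decay from the FAQ. The first function measures how long a gradient "lingers" under momentum decay: its weight is β^k after k steps. The total weight across all steps is 1/(1 − β), which is why β = 0.9 is often described as averaging over roughly the last 10 gradients. The second function is a hypothetical step schedule for learning-rate decay. It multiplies the step size by gamma every step_size epochs, and both of those values are illustrative assumptions.

```python
def steps_until_negligible(beta, threshold=0.01):
    """Number of steps until a gradient's momentum weight beta**k drops below threshold."""
    k, weight = 0, 1.0
    while weight >= threshold:
        weight *= beta  # one more step of momentum decay
        k += 1
    return k


def step_decay_lr(lr0, epoch, gamma=0.1, step_size=30):
    """Hypothetical learning-rate decay: multiply lr0 by gamma every step_size epochs."""
    return lr0 * gamma ** (epoch // step_size)


# Momentum decay: how long each gradient keeps influencing updates.
for beta in (0.5, 0.9, 0.99):
    print(f"beta={beta}: total weight ~ {1 / (1 - beta):.0f}, "
          f"a gradient fades below 1% after {steps_until_negligible(beta)} steps")

# Learning rate decay: how large each step is as training progresses.
for epoch in (0, 29, 30, 60):
    print(f"epoch {epoch}: lr = {step_decay_lr(0.1, epoch):g}")
```

The two settings are independent. β changes how much history each update carries, while the schedule changes how far each update moves. The two can be combined, which is one reason the momentum rate is usually tuned together with the learning rate.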

Momentum Decay Related Words

- Categories/Topics:

- Neural Networks

- Optimization Techniques

- Gradient Descent

Did you know?

Momentum decay, inspired by physics, was adopted in machine learning to help neural networks converge faster and roll through flat regions and shallow local minima instead of getting "stuck" in them. This approach has been important in training the models behind many AI applications we use daily.

PicDictionary.com is an online dictionary in pictures. If you have questions or suggestions, please reach out to us on WhatsApp or Twitter.

Authors | Arjun Vishnu | @ArjunAndVishnu

I am Vishnu. I like AI, Linux, Single Board Computers, and Cloud Computing. I create the web & video content, and I also write for popular websites.

My younger brother, Arjun handles image & video editing. Together, we run a YouTube Channel that's focused on reviewing gadgets and explaining technology.
